Android Audio Study (16): createTrack

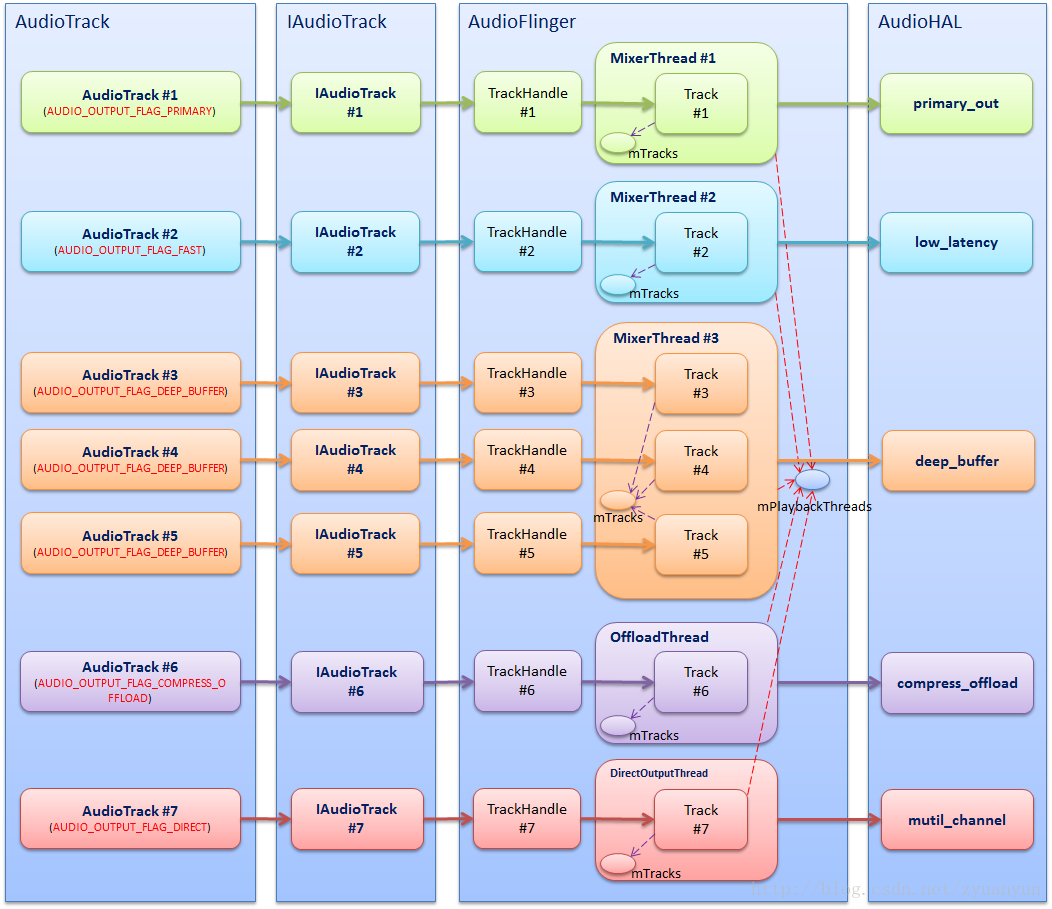

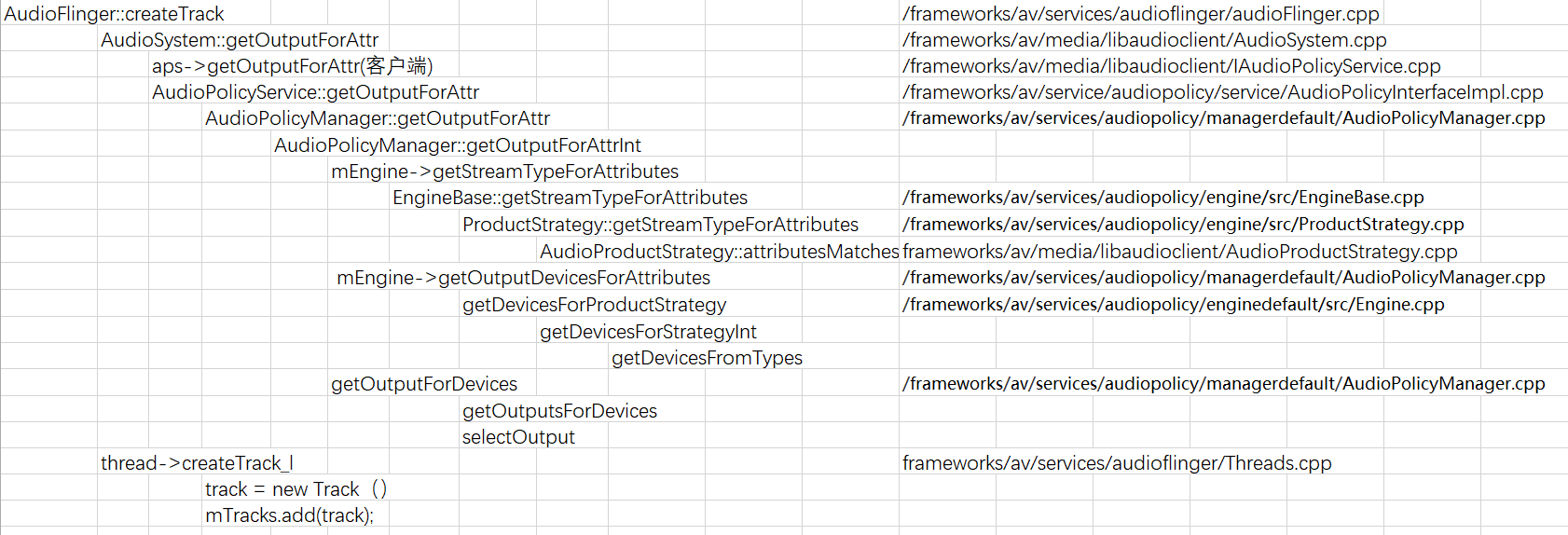

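Playback creates an AudioTrack object. The call flow was analyzed in part 5 of this series (Android Audio Study (5): AudioTrack Call Methods). Creating the AudioTrack object eventually calls AudioFlinger's createTrack method. The call flow is as follows: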

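createTrack first calls getOutputForAttr to find the output that matches the attributes passed down. The argument is &output.outputId, which corresponds to the audio_io_handle_t *output parameter; as I understand it, what is ultimately obtained is the id of the output. This call ends up in AudioPolicyManager's getOutputForAttr.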

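audio_io_handle_t *output is an important link between AudioTrack/AudioRecord/AudioSystem, AudioFlinger, and AudioPolicyManager. Recall that when an output stream device is opened and a PlaybackThread is created, the system allocates a globally unique value as the audio_io_handle_t and adds the audio_io_handle_t together with the PlaybackThread to the key-value vector mPlaybackThreads. Because audio_io_handle_t and PlaybackThread map one to one, an audio_io_handle_t is enough to look up its PlaybackThread in mPlaybackThreads. You can simply think of audio_io_handle_t as the index or thread id of a PlaybackThread. This corresponds to AudioFlinger::openOutput_l():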

    if (status == NO_ERROR) {
        if (flags & AUDIO_OUTPUT_FLAG_MMAP_NOIRQ) {
            sp<MmapPlaybackThread> thread =
                    new MmapPlaybackThread(this, *output, outHwDev, outputStream, mSystemReady);
            mMmapThreads.add(*output, thread);
            ALOGV("openOutput_l() created mmap playback thread: ID %d thread %p",
                  *output, thread.get());
            return thread;
        } else {
            sp<PlaybackThread> thread;
            if (flags & AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD) {
                thread = new OffloadThread(this, outputStream, *output, mSystemReady);
                ALOGV("openOutput_l() created offload output: ID %d thread %p",
                      *output, thread.get());
            } else if ((flags & AUDIO_OUTPUT_FLAG_DIRECT)
                    || !isValidPcmSinkFormat(config->format)
                    || !isValidPcmSinkChannelMask(config->channel_mask)) {
                thread = new DirectOutputThread(this, outputStream, *output, mSystemReady);
                ALOGV("openOutput_l() created direct output: ID %d thread %p",
                      *output, thread.get());
            } else {
                thread = new MixerThread(this, outputStream, *output, mSystemReady);
                ALOGV("openOutput_l() created mixer output: ID %d thread %p",
                      *output, thread.get());
            }
            // Register the new thread under its io handle
            mPlaybackThreads.add(*output, thread);
            mPatchPanel.notifyStreamOpened(outHwDev, *output);
            return thread;
        }
    }

Obtaining the output involves three key steps. The first is to find the stream type from the attributes, which is done by getStreamTypeForAttributes. The attributes are the parameters passed down by the application, including usage, contentType, and so on. The input attr is compared with the attributes of each ProductStrategy, checking contentType, usage, and the other fields in turn (as mentioned in an earlier part, if the configuration file does not define them, they are initialized in EngineConfig). On a match, the streamType configured for that ProductStrategy is selected. The matching itself is performed by attributesMatches. The code is as follows:

    audio_stream_type_t ProductStrategy::getStreamTypeForAttributes(
            const audio_attributes_t &attr) const
    {
        const auto &iter = std::find_if(begin(mAttributesVector), end(mAttributesVector),
                [&attr](const auto &supportedAttr) {
                    return AudioProductStrategy::attributesMatches(
                            supportedAttr.mAttributes, attr); });
        if (iter == end(mAttributesVector)) {
            return AUDIO_STREAM_DEFAULT;
        }
        audio_stream_type_t streamType = iter->mStream;
        ALOGW_IF(streamType == AUDIO_STREAM_DEFAULT,
                 "%s: Strategy %s supporting attributes %s has not stream type associated"
                 "fallback on MUSIC. Do not use stream volume API", __func__, mName.c_str(),
                 toString(attr).c_str());
        return streamType != AUDIO_STREAM_DEFAULT ? streamType : AUDIO_STREAM_MUSIC;
    }

    // Keep in sync with android/media/audiopolicy/AudioProductStrategy#attributeMatches
    bool AudioProductStrategy::attributesMatches(const audio_attributes_t refAttributes,
                                                 const audio_attributes_t clientAttritubes)
    {
        if (refAttributes == AUDIO_ATTRIBUTES_INITIALIZER) {
            // The default product strategy is the strategy that holds default attributes by convention.
            // All attributes that fail to match will follow the default strategy for routing.
            // Choosing the default must be done as a fallback, the attributes match shall not
            // select the default.
            return false;
        }
        return ((refAttributes.usage == AUDIO_USAGE_UNKNOWN) ||
                (clientAttritubes.usage == refAttributes.usage)) &&
               ((refAttributes.content_type == AUDIO_CONTENT_TYPE_UNKNOWN) ||
                (clientAttritubes.content_type == refAttributes.content_type)) &&
               ((refAttributes.flags == AUDIO_FLAG_NONE) ||
                (clientAttritubes.flags != AUDIO_FLAG_NONE &&
                 (clientAttritubes.flags & refAttributes.flags) == refAttributes.flags)) &&
               ((strlen(refAttributes.tags) == 0) ||
                (std::strcmp(clientAttritubes.tags, refAttributes.tags) == 0));
    }
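
To make the wildcard behaviour of the match easier to see, here is a minimal standalone sketch of the same idea. The enums, the `Attributes` and `SupportedAttributes` structs, and the function names `attributesMatch` and `streamTypeFor` are simplified stand-ins invented for this illustration; they are not the AOSP types. The sketch assumes that a reference field left at its unknown or empty value matches anything, and that an all-default reference entry never matches.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified stand-ins for the AOSP enums (not the real definitions).
enum Usage { USAGE_UNKNOWN, USAGE_MEDIA, USAGE_ALARM };
enum ContentType { CONTENT_UNKNOWN, CONTENT_MUSIC, CONTENT_SPEECH };
enum StreamType { STREAM_DEFAULT, STREAM_MUSIC, STREAM_ALARM };

struct Attributes {
    Usage usage = USAGE_UNKNOWN;
    ContentType contentType = CONTENT_UNKNOWN;
    unsigned flags = 0;
    std::string tags;
};

// One entry of a strategy's attribute list: reference attributes plus a stream type.
struct SupportedAttributes {
    Attributes attributes;
    StreamType stream;
};

// A reference field left at "unknown"/empty acts as a wildcard.
bool attributesMatch(const Attributes &ref, const Attributes &client) {
    const bool refIsDefault = ref.usage == USAGE_UNKNOWN &&
            ref.contentType == CONTENT_UNKNOWN && ref.flags == 0 && ref.tags.empty();
    if (refIsDefault) {
        return false;  // the all-default entry is only ever used as a fallback
    }
    return (ref.usage == USAGE_UNKNOWN || client.usage == ref.usage) &&
           (ref.contentType == CONTENT_UNKNOWN || client.contentType == ref.contentType) &&
           (ref.flags == 0 || (client.flags & ref.flags) == ref.flags) &&
           (ref.tags.empty() || client.tags == ref.tags);
}

// First matching entry wins; a match without a stream type falls back to MUSIC.
StreamType streamTypeFor(const std::vector<SupportedAttributes> &supported,
                         const Attributes &client) {
    const auto it = std::find_if(supported.begin(), supported.end(),
            [&client](const SupportedAttributes &entry) {
                return attributesMatch(entry.attributes, client);
            });
    if (it == supported.end()) {
        return STREAM_DEFAULT;
    }
    return it->stream != STREAM_DEFAULT ? it->stream : STREAM_MUSIC;
}
```

With one entry whose reference usage is alarm and whose content type is left unknown, a client asking for alarm usage with speech content still matches that entry, because the content type is a wildcard on the reference side.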

The second step is to look up the strategy for the attributes, via getOutputDevicesForAttributes. Based on the input attr (usage, content type, flags), the Engine module determines the devices.

    DeviceVector Engine::getOutputDevicesForAttributes(const audio_attributes_t &attributes,
                                                       const sp<DeviceDescriptor> &preferredDevice,
                                                       bool fromCache) const
    {
        // First check for explicit routing device
        if (preferredDevice != nullptr) {
            ALOGV("%s explicit Routing on device %s", __func__, preferredDevice->toString().c_str());
            return DeviceVector(preferredDevice);
        }
        product_strategy_t strategy = getProductStrategyForAttributes(attributes);
        const DeviceVector availableOutputDevices = getApmObserver()->getAvailableOutputDevices();
        const SwAudioOutputCollection &outputs = getApmObserver()->getOutputs();
        //
        // @TODO: what is the priority of explicit routing? Shall it be considered first as it used to
        // be by APM?
        //
        // Honor explicit routing requests only if all active clients have a preferred route in which
        // case the last active client route is used
        sp<DeviceDescriptor> device = findPreferredDevice(outputs, strategy, availableOutputDevices);
        if (device != nullptr) {
            return DeviceVector(device);
        }
        return fromCache? mDevicesForStrategies.at(strategy) : getDevicesForProductStrategy(strategy);
    }

The matching rules depend on the strategy. In general the priority is: Bluetooth device -> wired device (such as a headset) -> USB device -> built-in audio device. The result is the output DeviceVector, whose entries correspond to the devicePort tags in the configuration file audio_policy_configuration.xml. For example, the rules for STRATEGY_MEDIA are as follows:

    case STRATEGY_MEDIA: {
        DeviceVector devices2;
        if (strategy != STRATEGY_SONIFICATION) {
            // no sonification on remote submix (e.g. WFD)
            sp<DeviceDescriptor> remoteSubmix;
            if ((remoteSubmix = availableOutputDevices.getDevice(
                    AUDIO_DEVICE_OUT_REMOTE_SUBMIX, String8("0"),
                    AUDIO_FORMAT_DEFAULT)) != nullptr) {
                devices2.add(remoteSubmix);
            }
        }
        if (isInCall() && (strategy == STRATEGY_MEDIA)) {
            devices = getDevicesForStrategyInt(
                    STRATEGY_PHONE, availableOutputDevices, availableInputDevices, outputs);
            break;
        }
        // FIXME: Find a better solution to prevent routing to BT hearing aid(b/122931261).
        if ((devices2.isEmpty()) &&
                (getForceUse(AUDIO_POLICY_FORCE_FOR_MEDIA) != AUDIO_POLICY_FORCE_NO_BT_A2DP)) {
            devices2 = availableOutputDevices.getDevicesFromType(AUDIO_DEVICE_OUT_HEARING_AID);
        }
        if ((devices2.isEmpty()) &&
                (getForceUse(AUDIO_POLICY_FORCE_FOR_MEDIA) == AUDIO_POLICY_FORCE_SPEAKER)) {
            devices2 = availableOutputDevices.getDevicesFromType(AUDIO_DEVICE_OUT_SPEAKER);
        }
        if (devices2.isEmpty() && (getLastRemovableMediaDevices().size() > 0)) {
            if ((getForceUse(AUDIO_POLICY_FORCE_FOR_MEDIA) != AUDIO_POLICY_FORCE_NO_BT_A2DP) &&
                    outputs.isA2dpSupported()) {
                // Get the last connected device of wired and bluetooth a2dp
                devices2 = availableOutputDevices.getFirstDevicesFromTypes(
                        getLastRemovableMediaDevices());
            } else {
                // Get the last connected device of wired except bluetooth a2dp
                devices2 = availableOutputDevices.getFirstDevicesFromTypes(
                        getLastRemovableMediaDevices(GROUP_WIRED));
            }
        }
        if ((devices2.isEmpty()) && (strategy != STRATEGY_SONIFICATION)) {
            // no sonification on aux digital (e.g. HDMI)
            devices2 = availableOutputDevices.getDevicesFromType(AUDIO_DEVICE_OUT_AUX_DIGITAL);
        }
        if ((devices2.isEmpty()) &&
                (getForceUse(AUDIO_POLICY_FORCE_FOR_DOCK) == AUDIO_POLICY_FORCE_ANALOG_DOCK)) {
            devices2 = availableOutputDevices.getDevicesFromType(
                    AUDIO_DEVICE_OUT_ANLG_DOCK_HEADSET);
        }
        if (devices2.isEmpty()) {
            devices2 = availableOutputDevices.getDevicesFromType(AUDIO_DEVICE_OUT_SPEAKER);
        }
        DeviceVector devices3;
        if (strategy == STRATEGY_MEDIA) {
            // ARC, SPDIF and AUX_LINE can co-exist with others.
            devices3 = availableOutputDevices.getDevicesFromTypes({
                    AUDIO_DEVICE_OUT_HDMI_ARC, AUDIO_DEVICE_OUT_SPDIF, AUDIO_DEVICE_OUT_AUX_LINE});
        }
        devices2.add(devices3);
        // device is DEVICE_OUT_SPEAKER if we come from case STRATEGY_SONIFICATION or
        // STRATEGY_ENFORCED_AUDIBLE, AUDIO_DEVICE_NONE otherwise
        devices.add(devices2);
        // If hdmi system audio mode is on, remove speaker out of output list.
        if ((strategy == STRATEGY_MEDIA) &&
                (getForceUse(AUDIO_POLICY_FORCE_FOR_HDMI_SYSTEM_AUDIO) ==
                    AUDIO_POLICY_FORCE_HDMI_SYSTEM_AUDIO_ENFORCED)) {
            devices.remove(devices.getDevicesFromType(AUDIO_DEVICE_OUT_SPEAKER));
        }
        // for STRATEGY_SONIFICATION:
        // if SPEAKER was selected, and SPEAKER_SAFE is available, use SPEAKER_SAFE instead
        if (strategy == STRATEGY_SONIFICATION) {
            devices.replaceDevicesByType(
                    AUDIO_DEVICE_OUT_SPEAKER,
                    availableOutputDevices.getDevicesFromType(
                            AUDIO_DEVICE_OUT_SPEAKER_SAFE));
        }
    } break;

Finally, the streamType and DeviceVector obtained in the first two steps, together with the input attr, determine the output. This is done by getOutputForDevices. It first derives the output flags from the streamType. For AUDIO_OUTPUT_FLAG_DIRECT (the audio stream goes straight to the audio device), openDirectOutput has to be called to open the output. Otherwise it walks the already opened outputs in mOutputs, keeps those whose supported devices include every device in devices, and then picks from that set the output that best matches the format, channelMask, and sampleRate.
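
The filter-then-select idea in that last step can be sketched in isolation. This is only a rough model under stated assumptions: `IoHandle`, `kNoOutput`, `OutputDesc`, `outputsForDevices`, and `selectBestOutput` are hypothetical names made up for the illustration, devices are plain integers, and the score (one point per matching property) is an invented rule, not the actual AOSP selection logic, which also weighs the output flags.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <set>
#include <vector>

using IoHandle = int;               // stands in for audio_io_handle_t
constexpr IoHandle kNoOutput = 0;   // hypothetical "no output found" value

// Hypothetical description of an already opened output.
struct OutputDesc {
    std::set<int> supportedDevices;  // device ids this output can route to
    int format;
    int channelMask;
    int sampleRate;
};

// Step 1: keep only the outputs whose supported devices cover every requested device.
std::vector<IoHandle> outputsForDevices(const std::set<int> &devices,
                                        const std::map<IoHandle, OutputDesc> &opened) {
    std::vector<IoHandle> result;
    for (const auto &entry : opened) {
        const std::set<int> &supported = entry.second.supportedDevices;
        // std::includes requires sorted ranges; std::set iterates in sorted order.
        if (std::includes(supported.begin(), supported.end(),
                          devices.begin(), devices.end())) {
            result.push_back(entry.first);
        }
    }
    return result;
}

// Step 2: pick the candidate with the most matching properties (illustrative scoring).
IoHandle selectBestOutput(const std::vector<IoHandle> &candidates,
                          const std::map<IoHandle, OutputDesc> &opened,
                          int format, int channelMask, int sampleRate) {
    IoHandle best = kNoOutput;
    int bestScore = -1;
    for (IoHandle handle : candidates) {
        const OutputDesc &desc = opened.at(handle);
        const int score = (desc.format == format) + (desc.channelMask == channelMask) +
                          (desc.sampleRate == sampleRate);
        if (score > bestScore) {  // ties keep the earlier candidate
            bestScore = score;
            best = handle;
        }
    }
    return best;
}
```

Splitting the work into a device filter and a separate scoring pass mirrors the description: an output that cannot reach all requested devices is never considered, however well its format matches.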

    status_t status = openDirectOutput(stream, session, &directConfig, *flags, devices, &output);
    if (status != NAME_NOT_FOUND) {
        return output;
    }
    if (audio_is_linear_pcm(config->format)) {
        // get which output is suitable for the specified stream. The actual
        // routing change will happen when startOutput() will be called
        SortedVector<audio_io_handle_t> outputs = getOutputsForDevices(devices, mOutputs);
        // at this stage we should ignore the DIRECT flag as no direct output could be found earlier
        *flags = (audio_output_flags_t)(*flags & ~AUDIO_OUTPUT_FLAG_DIRECT);
        output = selectOutput(outputs, *flags, config->format, channelMask, config->sample_rate);
    }

Back in getOutputForAttr, the selected output is finally stored in outputDesc.

    sp<SwAudioOutputDescriptor> outputDesc = mOutputs.valueFor(*output);
    sp<TrackClientDescriptor> clientDesc =
            new TrackClientDescriptor(*portId, uid, session, resultAttr, clientConfig,
                                      sanitizedRequestedPortId, *stream,
                                      mEngine->getProductStrategyForAttributes(resultAttr),
                                      toVolumeSource(resultAttr),
                                      *flags, isRequestedDeviceForExclusiveUse,
                                      std::move(weakSecondaryOutputDescs),
                                      outputDesc->mPolicyMix);
    outputDesc->addClient(clientDesc);

Returning to AudioFlinger's createTrack: once the output has been obtained, thread->createTrack_l can create the track that corresponds to the AudioTrack.

AudioFlinger.cpp

    track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,
                                  input.config.format, input.config.channel_mask,
                                  &output.frameCount, &output.notificationFrameCount,
                                  input.notificationsPerBuffer, input.speed,
                                  input.sharedBuffer, sessionId, &output.flags,
                                  callingPid, input.clientInfo.clientTid, clientUid,
                                  &lStatus, portId, input.audioTrackCallback);

Thread.cpp

    track = new Track(this, client, streamType, attr, sampleRate, format,
                      channelMask, frameCount,
                      nullptr /* buffer */, (size_t)0 /* bufferSize */, sharedBuffer,
                      sessionId, creatorPid, uid, *flags, TrackBase::TYPE_DEFAULT, portId);
    lStatus = track != 0 ? track->initCheck() : (status_t) NO_MEMORY;
    if (lStatus != NO_ERROR) {
        ALOGE("createTrack_l() initCheck failed %d; no control block?", lStatus);
        // track must be cleared from the caller as the caller has the AF lock
        goto Exit;
    }
    mTracks.add(track);

Here the PlaybackThread creates a new Track and adds it to its own mTracks list.
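
Putting the pieces together, the relationship between the io handle, the thread registry, and the per-thread track list can be modelled in a few lines. This is a toy model, not AudioFlinger: the classes `Flinger`, `PlaybackThread`, and `Track`, the `IoHandle` alias, and the methods `openOutput`, `createTrack`, `threadFor`, and `trackCount` are hypothetical simplifications, and it assumes handles are handed out from a simple counter.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <vector>

using IoHandle = int;  // stands in for audio_io_handle_t

struct Track {
    int sessionId;
};

class PlaybackThread {
public:
    // Rough analogue of createTrack_l(): build the track, then remember it.
    std::shared_ptr<Track> createTrack(int sessionId) {
        auto track = std::make_shared<Track>(Track{sessionId});
        mTracks.push_back(track);
        return track;
    }
    std::size_t trackCount() const { return mTracks.size(); }

private:
    std::vector<std::shared_ptr<Track>> mTracks;
};

class Flinger {
public:
    // Rough analogue of openOutput_l(): allocate a unique handle, register the thread.
    IoHandle openOutput() {
        const IoHandle handle = mNextHandle++;
        assert(mPlaybackThreads.count(handle) == 0);  // handles must be unique
        mPlaybackThreads[handle] = std::make_shared<PlaybackThread>();
        return handle;
    }

    // Look up the thread that owns a given handle, or nullptr if there is none.
    std::shared_ptr<PlaybackThread> threadFor(IoHandle output) const {
        const auto it = mPlaybackThreads.find(output);
        return it == mPlaybackThreads.end() ? nullptr : it->second;
    }

    // Rough analogue of createTrack(): resolve the handle to a thread, then delegate.
    std::shared_ptr<Track> createTrack(IoHandle output, int sessionId) {
        const std::shared_ptr<PlaybackThread> thread = threadFor(output);
        if (thread == nullptr) {
            return nullptr;  // unknown handle: no thread can host the track
        }
        return thread->createTrack(sessionId);
    }

private:
    IoHandle mNextHandle = 1;
    std::map<IoHandle, std::shared_ptr<PlaybackThread>> mPlaybackThreads;
};
```

Creating two tracks on the same handle leaves both in that one thread's list, while a track request for a handle that was never opened fails, which is why the output has to be resolved before the track can be created.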

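When one AudioTrack instance is constructed, AudioFlinger has a corresponding PlaybackThread instance (which may host other tracks as well), one Track instance, one TrackHandle instance, one AudioTrackServerProxy instance, and one FIFO block.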