Deploying an EFK Log Collection Service on k8s (A Beginner's "Level-Up" Journey)

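Contents

Deploying EFK

1. Install on all nodes

2. Create the NFS storage

3. Steps on the master host

4. Create NFS storage access and build the ES cluster

5. Deploy Kibana

6. Install the fluentd component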

Deploying EFK

The deployment runs on a k8s cluster.

1. Install on all nodes

yum install -y nfs-utils

yum install -y socat

2. Create the NFS storage

mkdir /data/v1 -p

vim /etc/exports

/data/v1 192.168.58.0/24(rw,sync,no_root_squash)

[root@k8s-master efk]# systemctl enable --now nfs

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-server.service → /usr/lib/systemd/system/nfs-server.service.

[root@k8s-master efk]# exportfs -arv

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.58.0/24:/data/v1".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x

exporting 192.168.58.0/24:/data/v1

[root@k8s-master efk]# showmount -e

Export list for k8s-master:

/data/v1 192.168.58.0/24
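Before moving on, it can help to confirm from a worker node that the export is actually visible. The sketch below is a minimal check and not part of the original procedure: `has_export` is a hypothetical helper name, and 192.168.58.180 is assumed to be the NFS server address because it is the master IP used later in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `showmount -e` output on stdin and succeeds
# only if the given directory appears in the first column of some line.
has_export() {
  awk -v p="$1" '$1 == p { found = 1 } END { exit !found }'
}

# Usage on a worker node (needs nfs-utils from step 1; the server IP is an assumption):
#   showmount -e 192.168.58.180 | has_export /data/v1 && echo "export visible"
```

If the check fails on a node, the usual suspects are a firewall between the hosts or a client address outside 192.168.58.0/24.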

3. Steps on the master host

Install the EFK service

rz efk.zip

unzip efk.zip

cd efk/

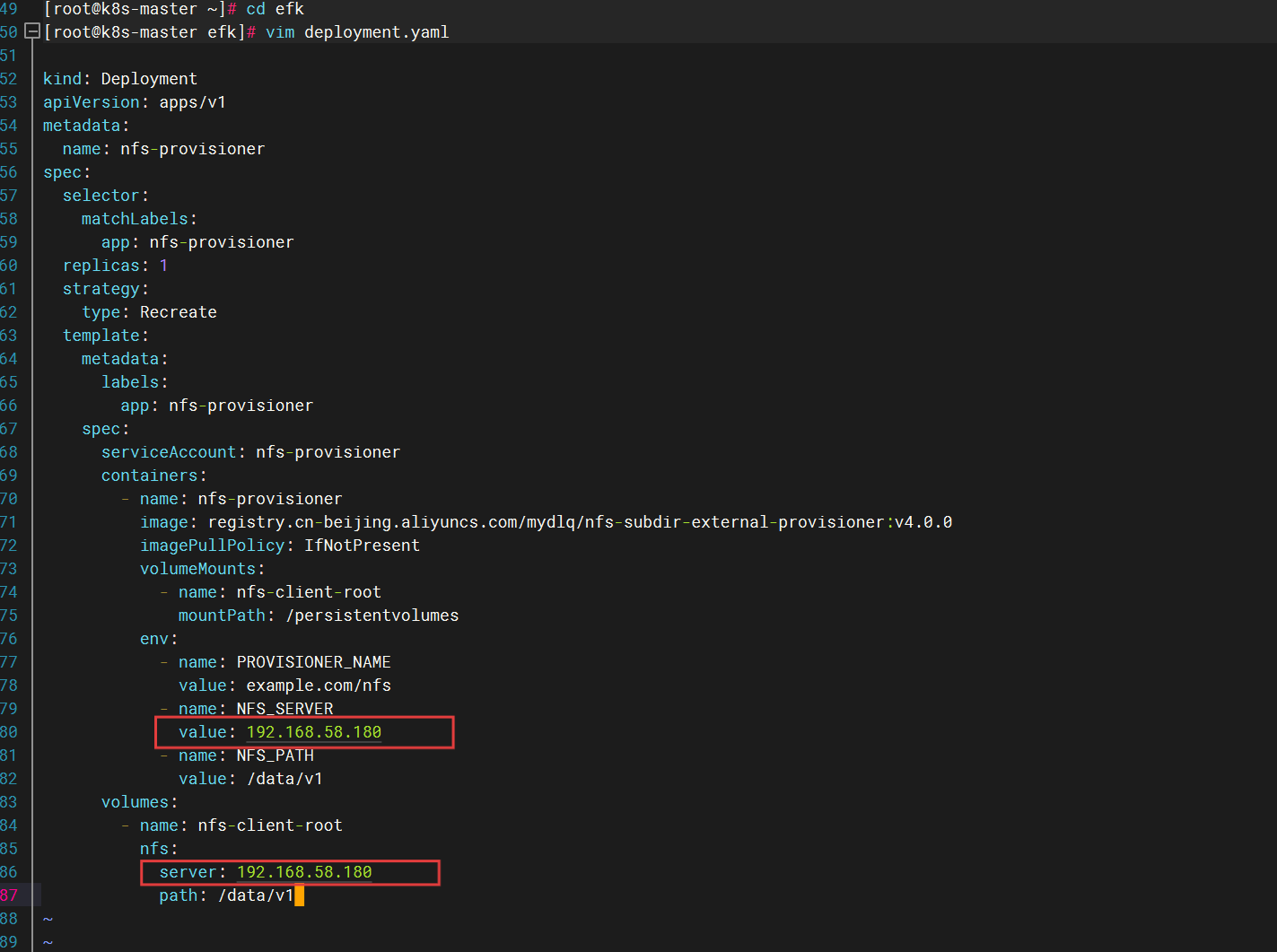

Edit the configuration files

vim deployment.yaml

# Change the IP address to this host's IP

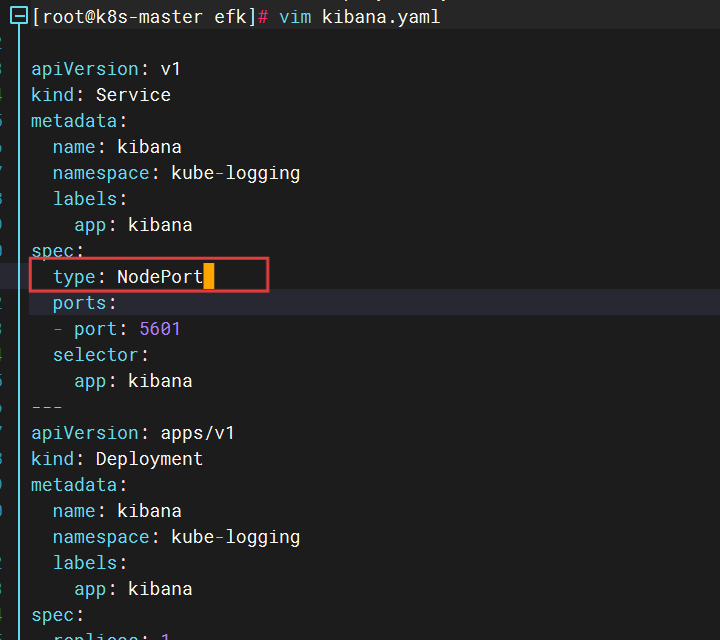

vim kibana.yaml

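# Add a type field under spec (the kibana Service is listed as NodePort in step 5)

4. Create NFS storage access and build the ES cluster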

[root@k8s-master efk]# ls
class.yaml       elasticsearch-statefulset.yaml  fluentd.yaml  kube-logging.yaml  rbac.yaml
deployment.yaml  elasticsearch_svc.yaml          kibana.yaml   pod.yaml           serviceaccount.yaml

## Create NFS storage access
kubectl create -f serviceaccount.yaml
kubectl create -f rbac.yaml
kubectl create -f deployment.yaml
kubectl create -f class.yaml

## Build the ES cluster
kubectl create -f kube-logging.yaml
kubectl create -f elasticsearch-statefulset.yaml
kubectl create -f elasticsearch_svc.yaml

[root@k8s-master efk]# kubectl -n kube-logging get po -o wide
NAME           READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
es-cluster-0   1/1     Running   0          32s   10.244.169.154   k8s-node2   <none>           <none>
es-cluster-1   1/1     Running   0          25s   10.244.36.90     k8s-node1   <none>           <none>
es-cluster-2   1/1     Running   0          20s   10.244.169.155   k8s-node2   <none>           <none>

5. Deploy Kibana

vim kibana.yml

server.name: kibana
server.host: "0"
elasticsearch.hosts: [ "http://elasticsearch:9200" ]
xpack.monitoring.ui.container.elasticsearch.enabled: true
i18n.locale: "zh-CN"

kubectl -n kube-logging create configmap kibana-configmap --from-file=kibana.yml=./kibana.yml

vim kibana.yaml

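# Append at the end (volumeMounts under the container, volumes under the pod spec)
        volumeMounts:
        - name: kibana-config
          mountPath: /usr/share/kibana/config/
      volumes:
      - name: kibana-config
        configMap:
          name: kibana-configmap

Once the ES pods are Running, apply the manifest: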

[root@k8s-master efk]# kubectl -n kube-logging get po -o wide
NAME           READY   STATUS    RESTARTS   AGE   IP               NODE        NOMINATED NODE   READINESS GATES
es-cluster-0   1/1     Running   0          32s   10.244.169.154   k8s-node2   <none>           <none>
es-cluster-1   1/1     Running   0          25s   10.244.36.90     k8s-node1   <none>           <none>
es-cluster-2   1/1     Running   0          20s   10.244.169.155   k8s-node2   <none>           <none>
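The wait in this step can be scripted instead of checked by eye. The following is a minimal sketch under stated assumptions: `pods_ready` is a hypothetical helper, the pod name prefix `es-cluster-` and the count of 3 are taken from the listing above, and the 5-second polling interval is arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get po` output on stdin. Succeeds only if
# at least `want` pods whose name starts with `prefix` are listed and every one
# of them is in the Running state with all containers ready (READY n/n).
pods_ready() {
  awk -v p="$1" -v want="$2" '
    BEGIN { n = 0; ok = 0 }
    index($1, p) == 1 {
      n++
      split($2, r, "/")
      if ($3 == "Running" && r[1] == r[2]) ok++
    }
    END { exit !(n >= want && ok == n) }
  '
}

# Usage: poll until the three ES pods are up, then deploy Kibana.
#   until kubectl -n kube-logging get po --no-headers | pods_ready es-cluster- 3; do
#     sleep 5
#   done
```

Since StatefulSet pods are named after their StatefulSet, `kubectl -n kube-logging rollout status statefulset/es-cluster` may serve the same purpose without a helper.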

kubectl apply -f kibana.yaml

[root@k8s-master efk]# kubectl -n kube-logging get po
NAME                      READY   STATUS    RESTARTS   AGE
es-cluster-0              1/1     Running   0          7m15s
es-cluster-1              1/1     Running   0          7m8s
es-cluster-2              1/1     Running   0          7m3s
kibana-7645484fc7-8gjfk   1/1     Running   0          4m59s

After the installation finishes, run the following on the master node:

[root@k8s-master efk]# kubectl port-forward --address 192.168.58.180 es-cluster-0 9200:9200 --namespace=kube-logging
Forwarding from 192.168.58.180:9200 -> 9200
Handling connection for 9200
Handling connection for 9200

Open in a browser: http://192.168.58.180:9200/_cluster/health?pretty
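The same health endpoint can be queried from a terminal. The sketch below assumes the port-forward from this step is still running; `health_status` is a hypothetical helper that pulls the `status` field out of the response with `sed` rather than a JSON parser, which is enough for this one field but not a general approach.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads the JSON body of /_cluster/health on stdin
# and prints the value of its "status" field (green, yellow, or red).
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Usage while the port-forward is active:
#   curl -s 'http://192.168.58.180:9200/_cluster/health?pretty' | health_status
```

A `green` status means all primary and replica shards are allocated; `yellow` means some replicas are not yet assigned.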

Check the exposed port

[root@k8s-master efk]# kubectl -n kube-logging get svc
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
elasticsearch   ClusterIP   None            <none>        9200/TCP,9300/TCP   8m15s
kibana          NodePort    10.97.151.213   <none>        5601:31403/TCP      6m4s

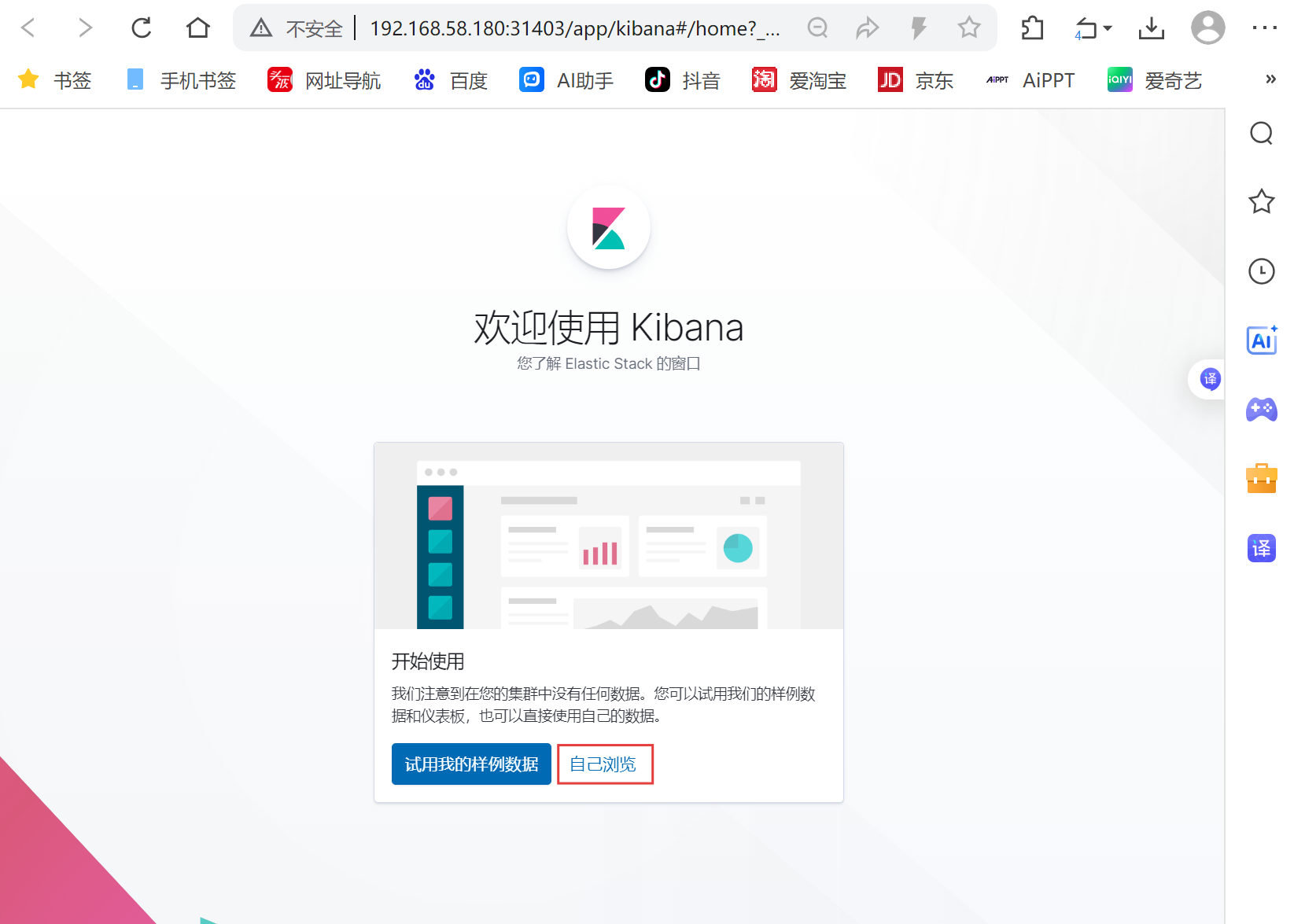

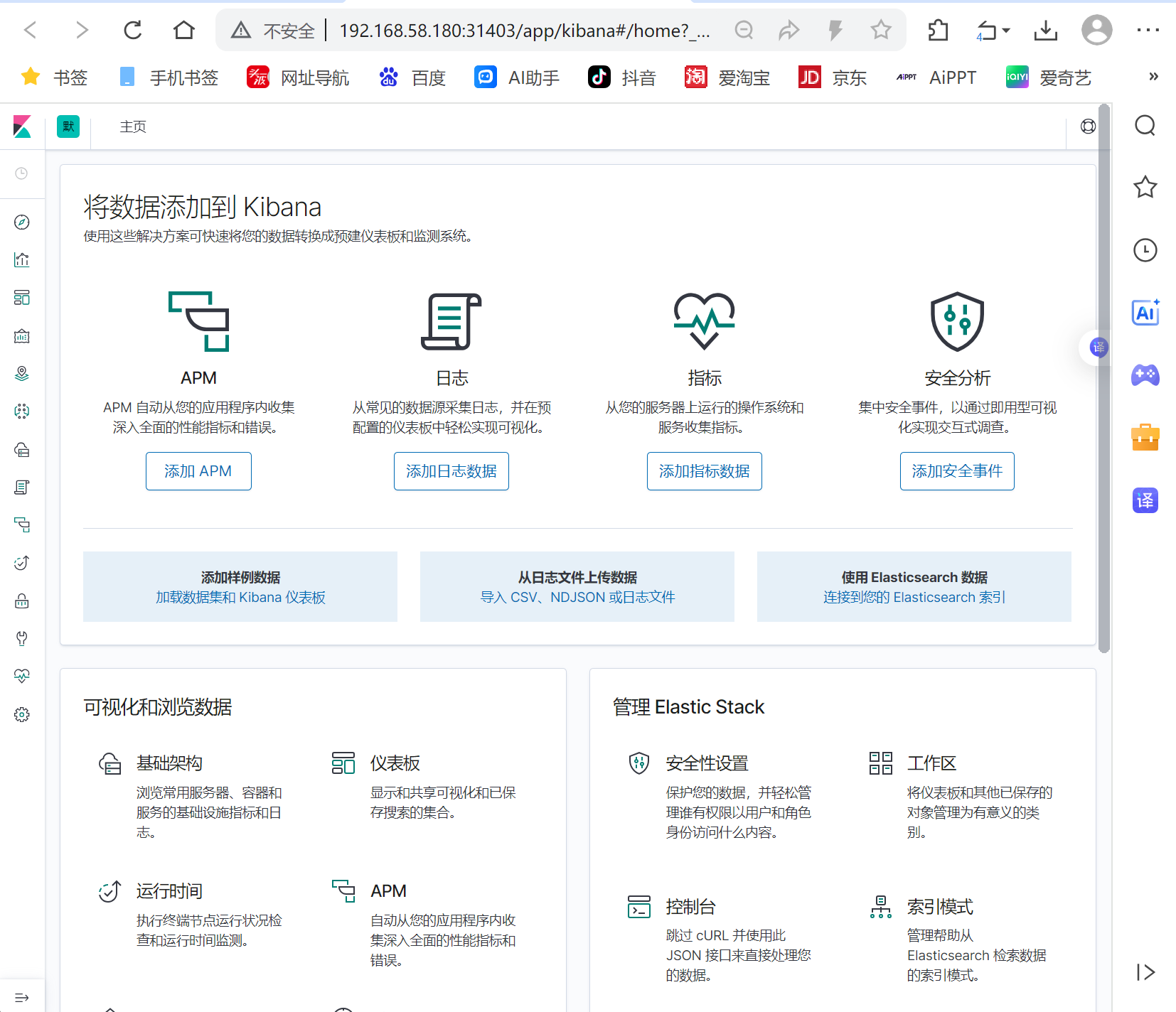

Open in a browser: 192.168.58.180:31403
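The node port is assigned by the cluster, so it will usually differ between deployments. As a small sketch, the hypothetical helper `node_port` below extracts it from a PORT(S) value copied out of the service listing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the node port from a PORT(S) value such as
# 5601:31403/TCP; prints nothing when the value carries no node port.
node_port() {
  printf '%s\n' "$1" | sed -n 's#^[0-9][0-9]*:\([0-9][0-9]*\)/.*#\1#p'
}

# Usage:
#   node_port 5601:31403/TCP
# kubectl can also return the port directly:
#   kubectl -n kube-logging get svc kibana -o jsonpath='{.spec.ports[0].nodePort}'
```

The `jsonpath` form assumes the kibana Service exposes a single port, as the listing in this step suggests.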

6. Install the fluentd component

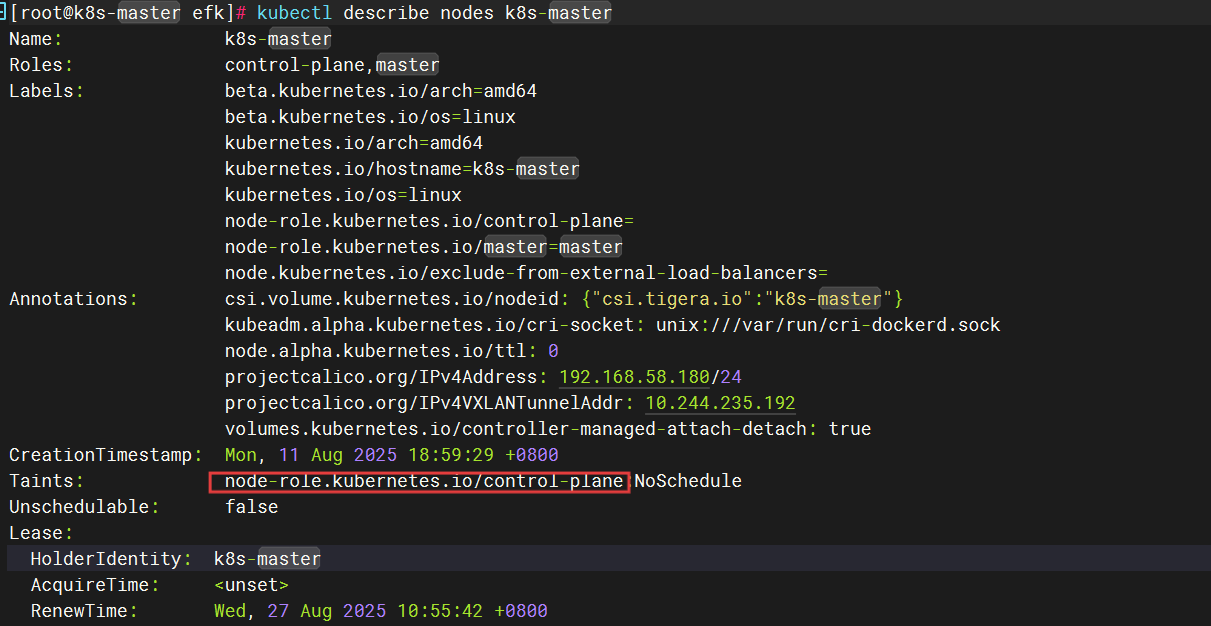

View the master node's taints and copy the taint

kubectl describe nodes k8s-master

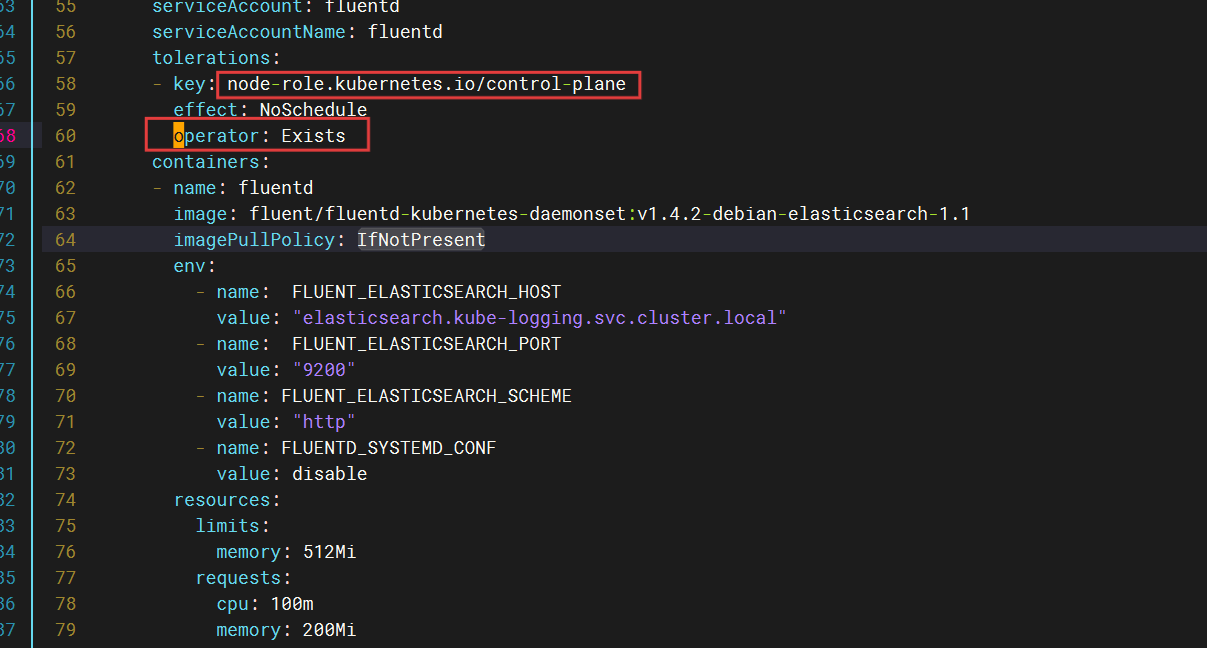

Edit the configuration file

vim fluentd.yaml

## Set key to the taint copied from the master node
## Add to the tolerations field: operator: Exists
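Copying the taint key by hand from the `describe` output is easy to get wrong. The sketch below uses a hypothetical helper, `taint_key`, that prints the key of the first taint on the `Taints:` line; it assumes the node has a single taint, since additional taints appear on continuation lines that this helper ignores.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl describe nodes <name>` output on stdin
# and prints the key of the first taint on the "Taints:" line.
# Taints are displayed as key:effect or key=value:effect.
taint_key() {
  awk '$1 == "Taints:" { split($2, t, ":"); sub(/=.*/, "", t[1]); print t[1] }'
}

# Usage:
#   kubectl describe nodes k8s-master | taint_key
```

For a node without taints the helper prints `<none>`, in which case no toleration is needed for that node.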

kubectl apply -f fluentd.yaml

[root@k8s-master efk]# kubectl -n kube-logging get po -o wide
NAME                      READY   STATUS    RESTARTS   AGE     IP               NODE         NOMINATED NODE   READINESS GATES
es-cluster-0              1/1     Running   0          40m     10.244.169.154   k8s-node2    <none>           <none>
es-cluster-1              1/1     Running   0          40m     10.244.36.90     k8s-node1    <none>           <none>
es-cluster-2              1/1     Running   0          40m     10.244.169.155   k8s-node2    <none>           <none>
fluentd-5rndr             1/1     Running   0          114s    10.244.169.157   k8s-node2    <none>           <none>
fluentd-kjc7h             1/1     Running   0          3m43s   10.244.235.197   k8s-master   <none>           <none>
fluentd-xrkr2             1/1     Running   0          2m3s    10.244.36.93     k8s-node1    <none>           <none>
kibana-7645484fc7-8gjfk   1/1     Running   0          38m     10.244.36.91     k8s-node1    <none>           <none>

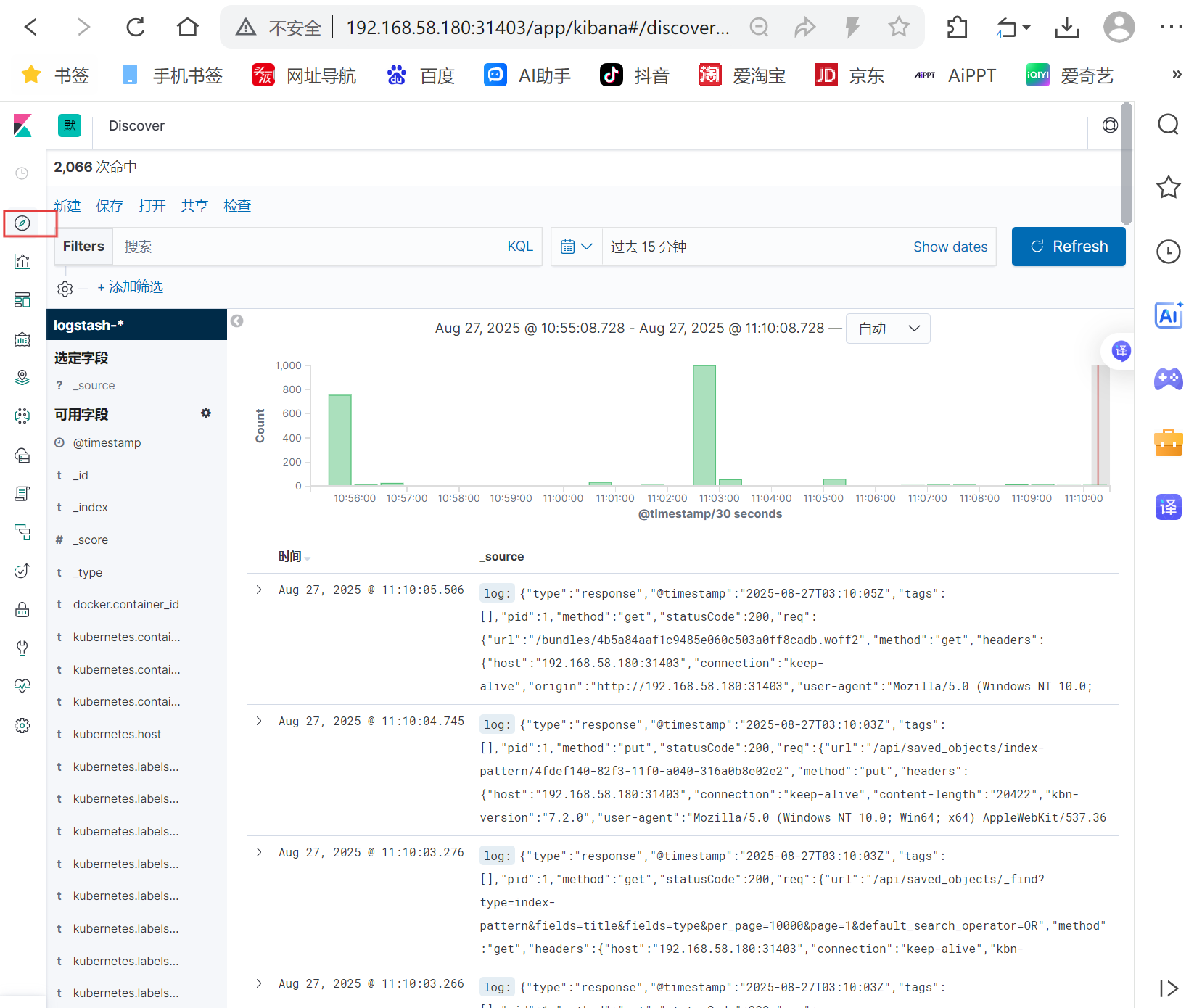

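Open in a browser: 192.168.58.180:31715 (substitute the NodePort that kubectl -n kube-logging get svc reports for kibana; the listing in step 5 shows 31403)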

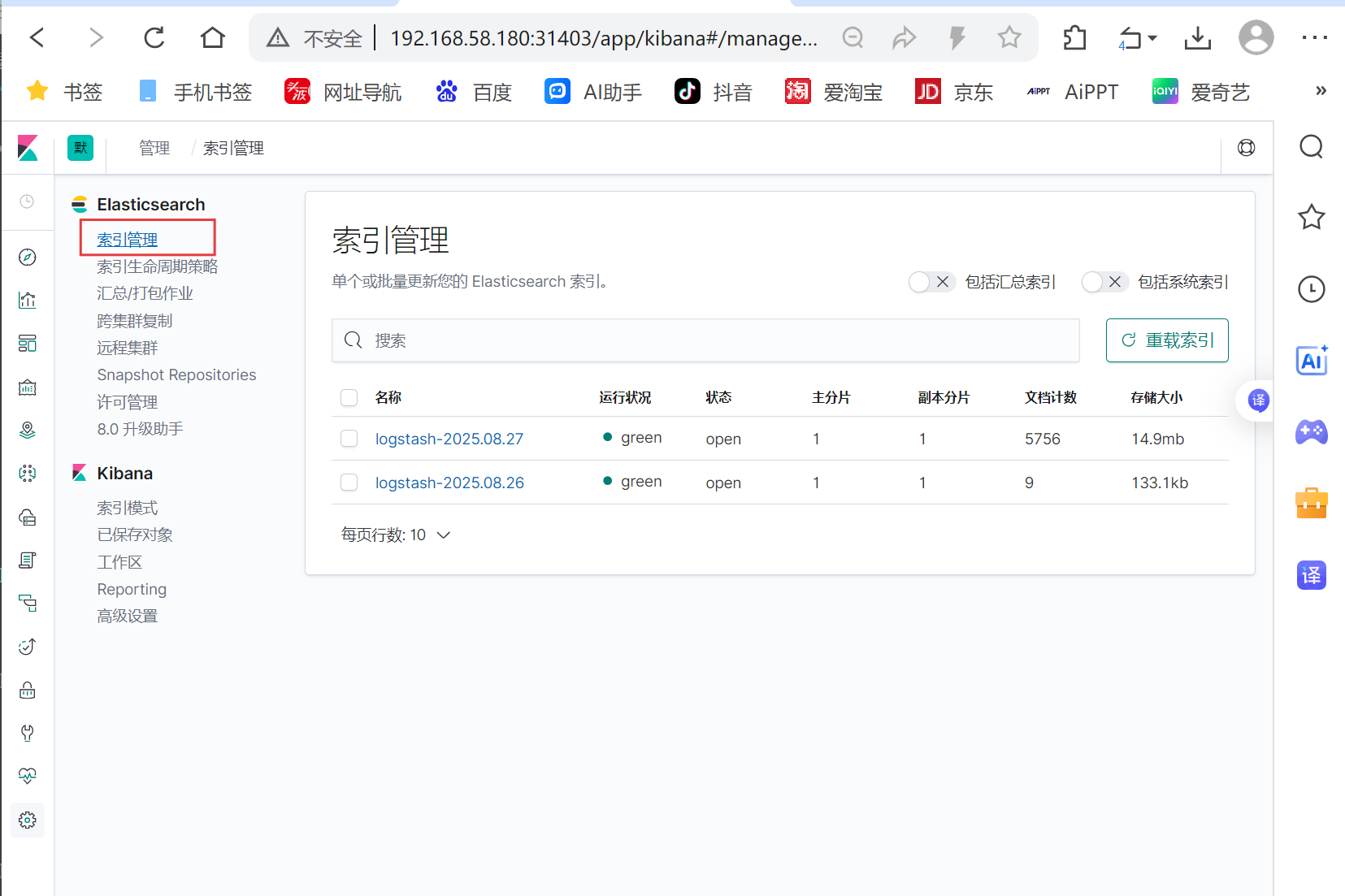

Create an index pattern

View the logs