Deploying a Prometheus + Grafana Monitoring System on Kubernetes (NFS Storage)

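Table of Contents

- Deploying a Prometheus + Grafana Monitoring System on Kubernetes (NFS Storage)
  - I. NFS Server Configuration (192.168.104.50)
    - 1. Install the NFS service
    - 2. Create the shared directory
    - 3. Configure the NFS export
    - 4. Verify the export
  - II. Configuration on All Kubernetes Nodes (master and workers)
    - 1. Install the NFS client
    - 2. Verify the NFS connection
  - III. Prepare Container Images (on a machine with Internet access)
    - 1. Pull the required images
    - 2. Save the images to archive files
    - 3. Copy the archives to all nodes
    - 4. Load the images on each node
  - IV. Deploy the NFS Provisioner
    - 1. Create the namespace
    - 2. Create the NFS provisioner manifest (nfs-provisioner.yaml)
    - 3. Create the StorageClass (nfs-storageclass.yaml)
    - 4. Apply the manifests
    - 5. Verify the deployment
  - V. Deploy the Prometheus Monitoring System
    - 1. Create the monitoring namespace
    - 2. Create the Prometheus configuration (prometheus-configmap.yaml)
    - 3. Create the main Prometheus manifest (prometheus.yaml)
    - 4. Apply the Prometheus manifests
  - VI. Deploy Node Exporter
    - 1. Create the Node Exporter DaemonSet manifest (node-exportet-daemonset.yaml)
    - 2. Create the Node Exporter Service manifest (node-exportet-svc.yaml)
    - 3. Apply the Node Exporter manifests
  - VII. Deploy Grafana
    - 1. Create the Grafana manifest (grafana.yaml)
    - 2. Apply the Grafana manifest
  - VIII. Verify the Deployment
    - 1. Check the status of all components
    - 2. Access the web UIs
    - 3. Configure Grafana
  - IX. Troubleshooting
    - Issue 1: PVC stuck in Pending
    - Issue 2: Image pull failures
    - Issue 3: NFS connectivity problems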


I. NFS Server Configuration (192.168.104.50)

1. Install the NFS service

yum install -y nfs-utils rpcbind

systemctl enable --now rpcbind

systemctl enable --now nfs-server

2. Create the shared directory

mkdir -p /data/k8s_data

chmod 777 /data/k8s_data

3. Configure the NFS export

echo "/data/k8s_data *(rw,sync,no_root_squash,no_subtree_check)" > /etc/exports

exportfs -arv
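The `echo ... >` command above replaces the whole of /etc/exports, which is fine on a fresh server but drops any existing exports. A minimal sketch of a re-runnable alternative appends the entry only when it is missing. The helper name `ensure_export` is hypothetical (it is not part of nfs-utils), and the file path is a parameter so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# ensure_export FILE LINE
# Hypothetical helper (not part of nfs-utils): appends LINE to FILE only if an
# identical line is not already present, so the step can be re-run safely.
ensure_export() {
  local file=$1 line=$2
  touch "$file" || return 1
  # If the file does not end with a newline, add one before appending.
  if [ -s "$file" ] && [ -n "$(tail -c1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -x: whole-line match; -F: treat '*' and '(' literally rather than as a regex.
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For example: `ensure_export /etc/exports '/data/k8s_data *(rw,sync,no_root_squash,no_subtree_check)' && exportfs -arv`.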

4. Verify the export

showmount -e localhost

# Expected output:

# Export list for localhost:

# /data/k8s_data *
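To make this check scriptable, a small helper can scan `showmount -e` output for a given export path. This is a sketch under the assumption that the output looks like the sample above: one header line, then one `path clients` entry per line. The name `has_export` is hypothetical.

```shell
#!/usr/bin/env bash
# has_export PATH   (reads `showmount -e` output on stdin)
# Hypothetical helper: returns 0 if PATH is listed as an exported directory, 1 otherwise.
has_export() {
  # Skip the "Export list for ..." header, compare the first column exactly.
  awk -v want="$1" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}
```

For example: `showmount -e 192.168.104.50 | has_export /data/k8s_data && echo "export OK"`.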

II. Configuration on All Kubernetes Nodes (master and workers)

1. Install the NFS client

yum install -y nfs-utils

2. Verify the NFS connection

mkdir /mnt/test

mount -t nfs 192.168.104.50:/data/k8s_data /mnt/test

touch /mnt/test/testfile

ls /mnt/test

umount /mnt/test
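The test above leaves `testfile` behind on the share. A slightly tidier sketch writes a uniquely named probe file and removes it again, so the check can be repeated on every node without clutter. The helper name `check_writable` is hypothetical.

```shell
#!/usr/bin/env bash
# check_writable DIR
# Hypothetical helper: creates a uniquely named probe file in DIR, then deletes it.
# Returns non-zero if the file cannot be created (e.g. read-only export or wrong permissions).
check_writable() {
  local probe="$1/.write-test-${HOSTNAME:-node}-$$"
  touch "$probe" || return 1
  rm -f -- "$probe"
}
```

For example: `mount -t nfs 192.168.104.50:/data/k8s_data /mnt/test && check_writable /mnt/test; umount /mnt/test`.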

III. Prepare Container Images (on a machine with Internet access)

1. Pull the required images

docker pull prom/prometheus:latest

docker pull grafana/grafana:latest

docker pull prom/node-exporter:latest

docker pull registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

docker pull busybox:latest

# kube-state-metrics v2.x images are published on registry.k8s.io; this guide does not include a manifest for it

docker pull registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.9.2

2. Save the images to archive files

docker save -o prometheus.tar prom/prometheus:latest

docker save -o grafana.tar grafana/grafana:latest

docker save -o node-exporter.tar prom/node-exporter:latest

docker save -o nfs-provisioner.tar registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

docker save -o busybox.tar busybox:latest

docker save -o kube-state-metrics.tar registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.9.2
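The pull and save steps repeat the same pattern for every image, so they can be folded into a loop. In this sketch, `tar_name` and `save_images` are hypothetical helpers. The archive names they derive from the image references differ slightly from the names used above: for example, `nfs-subdir-external-provisioner.tar` instead of `nfs-provisioner.tar`.

```shell
#!/usr/bin/env bash
# tar_name IMAGE_REF
# Hypothetical helper: archive name from the last path segment, with any tag (:v1)
# or digest (@sha256:...) removed.
tar_name() {
  local name=${1##*/}      # drop registry and repository path
  name=${name%%[@:]*}      # drop tag or digest
  printf '%s.tar\n' "$name"
}

# save_images IMAGE_REF...
# Hypothetical helper: pulls each image and saves it to its own archive.
save_images() {
  local img
  for img in "$@"; do
    docker pull "$img" || return 1
    docker save -o "$(tar_name "$img")" "$img" || return 1
  done
}
```

For example: `save_images prom/prometheus:latest grafana/grafana:latest prom/node-exporter:latest registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 busybox:latest`.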

3. Copy the image archives to all nodes

scp *.tar root@192.168.104.51:/root

scp *.tar root@192.168.104.52:/root

scp *.tar root@192.168.104.53:/root

4. Load the images on each node (run in /root on every node)

docker load -i prometheus.tar

docker load -i grafana.tar

docker load -i node-exporter.tar

docker load -i nfs-provisioner.tar

docker load -i busybox.tar

docker load -i kube-state-metrics.tar
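Copying and loading can also be driven from the machine that holds the archives. This sketch assumes passwordless SSH as root to each node and that Docker is the container runtime the kubelet uses. On containerd-based nodes, `docker load` does not make the image visible to Kubernetes, and something like `ctr -n k8s.io images import` would be needed instead. `push_images`, `run`, and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# run CMD...
# Hypothetical helper: executes CMD, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# push_images NODE TARFILE...
# Hypothetical helper: copies the archives to NODE:/root, then runs `docker load` for each one there.
push_images() {
  local node=$1 tar
  shift
  [ $# -ge 1 ] || return 2
  run scp "$@" "root@${node}:/root/" || return 1
  for tar in "$@"; do
    run ssh "root@${node}" docker load -i "/root/${tar##*/}" || return 1
  done
}
```

For example: `for node in 192.168.104.51 192.168.104.52 192.168.104.53; do push_images "$node" *.tar; done`. Run it once with `DRY_RUN=1` first to review the generated commands.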

IV. Deploy the NFS Provisioner

1. Create the namespace

kubectl create namespace nfs-storageclass

2. Create the NFS provisioner manifest (nfs-provisioner.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  namespace: nfs-storageclass
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      nodeSelector:
        kubernetes.io/hostname: node1   # pin the provisioner to node1 (adjust to your node name)
      containers:
        - name: nfs-client-provisioner
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.104.50
            - name: NFS_PATH
              value: /data/k8s_data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.104.50
            path: /data/k8s_data
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "patch", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: nfs-storageclass
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
# Leader-election lock used by the provisioner (the upstream rbac.yaml ships this Role)
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: nfs-storageclass
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: nfs-storageclass
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: nfs-storageclass
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: nfs-storageclass

3. Create the StorageClass (nfs-storageclass.yaml)

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "false"

4. Apply the manifests

kubectl apply -f nfs-provisioner.yaml

kubectl apply -f nfs-storageclass.yaml

5. Verify the deployment

kubectl get pods -n nfs-storageclass

# The nfs-client-provisioner pod should be Running

kubectl get storageclass

# nfs-client should be marked as (default)
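Before deploying Prometheus, you can confirm that dynamic provisioning actually works by creating a throwaway PVC against `nfs-client`. The sketch below only prints the manifest. The helper name `test_pvc_manifest`, the claim name, and the 1Mi default size are assumptions for illustration.

```shell
#!/usr/bin/env bash
# test_pvc_manifest NAME [NAMESPACE] [SIZE]
# Hypothetical helper: prints a PVC that requests storage from the nfs-client
# StorageClass, used only to check dynamic provisioning.
test_pvc_manifest() {
  if [ -z "$1" ]; then
    echo "usage: test_pvc_manifest NAME [NAMESPACE] [SIZE]" >&2
    return 2
  fi
  local name=$1 ns=${2:-default} size=${3:-1Mi}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-client
  resources:
    requests:
      storage: ${size}
EOF
}
```

For example, run `test_pvc_manifest nfs-test-pvc | kubectl apply -f -` and then `kubectl get pvc nfs-test-pvc`. The claim should become Bound, and a matching subdirectory should appear under /data/k8s_data on the NFS server. Remove it afterwards with `kubectl delete pvc nfs-test-pvc`.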

V. Deploy the Prometheus Monitoring System

1. Create the monitoring namespace

kubectl create namespace monitor

2. Create the Prometheus configuration (prometheus-configmap.yaml)

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitor
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
      - job_name: 'prometheus'
        kubernetes_sd_configs:
          - role: endpoints
            namespaces:
              names: [monitor]
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_name]
            regex: prometheus-svc
            action: keep
          - source_labels: [__meta_kubernetes_endpoint_port_name]
            regex: web
            action: keep
      - job_name: 'coredns'
        kubernetes_sd_configs:
          - role: endpoints
            namespaces:
              names: [kube-system]
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_name]
            regex: kube-dns
            action: keep
          - source_labels: [__meta_kubernetes_endpoint_port_name]
            regex: metrics
            action: keep
      - job_name: 'kube-apiserver'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: false
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: endpoints
            namespaces:
              names: [default, kube-system]
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_name]
            regex: kubernetes
            action: keep
          - source_labels: [__meta_kubernetes_endpoint_port_name]
            regex: https
            action: keep
      - job_name: 'node-exporter'
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
      - job_name: 'cadvisor'
        kubernetes_sd_configs:
          - role: node
        scheme: https
        tls_config:
          insecure_skip_verify: true
          ca_file: '/var/run/secrets/kubernetes.io/serviceaccount/ca.crt'
        bearer_token_file: '/var/run/secrets/kubernetes.io/serviceaccount/token'
        relabel_configs:
          - target_label: __metrics_path__
            replacement: /metrics/cadvisor
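The `node-exporter` job reuses node discovery. Node discovery yields targets on the kubelet port 10250, and the relabel rule rewrites that port to 9100. The sed sketch below reproduces the rewrite for illustration only. Prometheus itself applies the rule with anchored RE2 matching, and `relabel_port` is a hypothetical name.

```shell
#!/usr/bin/env bash
# relabel_port ADDRESS
# Hypothetical illustration of: regex '(.*):10250', replacement '${1}:9100' on __address__.
# Prometheus anchors the regex at both ends; ^...$ does the same here.
# A non-matching address is left unchanged, as with the replace action.
relabel_port() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}
```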

3. Create the main Prometheus manifest (prometheus.yaml)

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources: ["nodes", "services", "endpoints", "pods", "nodes/proxy"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["configmaps", "nodes/metrics"]
    verbs: ["get"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: monitor
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus-svc
  namespace: monitor
  labels:
    app: prometheus
  annotations:
    prometheus_io_scrape: "true"
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
    - name: web
      nodePort: 32224
      port: 9090
      targetPort: http
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-pvc
  namespace: monitor
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs-client
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitor
  labels:
    app: prometheus
spec:
  selector:
    matchLabels:
      app: prometheus
  replicas: 1
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      initContainers:
        - name: "change-permission-of-directory"
          image: busybox:latest
          imagePullPolicy: IfNotPresent   # busybox:latest would otherwise default to Always and fail offline
          command: ["/bin/sh", "-c"]
          args:
            - chown -R 65534:65534 /prometheus
          securityContext:
            privileged: true
          volumeMounts:
            - mountPath: "/etc/prometheus"
              name: config-volume
            - mountPath: "/prometheus"
              name: data
      containers:
        - name: prometheus
          image: prom/prometheus:latest
          imagePullPolicy: IfNotPresent
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--web.enable-lifecycle"
            - "--web.console.libraries=/usr/share/prometheus/console_libraries"
            - "--web.console.templates=/usr/share/prometheus/consoles"
          ports:
            - name: http
              containerPort: 9090
          volumeMounts:
            - mountPath: "/etc/prometheus"
              name: config-volume
            - mountPath: "/prometheus"
              name: data
          resources:
            requests:
              cpu: 100m
              memory: 512Mi
            limits:
              cpu: 100m
              memory: 512Mi
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus-pvc
        - name: config-volume
          configMap:
            name: prometheus-config

4. Apply the Prometheus manifests

kubectl apply -f prometheus-configmap.yaml

kubectl apply -f prometheus.yaml

VI. Deploy Node Exporter

1. Create the Node Exporter DaemonSet manifest (node-exportet-daemonset.yaml)

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitor
  labels:
    app: node-exporter
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: node-exporter
          image: prom/node-exporter:latest
          imagePullPolicy: IfNotPresent
          args:
            - --web.listen-address=$(HOSTIP):9100
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)
            - --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$
          env:
            - name: HOSTIP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
          ports:
            - containerPort: 9100
          resources:
            requests:
              cpu: 150m
              memory: 180Mi
            limits:
              cpu: 150m
              memory: 180Mi
          securityContext:
            runAsNonRoot: true
            runAsUser: 65534
          volumeMounts:
            - name: proc
              mountPath: /host/proc
            - name: sys
              mountPath: /host/sys
            - name: root
              mountPath: /host/root
              mountPropagation: HostToContainer
              readOnly: true
      tolerations:
        - operator: "Exists"
      volumes:
        - name: proc
          hostPath:
            path: /proc
        - name: dev
          hostPath:
            path: /dev
        - name: sys
          hostPath:
            path: /sys
        - name: root
          hostPath:
            path: /

2. Create the Node Exporter Service manifest (node-exportet-svc.yaml)

apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: monitor
  labels:
    app: node-exporter
spec:
  selector:
    app: node-exporter
  ports:
    - name: metrics
      port: 9100
      targetPort: 9100
  clusterIP: None

3. Apply the Node Exporter manifests

kubectl apply -f node-exportet-daemonset.yaml

kubectl apply -f node-exportet-svc.yaml
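The DaemonSet uses `hostNetwork` and binds to `$(HOSTIP):9100`, so the exporter answers on each node's IP rather than on localhost. A minimal check fetches `/metrics` and looks for a known metric name. The helper name `has_metric` is hypothetical, and `node_load1` is only an example metric.

```shell
#!/usr/bin/env bash
# has_metric NAME   (reads Prometheus text exposition format on stdin)
# Hypothetical helper: succeeds if a sample line starts with NAME, with or without labels.
has_metric() {
  awk -v m="$1" '
    /^#/ { next }   # skip HELP/TYPE comment lines
    index($0, m " ") == 1 || index($0, m "{") == 1 { found = 1 }
    END { exit !found }
  '
}
```

For example: `curl -fsS http://192.168.104.51:9100/metrics | has_metric node_load1 && echo "node-exporter OK"`.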

VII. Deploy Grafana

1. Create the Grafana manifest (grafana.yaml)

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: monitor
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs-client
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-server
  namespace: monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
        - name: grafana
          image: grafana/grafana:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3000
              protocol: TCP
          volumeMounts:
            - mountPath: /var/lib/grafana/
              name: grafana-data
          env:
            - name: GF_SERVER_HTTP_PORT
              value: "3000"
            - name: GF_AUTH_BASIC_ENABLED
              value: "false"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ORG_ROLE
              value: Admin
            - name: GF_SERVER_ROOT_URL
              value: /
      volumes:
        - name: grafana-data
          persistentVolumeClaim:
            claimName: grafana-pvc
---
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: grafana-svc
  namespace: monitor
spec:
  ports:
    - port: 80
      targetPort: 3000
      nodePort: 31091
  selector:
    k8s-app: grafana
  type: NodePort

2. Apply the Grafana manifest

kubectl apply -f grafana.yaml

VIII. Verify the Deployment

1. Check the status of all components

kubectl get pods -n monitor

# Expected output, similar to the following (the kube-state-metrics pod appears only if it was deployed separately; this guide does not include its manifest):

# NAME                                  READY   STATUS    RESTARTS   AGE
# grafana-server-7868b7cc7c-k8lrd       1/1     Running   0          5m
# kube-state-metrics-74c47f9485-h8787   1/1     Running   0          6m
# node-exporter-6h79q                   1/1     Running   0          5m
# node-exporter-9qkbs                   1/1     Running   0          5m
# node-exporter-t64xb                   1/1     Running   0          5m
# prometheus-5696fb478b-4wf4j           1/1     Running   0          6m

kubectl get pvc -n monitor

# All PVCs should be Bound

kubectl get svc -n monitor

# The Prometheus and Grafana services should be listed

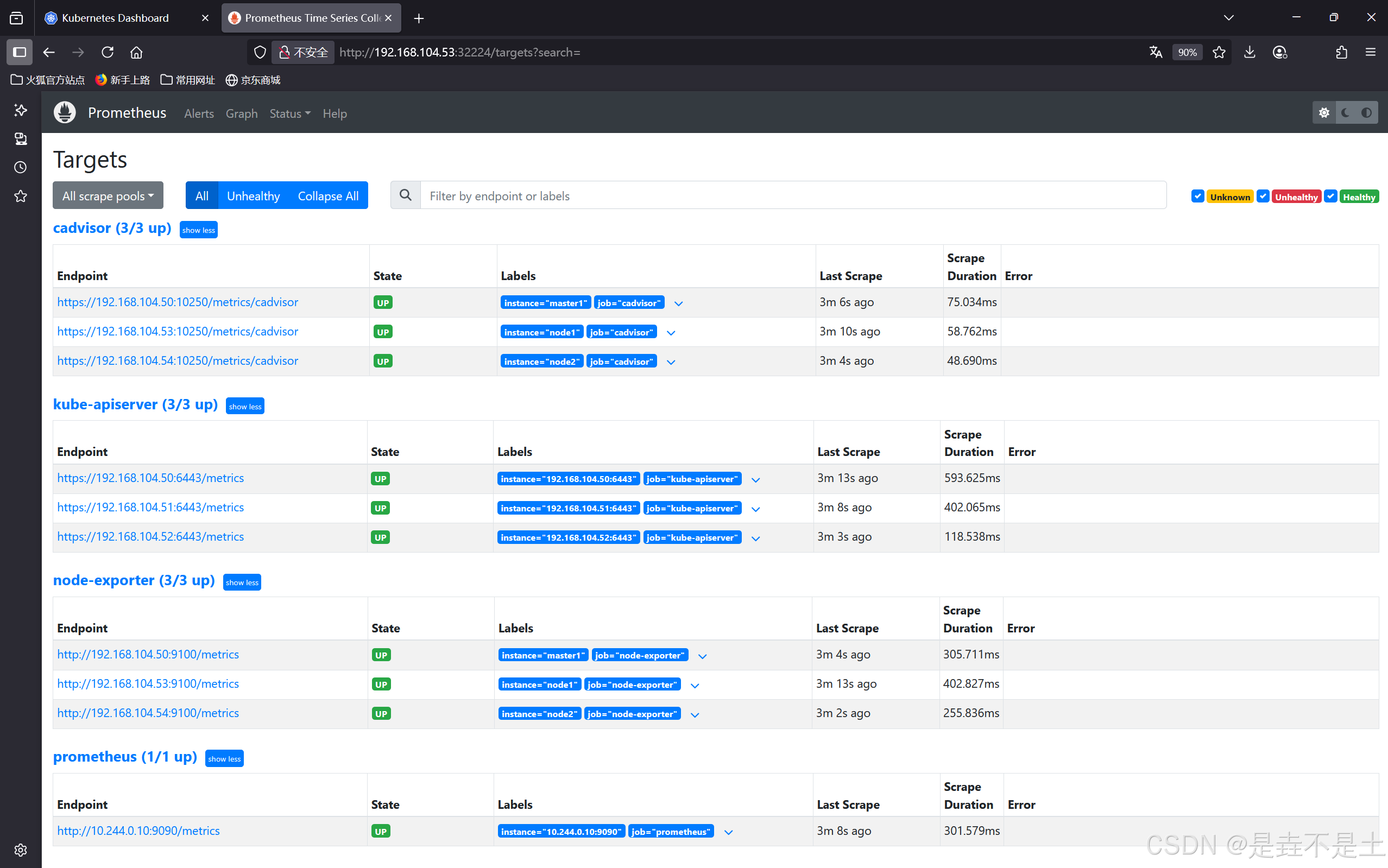
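To spot problems without reading the whole table, you can filter `kubectl get pods` output for pods that are not fully ready or not Running. This sketch assumes the default column layout shown above (NAME, READY, STATUS, ...), and the helper name `not_ready_pods` is hypothetical.

```shell
#!/usr/bin/env bash
# not_ready_pods   (reads `kubectl get pods` output on stdin)
# Hypothetical helper: prints pods whose READY count is incomplete or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")                           # READY column, e.g. "0/1"
    if (r[1] != r[2] || $3 != "Running") print $1
  }'
}
```

For example: `kubectl get pods -n monitor | not_ready_pods`. Empty output means every pod is up.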

2. 访问监控界面

-

Prometheus:

http://<任意节点IP>:32224

-

Grafana:

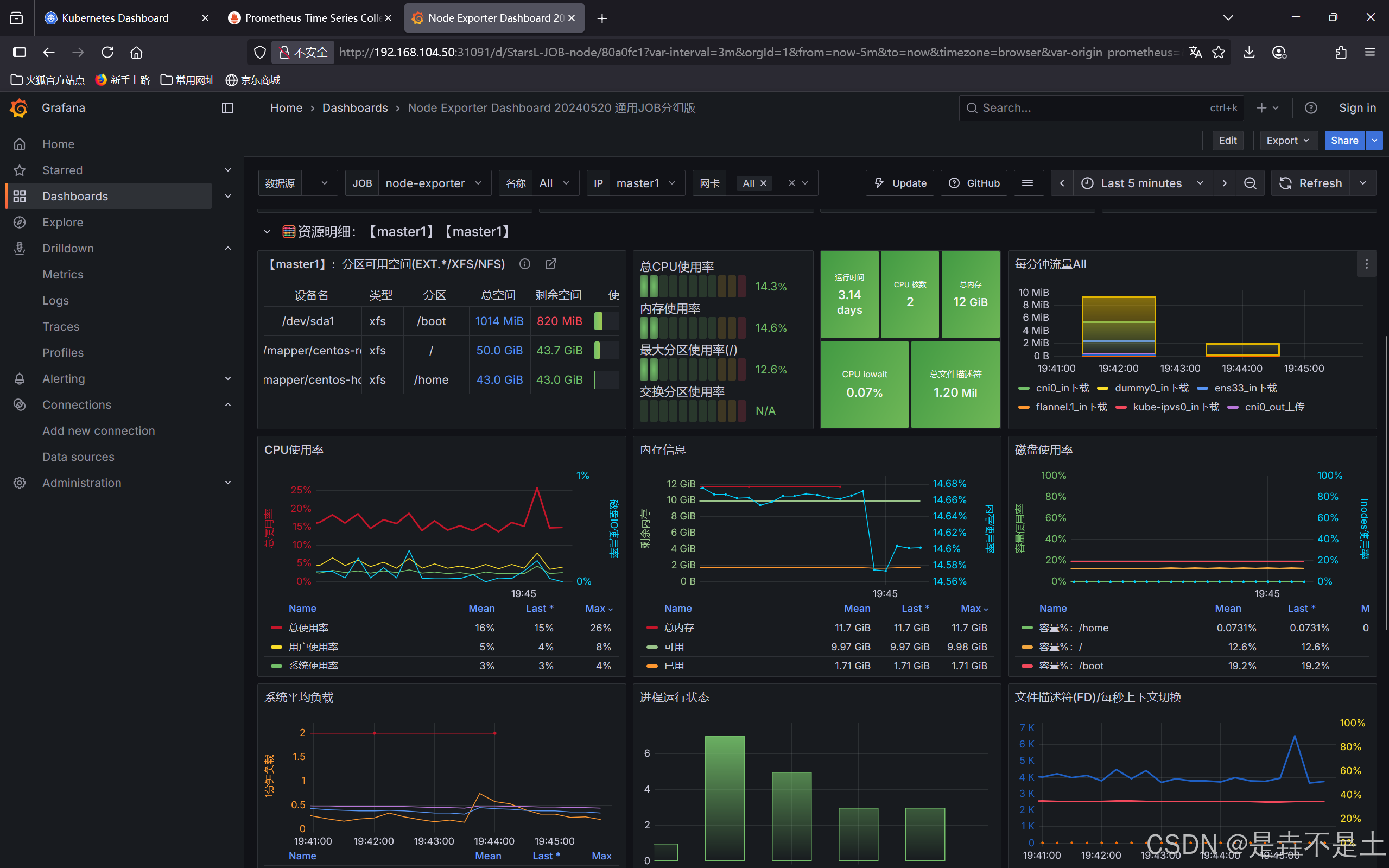

http://<任意节点IP>:31091

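3. Configure Grafana

- Log in to Grafana (default username/password: admin/admin). Because this manifest enables anonymous access with the Admin role, the UI may open directly without a login prompt.

- Add a data source:

  - Type: Prometheus

  - URL: http://prometheus-svc.monitor.svc.cluster.local:9090

- Import dashboards:

  - Node monitoring: ID 16098

  - Kubernetes cluster monitoring: ID 14249

  - Importing by ID requires Grafana to reach grafana.com. In an offline cluster, download the dashboard JSON on a machine with Internet access and upload it on the import page instead.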
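The data source can also be created through Grafana's HTTP API (`POST /api/datasources`) instead of the UI. The sketch below only builds the request body. The helper name `datasource_json` and the data-source name `Prometheus` are assumptions. Because this guide's manifest sets `GF_AUTH_BASIC_ENABLED=false`, the API rejects basic auth such as `-u admin:admin`. The unauthenticated request in the usage note relies on the anonymous Admin role enabled in the same manifest, so verify that it works in your setup.

```shell
#!/usr/bin/env bash
# datasource_json [NAME] [URL]
# Hypothetical helper: prints a JSON body for Grafana's POST /api/datasources.
# Assumes NAME and URL contain no double quotes (no JSON escaping is done).
datasource_json() {
  local name=${1:-Prometheus}
  local url=${2:-http://prometheus-svc.monitor.svc.cluster.local:9090}
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy","isDefault":true}\n' \
    "$name" "$url"
}
```

For example: `datasource_json | curl -fsS -X POST -H 'Content-Type: application/json' --data @- http://<any-node-IP>:31091/api/datasources`.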

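IX. Troubleshooting

Issue 1: PVC stuck in Pending

Cause: the NFS provisioner did not create a PV automatically. Check `kubectl describe pvc -n monitor` and the provisioner logs (`kubectl logs -n nfs-storageclass deploy/nfs-client-provisioner`) for the actual error.

Workaround: create the PVs manually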

# On the NFS server, create the directories

ssh 192.168.104.50

mkdir -p /data/k8s_data/{prometheus,grafana}

chmod 777 /data/k8s_data/{prometheus,grafana}

exit

# Create the PVs manually

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-pv
spec:
  capacity:
    storage: 2Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-client
  nfs:
    path: /data/k8s_data/prometheus
    server: 192.168.104.50
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-pv
spec:
  capacity:
    storage: 2Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-client
  nfs:
    path: /data/k8s_data/grafana
    server: 192.168.104.50
EOF

# Delete the old pods so they are recreated

kubectl delete pod -n monitor --all
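The two PV definitions above differ only in name and path. Since both satisfy either PVC, Kubernetes may bind `prometheus-pvc` to `grafana-pv`. A generator sketch can pre-bind each PV to its intended claim through `spec.claimRef`. The helper name `nfs_pv_manifest` is hypothetical, and the 2Gi size and Retain policy mirror the manifests above.

```shell
#!/usr/bin/env bash
# nfs_pv_manifest PV_NAME NFS_PATH CLAIM_NAMESPACE CLAIM_NAME
# Hypothetical helper: prints an NFS PV on 192.168.104.50 that only the named PVC can bind.
nfs_pv_manifest() {
  if [ $# -ne 4 ]; then
    echo "usage: nfs_pv_manifest PV_NAME NFS_PATH CLAIM_NAMESPACE CLAIM_NAME" >&2
    return 2
  fi
  local pv=$1 path=$2 ns=$3 claim=$4
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${pv}
spec:
  capacity:
    storage: 2Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs-client
  claimRef:
    namespace: ${ns}
    name: ${claim}
  nfs:
    server: 192.168.104.50
    path: ${path}
EOF
}
```

For example: `{ nfs_pv_manifest prometheus-pv /data/k8s_data/prometheus monitor prometheus-pvc; echo ---; nfs_pv_manifest grafana-pv /data/k8s_data/grafana monitor grafana-pvc; } | kubectl apply -f -`.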

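Issue 2: Image pull failures

Solution:

- Load the images locally from the archives prepared in section III.

- Set imagePullPolicy: IfNotPresent in every manifest, including init containers such as busybox. Images tagged :latest default to Always, so without this setting the kubelet tries to contact the registry even when the image is already present.

- Make sure every node has loaded the required images.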
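To see which images a node is still missing, you can compare the required references against the node's local list. This sketch reads `repository:tag` lines on stdin, such as those printed by `docker images --format '{{.Repository}}:{{.Tag}}'`. On containerd nodes the list would have to come from `crictl images` instead, which uses a different format. The helper name `missing_images` is hypothetical.

```shell
#!/usr/bin/env bash
# missing_images IMAGE_REF...   (reads available "repo:tag" lines on stdin)
# Hypothetical helper: prints every required reference not found in the available list.
missing_images() {
  local available img
  available=$(cat)
  for img in "$@"; do
    # -x: whole-line match; -F: fixed string, so '.' and ':' are literal.
    if ! printf '%s\n' "$available" | grep -qxF -- "$img"; then
      printf '%s\n' "$img"
    fi
  done
}
```

For example: `docker images --format '{{.Repository}}:{{.Tag}}' | missing_images prom/prometheus:latest grafana/grafana:latest prom/node-exporter:latest busybox:latest`.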

Issue 3: NFS connectivity problems

Verification steps:

# Test from a Kubernetes node

mkdir -p /mnt/test

mount -t nfs 192.168.104.50:/data/k8s_data /mnt/test

touch /mnt/test/testfile

ls /mnt/test

umount /mnt/test

Fixes:

- Make sure the NFS server's firewall allows port 2049.

- Check the /etc/exports configuration on the NFS server.

- Re-export the shares on the NFS server:

exportfs -arv
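If the mount hangs or times out, a quick TCP probe of port 2049 from the Kubernetes node can separate firewall problems from export problems. The sketch relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command, and `port_open` is a hypothetical name. For `showmount`, rpcbind (port 111) and mountd must be reachable as well. On firewalld-based servers, the `nfs`, `rpc-bind`, and `mountd` services are the usual ones to open.

```shell
#!/usr/bin/env bash
# port_open HOST PORT [SECONDS]
# Hypothetical helper: returns 0 if a TCP connection to HOST:PORT succeeds within
# the timeout (default 3 seconds), non-zero otherwise.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # The inner bash gets HOST as $1 and PORT as $2; "_" fills $0.
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example: `port_open 192.168.104.50 2049 && echo "2049 reachable" || echo "2049 blocked"`.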