k8s: Pushing a packaged Kubernetes cluster image bundle to a private Harbor registry

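This post describes how to load Kubernetes images into a Harbor registry in an offline environment. First, a script creates the required Harbor projects. Then KubeKey pushes the pre-packaged Kubernetes images (kubesphere.tar.gz) to Harbor. Next, containerd is configured to pull from the private registry, including the TLS certificates and the registry endpoints. Finally, the pause image pull problem that appears while installing Kubernetes 1.26.12 is resolved by tagging a local image with the name that would otherwise be fetched from registry.k8s.io. The key steps are Harbor project creation, image push, containerd configuration, and image retagging.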

For the offline deployment of Harbor itself, see my other post, "k8s: Offline deployment of Harbor v2.13.1 with docker compose, using external PostgreSQL and Redis".

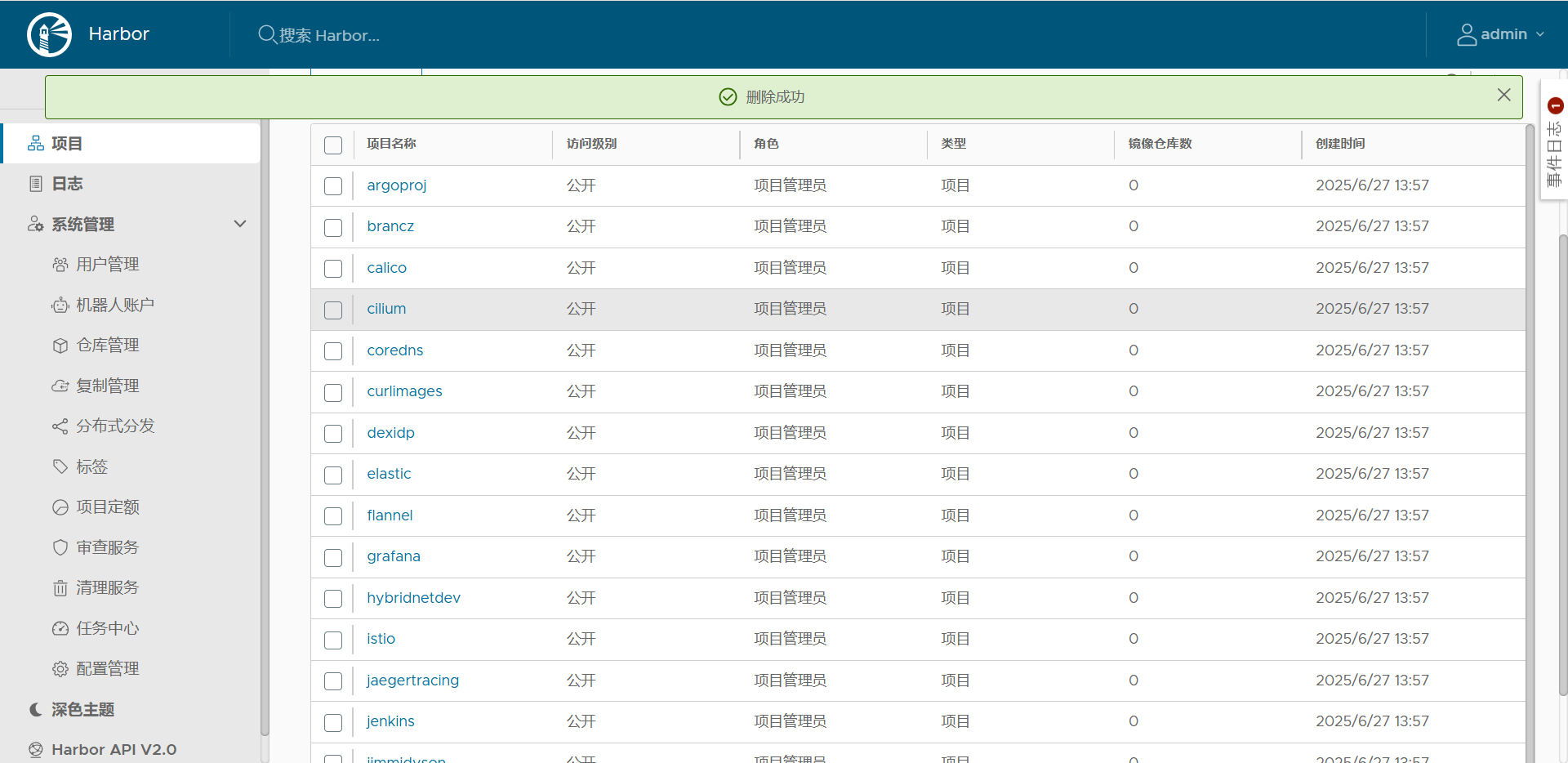

1. Create the Harbor projects

cd /app/KubeSphere/setup

vi create_project_harbor.sh

#!/usr/bin/env bash

# Copyright 2018 The KubeSphere Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

url="https://dockerhub.kubekey.local" # or change this to your actual registry address

user="admin"

passwd="Harbor12345"

harbor_projects=(

ks

kubesphere

kubesphereio

coredns

calico

flannel

cilium

hybridnetdev

kubeovn

openebs

library

plndr

jenkins

argoproj

dexidp

openpolicyagent

curlimages

grafana

kubeedge

nginxinc

prom

kiwigrid

minio

opensearchproject

istio

jaegertracing

timberio

prometheus-operator

jimmidyson

elastic

thanosio

brancz

prometheus

)

for project in "${harbor_projects[@]}"; do

echo "creating $project"

curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k # note the trailing -k: it makes curl skip TLS certificate verification

done

chmod +x create_project_harbor.sh

./create_project_harbor.sh
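The loop above prints whatever Harbor returns, which makes it hard to tell a freshly created project from one that already existed. The following is a minimal sketch, not part of the original script, that reports the outcome per project from the HTTP status code. The function names `classify_status` and `create_project` and the `HARBOR_*` environment variables are hypothetical; the mapping assumes Harbor answers `POST /api/v2.0/projects` with 201 on creation and 409 when the project name is already taken.

```shell
#!/usr/bin/env bash
# Sketch: create one Harbor project and report the outcome by HTTP status.
# The defaults mirror the values used in create_project_harbor.sh.
url="${HARBOR_URL:-https://dockerhub.kubekey.local}"
user="${HARBOR_USER:-admin}"
passwd="${HARBOR_PASSWD:-Harbor12345}"

# Map the status code of POST /api/v2.0/projects to a short label.
classify_status() {
  case "$1" in
    201) echo "created" ;;
    409) echo "exists" ;;
    401|403) echo "auth-failed" ;;
    *) echo "error" ;;
  esac
}

# Create a single public project and print "<project>: <label>".
create_project() {
  local project="$1" code
  code=$(curl -k -s -o /dev/null -w '%{http_code}' \
    -u "${user}:${passwd}" -X POST \
    -H "Content-Type: application/json" \
    -d "{\"project_name\": \"${project}\", \"public\": true}" \
    "${url}/api/v2.0/projects")
  echo "${project}: $(classify_status "$code")"
}

# Example (needs a reachable Harbor):
#   create_project kubesphereio
```

With this variant the script can be rerun safely: projects that already exist are reported as "exists" instead of producing an error body.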

2. Push the images to the Harbor registry (this takes a while)

cd /app/KubeSphere/setup

./kk artifact image push -f config-sample.yaml -a /app/KubeSphere/setup/kubesphere.tar.gz

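./kk: KubeKey, a tool for deploying Kubernetes and KubeSphere clusters.

artifact image push: the KubeKey subcommand that pushes pre-packaged Kubernetes or KubeSphere images to the specified registry.

-f: path to the configuration file.

config-sample.yaml: the cluster configuration file. It contains the information needed to connect to the private registry (credentials, registry address, and so on) along with the other cluster settings.

-a: path to the local image archive.

/app/KubeSphere/setup/kubesphere.tar.gz: the generated archive containing all required images. It normally holds every container image needed to set up Kubernetes or KubeSphere.

Note: for how kubesphere.tar.gz is built, see chapter 2 of "K8s: Offline deployment of Kubernetes 1.26.12 with an external Harbor".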
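Because the push runs for a long time, it can be worth checking its two inputs first. The sketch below is an illustration only: `preflight_push` is a hypothetical helper, and it assumes the artifact is an ordinary gzip-compressed tar file, as its file name suggests. Listing a multi-gigabyte archive takes a while, but far less than a push that fails midway.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the inputs of `kk artifact image push` before starting.

# Succeeds only if the config file is non-empty and the archive is a readable tar.gz.
preflight_push() {
  local config="$1" archive="$2"
  if [ ! -s "$config" ]; then
    echo "missing or empty config: $config" >&2
    return 1
  fi
  if [ ! -f "$archive" ]; then
    echo "missing archive: $archive" >&2
    return 1
  fi
  # tar -tzf lists the archive; a truncated or corrupt file makes it fail.
  if ! tar -tzf "$archive" >/dev/null 2>&1; then
    echo "archive is not a valid tar.gz: $archive" >&2
    return 1
  fi
  echo "ok"
}

# Example:
#   preflight_push config-sample.yaml /app/KubeSphere/setup/kubesphere.tar.gz \
#     && ./kk artifact image push -f config-sample.yaml -a /app/KubeSphere/setup/kubesphere.tar.gz
```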

3. Edit the containerd configuration file (usually /etc/containerd/config.toml)

To make sure crictl can pull images from Harbor, configure containerd as follows:

vi /etc/containerd/config.toml

disabled_plugins = []

[plugins."io.containerd.grpc.v1.cri"]

enable_selinux = false

selinux_category_range = 1024

disable_seccomp = true

sandbox_image = "172.23.123.117:8443/kubesphereio/pause:3.9"

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

# TLS material for the Harbor registry; containerd reads these keys from the .tls sub-table

[plugins."io.containerd.grpc.v1.cri".registry.configs."172.23.123.117:8443".tls]

cert_file = "/etc/containerd/certs.d/172.23.123.117:8443/172.23.123.117.cert"

key_file = "/etc/containerd/certs.d/172.23.123.117:8443/172.23.123.117.key"

ca_file = "/etc/containerd/certs.d/172.23.123.117:8443/ca.crt"

insecure_skip_verify = false

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry.k8s.io"]

endpoint = ["https://172.23.123.117:8443"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://172.23.123.117:8443"]
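A wrong certificate path in this file usually only shows up later as a failed image pull. As a rough sketch (the helper name `check_tls_files` is hypothetical), the following function extracts every `ca_file`, `cert_file`, and `key_file` path from a containerd config and reports the ones that do not exist on disk. It only checks for presence; it does not validate the certificates themselves.

```shell
#!/usr/bin/env bash
# Sketch: report ca_file/cert_file/key_file paths in a containerd config
# that do not exist on disk. Returns non-zero if any file is missing.
check_tls_files() {
  sed -nE 's/^[[:space:]]*(ca|cert|key)_file[[:space:]]*=[[:space:]]*"([^"]*)".*$/\2/p' "$1" |
  (
    missing=0
    while IFS= read -r path; do
      if [ ! -f "$path" ]; then
        echo "missing: $path"
        missing=1
      fi
    done
    # The subshell's exit status becomes the function's return value.
    exit "$missing"
  )
}

# Example:
#   check_tls_files /etc/containerd/config.toml && echo "all TLS files present"
```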

Restart containerd:

sudo systemctl daemon-reload

sudo systemctl restart containerd
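After the restart, one way to confirm that containerd actually loaded the edited file is to read the sandbox image back from the effective configuration. This is a small sketch under the assumption that `containerd config dump` is available on the node; `get_sandbox_image` is a hypothetical helper that simply parses the `sandbox_image` line from whatever config text it receives on stdin.

```shell
#!/usr/bin/env bash
# Sketch: print the sandbox_image value found in a containerd config read from stdin.
get_sandbox_image() {
  sed -nE 's/^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"([^"]*)".*$/\1/p' | head -n 1
}

# Example on a node:
#   systemctl is-active containerd
#   containerd config dump | get_sandbox_image
```

If the printed value is not the Harbor address configured above, containerd is probably reading a different configuration file than the one that was edited.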

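4. Add registry.k8s.io/pause

Installing Kubernetes 1.26.12 requires registry.k8s.io/pause. Because this is an offline environment, the image has to be provided manually.

Pull pause 3.9 from the private registry: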

crictl pull 172.23.123.117:8443/kubesphereio/pause:3.9

Tag the 3.9 pause image as registry.k8s.io/pause:3.8. This works around registry.k8s.io/pause:3.8 being unreachable in the offline environment.

ctr -n=k8s.io image tag 172.23.123.117:8443/kubesphereio/pause:3.9 registry.k8s.io/pause:3.8
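The pull and tag have to be repeated on every node, so an idempotent wrapper can be convenient. The following is a sketch only: `image_in_list` and `ensure_pause_alias` are hypothetical helper names, and the image references are the ones used in this post. It relies on `ctr -n k8s.io images ls -q` printing one image reference per line.

```shell
#!/usr/bin/env bash
# Sketch: tag the Harbor pause image as registry.k8s.io/pause:3.8 only if needed.

# Succeeds if the exact reference $1 appears on stdin, one reference per line.
image_in_list() {
  grep -Fxq -- "$1"
}

# Pull and tag only when the target reference is not present yet.
ensure_pause_alias() {
  local src="172.23.123.117:8443/kubesphereio/pause:3.9"
  local dst="registry.k8s.io/pause:3.8"
  if ctr -n k8s.io images ls -q | image_in_list "$dst"; then
    echo "$dst already present"
    return 0
  fi
  crictl pull "$src" && ctr -n k8s.io images tag "$src" "$dst"
}

# Example on a node:
#   ensure_pause_alias
#   ctr -n k8s.io images ls -q | grep pause
```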