Mistral AI开源 Magistral-Small-2507

宣布Magistral——Mistral AI推出的首款推理模型,专精于垂直领域、具备透明化特性与多语言推理能力。

最优秀的人类思维并非线性——它穿梭于逻辑、洞见、不确定性与发现之间。推理型语言模型让我们得以将复杂思考和深度理解交由AI增强或代劳,提升了人类处理需要精确逐步推敲分析问题的能力。

但这一领域仍处于萌芽阶段。早期思维模型存在诸多已知局限:缺乏针对垂直领域问题的专业深度、透明度不足、以及目标语言环境下的推理不连贯等。

今天我们激动地宣布通过Magistral模型为AI研究做出最新贡献——这是我们首个推理模型。Magistral同步推出开源版与企业版,其设计理念是:以人类熟悉的思维方式进行深度推理论证,同时兼具跨专业领域的知识储备、可追踪验证的透明推理过程,以及深度的多语言适应能力。

亮点

Magistral Small 1.1

基于Mistral Small 3.1(2503版本)开发,额外增强推理能力,通过Magistral Medium轨迹进行监督微调并叠加强化学习,最终形成这款高效的小型推理模型,参数量达240亿。

Magistral Small支持本地部署,经量化后可适配单张RTX 4090显卡或32GB内存的MacBook设备运行。

Magistral Small 1.1 版本应提供与 基准测试结果 中 Magistral Small 1.0 相近的性能表现。

本次更新包含以下特性:

- 更优化的语气与模型行为表现。您将体验到更出色的 LaTeX 和 Markdown 格式处理能力,以及对简单通用提示的更简洁回答。

- 模型陷入无限生成循环的概率显著降低。

- 新增

[THINK]和[/THINK]特殊标记用于封装推理内容。该设计既便于解析思维轨迹,也能有效避免提示中出现’[THINK]'字符串时引发混淆。 - 推理提示词现已整合至系统提示模板中。

主要特点

- 推理能力:能够在给出答案前进行长链式推理追踪。

- 多语言支持:支持数十种语言,包括英语、法语、德语、希腊语、印地语、印尼语、意大利语、日语、韩语、马来语、尼泊尔语、波兰语、葡萄牙语、罗马尼亚语、俄语、塞尔维亚语、西班牙语、土耳其语、乌克兰语、越南语、阿拉伯语、孟加拉语、中文及波斯语。

- Apache 2.0许可证:开放许可协议,允许商业及非商业用途的修改和使用。

- 上下文窗口:128k上下文窗口,但超过40k后性能可能下降。因此建议将模型最大长度设置为40k。

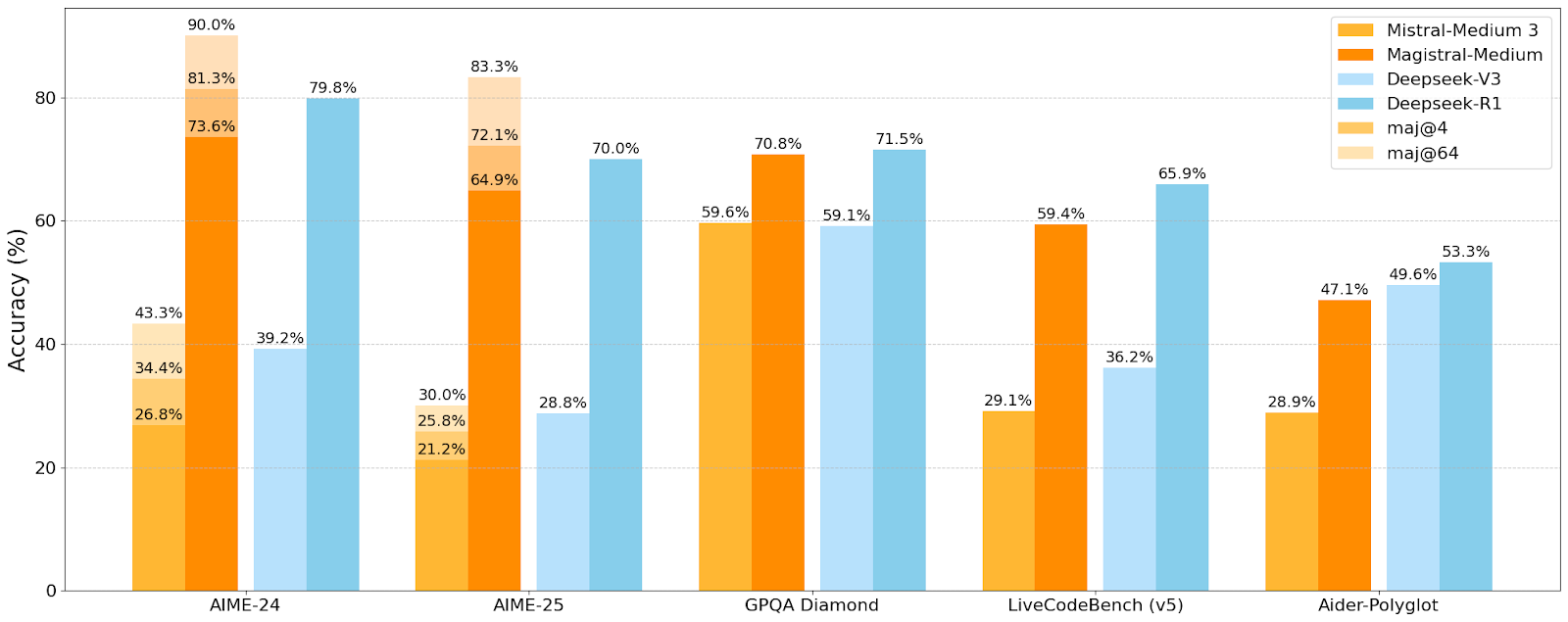

基准测试结果

| Model | AIME24 pass@1 | AIME25 pass@1 | GPQA Diamond | Livecodebench (v5) |

|---|---|---|---|---|

| Magistral Medium 1.1 | 72.03% | 60.99% | 71.46% | 59.35% |

| Magistral Medium 1.0 | 73.59% | 64.95% | 70.83% | 59.36% |

| Magistral Small 1.1 | 70.52% | 62.03% | 65.78% | 59.17% |

| Magistral Small 1.0 | 70.68% | 62.76% | 68.18% | 55.84% |

采样参数

请确保使用:

top_p: 0.95temperature: 0.7max_tokens: 40960

基础聊天模板

为获得最佳效果,我们强烈建议包含以下系统提示词(可根据具体使用场景进行编辑调整):

请先通过思维过程(内心独白)构思,直至形成最终回复。使用Markdown格式撰写回复,数学公式请用LaTeX表示。思维过程和回复内容需与输入语言保持一致。

思维过程必须遵循以下模板:[THINK]您的思考或草稿内容,如同在草稿纸上演算习题。可随意采用非正式表达并充分展开,直至有把握生成最终回复。语言需与输入保持一致。[/THINK]此处提供完整的最终回复内容。

[THINK]和[/THINK]是必须保持原样的特殊标记符。

请务必以mistral-common作为权威参考。下方提供支持mistral-common的库示例。

根据使用场景和需求,您可选择在多轮对话中保留推理痕迹,或仅保留助手最终回复内容。

使用方法

该模型可与以下框架配合使用:

推理

vllm(推荐): 参见下文transformers: 参见下文

此外,社区还准备了量化版本的模型,可与以下框架配合使用(按字母顺序排序):

llama.cpp: https://huggingface.co/mistralai/Magistral-Small-2507-GGUFlmstudio(llama.cpp, MLX): GGUF, MLX-bf16, MLX-8bit, MLX-6bit, MLX-4bit

训练

支持通过以下工具进行微调(按字母顺序排列):

axolotl: https://github.com/axolotl-ai-cloud/axolotl/tree/main/examples/magistralunsloth: https://docs.unsloth.ai/basics/magistral

vLLM(推荐)

我们建议配合使用vLLM库部署生产级推理流水线。

安装指南

请确保安装最新版vLLM代码库:

pip install -U vllm \--pre \--extra-index-url https://wheels.vllm.ai/nightly

该操作会自动安装mistral_common >= 1.8.2版本。

版本验证命令:

python -c "import mistral_common; print(mistral_common.__version__)"

您也可以直接使用现成的 Docker 镜像 或从 Docker Hub 获取。

按如下方式启动模型服务:

vllm serve mistralai/Magistral-Small-2507 --reasoning-parser mistral --tokenizer_mode mistral --config_format mistral --load_format mistral --tool-call-parser mistral --enable-auto-tool-choice --tensor-parallel-size 2

按如下方式测试模型连通性:

from typing import Any

from openai import OpenAI

from huggingface_hub import hf_hub_download# Modify OpenAI's API key and API base to use vLLM's API server.

openai_api_key = "EMPTY"

openai_api_base = "http://localhost:8000/v1"TEMP = 0.7

TOP_P = 0.95

MAX_TOK = 40_960client = OpenAI(api_key=openai_api_key,base_url=openai_api_base,

)models = client.models.list()

model = models.data[0].iddef load_system_prompt(repo_id: str, filename: str) -> dict[str, Any]:file_path = hf_hub_download(repo_id=repo_id, filename=filename)with open(file_path, "r") as file:system_prompt = file.read()index_begin_think = system_prompt.find("[THINK]")index_end_think = system_prompt.find("[/THINK]")return {"role": "system","content": [{"type": "text", "text": system_prompt[:index_begin_think]},{"type": "thinking","thinking": system_prompt[index_begin_think + len("[THINK]") : index_end_think],"closed": True,},{"type": "text","text": system_prompt[index_end_think + len("[/THINK]") :],},],}SYSTEM_PROMPT = load_system_prompt(model, "SYSTEM_PROMPT.txt")query = "Write 4 sentences, each with at least 8 words. Now make absolutely sure that every sentence has exactly one word less than the previous sentence."

# or try out other queries

# query = "Exactly how many days ago did the French Revolution start? Today is June 4th, 2025."

# query = "Think about 5 random numbers. Verify if you can combine them with addition, multiplication, subtraction or division to 133"

# query = "If it takes 30 minutes to dry 12 T-shirts in the sun, how long does it take to dry 33 T-shirts?"messages = [SYSTEM_PROMPT,{"role": "user", "content": query}

]

stream = client.chat.completions.create(model=model,messages=messages,stream=True,temperature=TEMP,top_p=TOP_P,max_tokens=MAX_TOK,

)print("client: Start streaming chat completions...:\n")

printed_reasoning_content = False

answer = []for chunk in stream:reasoning_content = Nonecontent = None# Check the content is reasoning_content or contentif hasattr(chunk.choices[0].delta, "reasoning_content"):reasoning_content = chunk.choices[0].delta.reasoning_contentelif hasattr(chunk.choices[0].delta, "content"):content = chunk.choices[0].delta.contentif reasoning_content is not None:if not printed_reasoning_content:printed_reasoning_content = Trueprint("Start reasoning:\n", end="", flush=True)print(reasoning_content, end="", flush=True)elif content is not None:# Extract and print the contentif not reasoning_content and printed_reasoning_content:answer.extend(content)print(content, end="", flush=True)if answer:print("\n\n=============\nAnswer\n=============\n")print("".join(answer))

else:print("\n\n=============\nNo Answer\n=============\n")print("No answer was generated by the model, probably because the maximum number of tokens was reached.")# client: Start streaming chat completions...:

#

# Start reasoning:

# First, I need to write ...

# ...

#

#

# =============

# Answer

# =============

#

# Here are four sentences where each has at least 8 words, and each subsequent sentence has exactly one word less than the previous one:# 1. The quick brown fox jumps over the lazy dog and rests.

# 2. The lazy dog rests under the big shady tree peacefully.

# 3. The big shady tree provides ample shade during summer.

# 4. The tree's leaves are very lush and green.

Transformers

请确保安装最新版本的Transformers代码:

pip install git+https://github.com/huggingface/transformers

同时请确保安装 mistral_common >= 1.8.2:

pip install --upgrade mistral-common

检查

python -c "import mistral_common; print(mistral_common.__version__)"

现在你可以在Magistral中使用Transformers了:

from typing import Any

import torchfrom huggingface_hub import hf_hub_download

from transformers import AutoModelForCausalLM, AutoTokenizerTEMP = 0.7

TOP_P = 0.95

MAX_TOK = 40_960def load_system_prompt(repo_id: str, filename: str) -> dict[str, Any]:file_path = hf_hub_download(repo_id=repo_id, filename=filename)with open(file_path, "r") as file:system_prompt = file.read()index_begin_think = system_prompt.find("[THINK]")index_end_think = system_prompt.find("[/THINK]")return {"role": "system","content": [{"type": "text", "text": system_prompt[:index_begin_think]},{"type": "thinking","thinking": system_prompt[index_begin_think + len("[THINK]") : index_end_think],"closed": True,},{"type": "text","text": system_prompt[index_end_think + len("[/THINK]") :],},],}model_id = "mistralai/Magistral-Small-2507"

SYSTEM_PROMPT = load_system_prompt(model_id, "SYSTEM_PROMPT.txt")

query = "Think about 5 random numbers. Verify if you can combine them with addition, multiplication, subtraction or division to 133."

# or try out other queries

# query = "Exactly how many days ago did the French Revolution start? Today is June 4th, 2025."

# query = "Write 4 sentences, each with at least 8 words. Now make absolutely sure that every sentence has exactly one word less than the previous sentence."

# query = "If it takes 30 minutes to dry 12 T-shirts in the sun, how long does it take to dry 33 T-shirts?"tokenizer = AutoTokenizer.from_pretrained(model_id, tokenizer_type="mistral", use_fast=False)

model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16, device_map="auto"

)input_ids = tokenizer.apply_chat_template([SYSTEM_PROMPT,{"role": "user", "content": query},],

)output = model.generate(input_ids=torch.tensor([input_ids], device=model.device),pad_token_id=tokenizer.pad_token_id,eos_token_id=tokenizer.eos_token_id,temperature=TEMP,top_p=TOP_P,do_sample=True,max_new_tokens=MAX_TOK,

)[0]decoded_output = tokenizer.decode(output[len(input_ids) :])

print(decoded_output)# [THINK]Alright, I need to think of 5 random numbers first. Let's say I pick the numbers 5, 10, 2, 7, and 3.

#

# Now, I need to see if I can combine these numbers using addition, multiplication, subtraction, or division to get 133.

# ...

# ...

# ...

# But if we're to find any five numbers that can be combined to make 133, then yes, such sets exist, like the one demonstrated above.[/THINK]Yes, it is possible to combine some sets of five random numbers to make 133 using basic arithmetic operations. For example, the numbers 13, 10, 1, 2, and 3 can be combined as follows to make 133:

#

# \[ (13 \times 10) + (3 \times (2 - 1)) = 130 + 3 = 133 \]

#

# However, not all sets of five random numbers can be combined in this way to make 133. For instance, with the numbers 5, 10, 2, 7, and 3, it is not possible to combine them using the allowed operations to get exactly 133.

#

# Therefore, the ability to combine five random numbers to make 133 depends on the specific numbers chosen.

#

# $133 = (13 \times 10) + (3 \times (2 - 1))$</s>