LangChain4j, Part 2 | Declarative AI Services with AiService: A Simpler Paradigm for AI Integration

Introduction: The Pain Points of AI Service Integration, and Spring Boot to the Rescue

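- Pain points. Integrating AI services (such as OpenAI or LangChain) the traditional way runs into several problems:
  - lots of repetitive code (authentication, client configuration, exception handling);
  - configuration scattered around the project and hard to manage in one place;
  - switching between models in a multi-model environment is difficult.

- Spring Boot's answer. It brings in declarative programming:
  - annotations and auto-configuration hide the complexity;
  - the idea is analogous to Spring Data JPA's @Repository: you declare an interface and the framework supplies the implementation.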

1. A First Taste: Quickly Integrating AiService

1.1 Maven dependencies

<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-spring-boot-starter</artifactId>
    <version>1.0.0-beta3</version>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-open-ai-spring-boot-starter</artifactId>
    <version>1.0.0-beta3</version>
</dependency>

1.2 Basic configuration

Configure application.properties as follows:

langchain4j.open-ai.chat-model.base-url=https://api.deepseek.com

langchain4j.open-ai.chat-model.api-key=<your-api-key>

langchain4j.open-ai.chat-model.model-name=deepseek-chat

langchain4j.open-ai.chat-model.log-requests=true

langchain4j.open-ai.chat-model.log-responses=true

1.3 Building the AiService

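// Assistant.java
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.spring.AiService;

// Declarative AI service: only the contract is written by hand.
@AiService
interface Assistant {

    @SystemMessage("Limit answers to 50 words and format the output as Markdown")
    String chat(String userMessage);
}

// ChatController.java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class ChatController {

    @Autowired
    Assistant assistant;

    // GET /chatAs?message=... forwards the message to the model.
    @GetMapping("/chatAs")
    public String chat(String message) {
        return assistant.chat(message);
    }
}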

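When the application starts, the LangChain4j starter scans the classpath and finds every interface annotated with @AiService. For each AI Service it finds, it creates an implementation of the interface using all LangChain4j components available in the application context, and registers that implementation as a bean.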
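To make the "creates an implementation of the interface" step more concrete, here is a minimal sketch built on a JDK dynamic proxy. It is not LangChain4j's actual code: the @Prompt annotation, the EchoAssistant interface, and the ProxySketch class are hypothetical stand-ins, and the "model" is just a function that echoes its input.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.function.BinaryOperator;

// Hypothetical stand-in for @SystemMessage; not part of LangChain4j.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Prompt {
    String value();
}

// A declarative service: only the contract is written by hand.
interface EchoAssistant {
    @Prompt("You are a polite assistant")
    String chat(String userMessage);
}

class ProxySketch {

    // Builds an implementation of the given interface at runtime.
    // `model` is a fake LLM: (systemPrompt, userMessage) -> reply.
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> serviceType, BinaryOperator<String> model) {
        return (T) Proxy.newProxyInstance(
                serviceType.getClassLoader(),
                new Class<?>[] {serviceType},
                (proxy, method, args) -> {
                    if (method.getDeclaringClass() == Object.class) {
                        // equals/hashCode/toString are out of scope for this sketch.
                        throw new UnsupportedOperationException(method.getName());
                    }
                    // Read the declarative metadata and delegate to the model.
                    Prompt prompt = method.getAnnotation(Prompt.class);
                    String system = prompt == null ? "" : prompt.value();
                    return model.apply(system, (String) args[0]);
                });
    }

    static String demo() {
        BinaryOperator<String> fakeModel = (system, user) -> "[" + system + "] " + user;
        EchoAssistant assistant = create(EchoAssistant.class, fakeModel);
        return assistant.chat("hello");
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "[You are a polite assistant] hello"
    }
}
```

The caller never sees an implementation class. It declares an interface, and something else decides at runtime how each method call turns into a model request.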

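Testing the result

In the @SystemMessage above we constrained the output: answers are limited to 50 words and formatted as Markdown. Calling the /chatAs endpoint returns a response that follows these constraints, and with that the basic integration is complete.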

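2. High-Level Operations with AiService

2.1 Integrating multiple models

Sometimes we have several AI services and want to wire different LangChain4j components into each of them. For example, we have already integrated the hosted DeepSeek API above; what if we now want to bring in a locally deployed model as well? Next we show how to call a DeepSeek model served locally by Ollama.

Add the Maven dependency for the Ollama integration: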

<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-ollama-spring-boot-starter</artifactId>
    <version>1.0.0-beta3</version>
</dependency>

Add the following to application.properties:

# Ollama server address
langchain4j.ollama.chat-model.base-url=http://127.0.0.1:11868

# Name of the model running locally
langchain4j.ollama.chat-model.model-name=deepseek-r1:14b

langchain4j.ollama.chat-model.log-requests=true

langchain4j.ollama.chat-model.log-responses=true

Write OllamaAssistant. Note that wiringMode must be set to AiServiceWiringMode.EXPLICIT and chatModel to ollamaChatModel.

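import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.spring.AiService;
import dev.langchain4j.service.spring.AiServiceWiringMode;

// EXPLICIT wiring: this service uses the bean named "ollamaChatModel".
@AiService(wiringMode = AiServiceWiringMode.EXPLICIT, chatModel = "ollamaChatModel")
interface OllamaAssistant {

    @SystemMessage("You are a polite assistant")
    String chat(String userMessage);
}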

Expose OllamaAssistant through the chatOllama endpoint:

@RestController
public class OllamaChatController {

    // Inject the Ollama-backed AI service.
    @Autowired
    OllamaAssistant ollamaAssistant;

    @GetMapping("/chatOllama")
    public String chatOllama(String message) {
        return ollamaAssistant.chat(message);
    }
}

A quick test shows that the call goes to the local model we specified. Perfect!

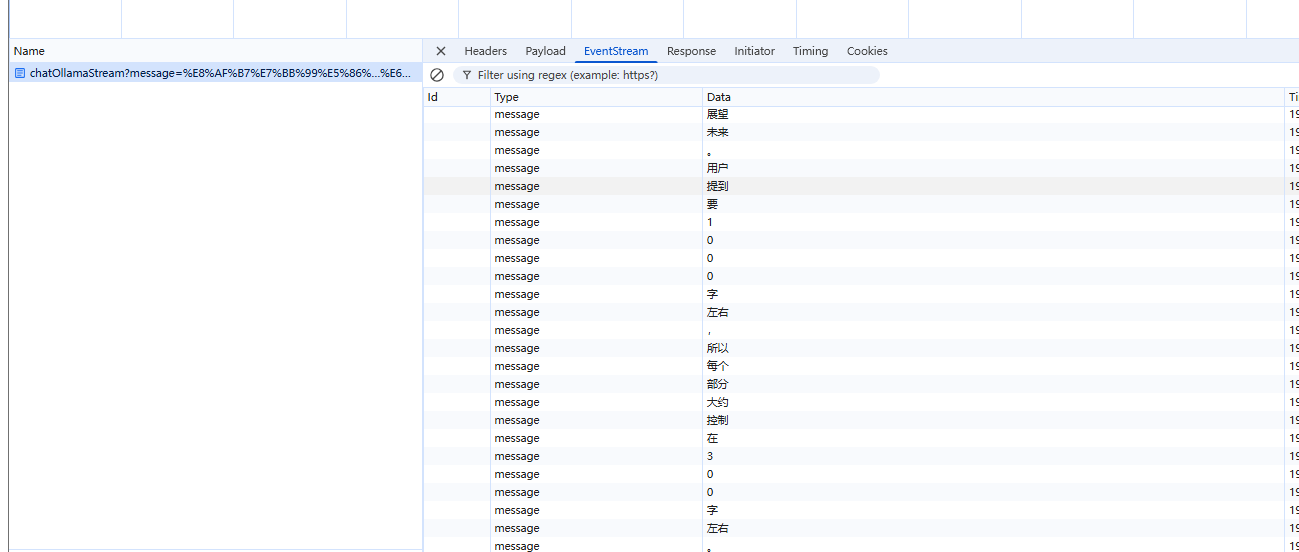
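One consequence is worth spelling out. Once both the OpenAI and the Ollama starters are configured, the context holds two chat model beans, so a service left in automatic wiring mode no longer has a single obvious candidate. The sketch below models that resolution rule in plain Java. It is a simplified illustration based on my understanding of the starter's behavior, not its actual code: WiringSketch, resolve, and the String "models" are hypothetical, and the bean name openAiChatModel is assumed from the LangChain4j documentation rather than taken from this article.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class WiringSketch {

    // Picks the model for an AI service.
    // explicitName == null models AUTOMATIC wiring; otherwise EXPLICIT.
    static String resolve(Map<String, String> modelBeans, String explicitName) {
        if (explicitName != null) {
            String model = modelBeans.get(explicitName);
            if (model == null) {
                throw new IllegalStateException("No model bean named " + explicitName);
            }
            return model;
        }
        if (modelBeans.isEmpty()) {
            throw new IllegalStateException("No model bean available");
        }
        if (modelBeans.size() > 1) {
            // Automatic wiring cannot choose between several candidates.
            throw new IllegalStateException(
                    "Ambiguous model beans " + modelBeans.keySet() + ", use explicit wiring");
        }
        return modelBeans.values().iterator().next();
    }

    // Bean name -> model name, mirroring the two configurations in this article.
    static Map<String, String> twoModels() {
        Map<String, String> beans = new LinkedHashMap<>();
        beans.put("openAiChatModel", "deepseek-chat");
        beans.put("ollamaChatModel", "deepseek-r1:14b");
        return beans;
    }

    public static void main(String[] args) {
        System.out.println(resolve(twoModels(), "ollamaChatModel"));
        try {
            resolve(twoModels(), null);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

If your application behaves this way, the Assistant from section 1.3 would probably also need wiringMode = AiServiceWiringMode.EXPLICIT together with chatModel = "openAiChatModel" once the Ollama starter is added.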

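2.2 Streaming output

In the examples above, the whole answer is generated first and then returned in one piece, which makes for a poor user experience when answers are long. To stream the output instead, use Flux as the return type of the AiService method.

Add the Maven dependency: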

<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-reactor</artifactId>
    <version>1.0.0-beta3</version>
</dependency>

Add the following to application.properties:

langchain4j.ollama.streaming-chat-model.base-url=http://192.168.201.225:11868

langchain4j.ollama.streaming-chat-model.model-name=deepseek-r1:14b

Write the AiService, setting streamingChatModel to ollamaStreamingChatModel:

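import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.spring.AiService;
import dev.langchain4j.service.spring.AiServiceWiringMode;
import reactor.core.publisher.Flux;

// Returning Flux<String> makes the service emit the answer piece by piece.
@AiService(wiringMode = AiServiceWiringMode.EXPLICIT, streamingChatModel = "ollamaStreamingChatModel")
interface OllamaStreamAssistant {

    @SystemMessage("You are a polite assistant")
    Flux<String> chat(String userMessage);
}

// Controller side (assumed here to be the OllamaChatController from section 2.1;
// any @RestController works). Requires org.springframework.http.MediaType.
@RestController
public class OllamaChatController {

    @Autowired
    OllamaStreamAssistant ollamaStreamAssistant;

    // text/event-stream lets the client receive tokens as they arrive.
    @GetMapping(value = "/chatOllamaStream", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<String> chatOllamaStream(String message) {
        return ollamaStreamAssistant.chat(message);
    }
}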

The test result shows that streaming output now works.
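With produces = MediaType.TEXT_EVENT_STREAM_VALUE, each element emitted by the Flux reaches the client as one server-sent event. The sketch below shows that wire format in plain Java, with a hard-coded token list standing in for a real model. SseSketch, toSseFrame, and toSseStream are hypothetical names, and this illustrates the event framing only, not Spring's implementation.

```java
import java.util.List;
import java.util.stream.Collectors;

class SseSketch {

    // Frames one token as a server-sent event: every line of the payload
    // gets a "data:" prefix, and a blank line terminates the event.
    static String toSseFrame(String token) {
        StringBuilder frame = new StringBuilder();
        for (String line : token.split("\n", -1)) {
            frame.append("data:").append(line).append('\n');
        }
        return frame.append('\n').toString();
    }

    // Concatenates the frames in emission order, as they would appear on the wire.
    static String toSseStream(List<String> tokens) {
        return tokens.stream().map(SseSketch::toSseFrame).collect(Collectors.joining());
    }

    public static void main(String[] args) {
        // Stand-in for the tokens a streaming model would emit.
        System.out.print(toSseStream(List.of("Hel", "lo", "!")));
    }
}
```

A client reading this stream can render each data payload as soon as it arrives instead of waiting for the full answer.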

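2.3 Other configuration

As the source of the @AiService annotation shows, there are many more features, such as ChatMemory for managing conversation memory and Tools for tool use. We won't go into them here.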

package dev.langchain4j.service.spring;

import dev.langchain4j.agent.tool.Tool;
import dev.langchain4j.memory.ChatMemory;
import dev.langchain4j.memory.chat.ChatMemoryProvider;
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.chat.StreamingChatLanguageModel;
import dev.langchain4j.model.moderation.ModerationModel;
import dev.langchain4j.rag.RetrievalAugmentor;
import dev.langchain4j.rag.content.retriever.ContentRetriever;
import dev.langchain4j.service.AiServices;
import org.springframework.stereotype.Service;

import java.lang.annotation.Retention;
import java.lang.annotation.Target;

import static dev.langchain4j.service.spring.AiServiceWiringMode.AUTOMATIC;
import static java.lang.annotation.ElementType.TYPE;
import static java.lang.annotation.RetentionPolicy.RUNTIME;

/**
 * An interface annotated with {@code @AiService} will be automatically registered as a bean
 * and wired with all the following components (beans) available in the context:
 * <pre>
 * - {@link ChatLanguageModel}
 * - {@link StreamingChatLanguageModel}
 * - {@link ChatMemory}
 * - {@link ChatMemoryProvider}
 * - {@link ContentRetriever}
 * - {@link RetrievalAugmentor}
 * - All beans containing methods annotated with {@code @}{@link Tool}
 * </pre>
 * You can also explicitly specify which components (beans) should be wired into this AI Service
 * by setting {@link #wiringMode()} to {@link AiServiceWiringMode#EXPLICIT}
 * and specifying bean names using the following attributes:
 * <pre>
 * - {@link #chatModel()}
 * - {@link #streamingChatModel()}
 * - {@link #chatMemory()}
 * - {@link #chatMemoryProvider()}
 * - {@link #contentRetriever()}
 * - {@link #retrievalAugmentor()}
 * </pre>
 * See more information about AI Services <a href="https://docs.langchain4j.dev/tutorials/ai-services">here</a>
 * and in the Javadoc of {@link AiServices}.
 *
 * @see AiServices
 */
@Service
@Target(TYPE)
@Retention(RUNTIME)
public @interface AiService {

    /**
     * Specifies how LangChain4j components (beans) are wired (injected) into this AI Service.
     */
    AiServiceWiringMode wiringMode() default AUTOMATIC;

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link ChatLanguageModel} bean that should be used by this AI Service.
     */
    String chatModel() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link StreamingChatLanguageModel} bean that should be used by this AI Service.
     */
    String streamingChatModel() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link ChatMemory} bean that should be used by this AI Service.
     */
    String chatMemory() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link ChatMemoryProvider} bean that should be used by this AI Service.
     */
    String chatMemoryProvider() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link ContentRetriever} bean that should be used by this AI Service.
     */
    String contentRetriever() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link RetrievalAugmentor} bean that should be used by this AI Service.
     */
    String retrievalAugmentor() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the name of a {@link ModerationModel} bean that should be used by this AI Service.
     */
    String moderationModel() default "";

    /**
     * When the {@link #wiringMode()} is set to {@link AiServiceWiringMode#EXPLICIT},
     * this attribute specifies the names of beans containing methods annotated with {@link Tool}
     * that should be used by this AI Service.
     */
    String[] tools() default {};
}

Conclusion

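In this article we covered the basic use of AiService. Building on the features LangChain4j wraps up for us, it is quite convenient to build your own AI applications.

Recommended resources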

https://docs.langchain4j.dev/tutorials/spring-boot-integration