Deploying PaddleOCR Models on Ascend 300I DUO

I. Environment

- Ascend: 310P3, 96G × 4

- CANN: 8.0.RC3

- driver: 24.1.rc3

- arch: aarch64

- os: linux

How do you check the CANN and driver versions?

cd /usr/local/Ascend/ascend-toolkit/latest

cat version.cfg

cat /usr/local/Ascend/driver/version.info
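If you want to capture these versions programmatically (for example, in a deployment log), a minimal Python sketch follows. It assumes both files are plain text made of `key=value` lines; the exact key names differ between CANN and driver releases, so it simply returns whatever pairs it finds. `read_key_values` is a hypothetical helper, not part of any Ascend tooling.

```python
from pathlib import Path


def read_key_values(path):
    """Parse simple `key=value` lines into a dict, skipping blanks and `#` comments."""
    result = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        result[key.strip()] = value.strip()
    return result


if __name__ == "__main__":
    # Paths taken from the shell commands above; the keys inside each file are not guaranteed.
    for p in ("/usr/local/Ascend/ascend-toolkit/latest/version.cfg",
              "/usr/local/Ascend/driver/version.info"):
        try:
            print(p, read_key_values(p))
        except OSError as exc:
            print(p, "unreadable:", exc)
```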

II. Configuration

Download the source code

git clone -b main https://gitee.com/paddlepaddle/PaddleOCR.git

cd PaddleOCR

# To ensure a consistent code base, check out the specified commit ID

git reset --hard 433677182

Create a virtual environment (choose the Python version to match the MindSpore Lite build you will install)

# Create the virtual environment

conda create -n paddleocr python=3.9 -y

conda activate paddleocr

pip install -r requirements.txt

pip install paddlepaddle==2.6.1 paddle2onnx==1.2.4

Prepare the inference models

mkdir inference

cd inference

wget -nc https://paddleocr.bj.bcebos.com/PP-OCRv4/chinese/ch_PP-OCRv4_det_server_infer.tar

tar -xf ch_PP-OCRv4_det_server_infer.tar

wget -nc https://paddleocr.bj.bcebos.com/PP-OCRv4/chinese/ch_PP-OCRv4_rec_server_infer.tar

tar -xf ch_PP-OCRv4_rec_server_infer.tar

paddle2onnx
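The two conversions in this step can also be scripted from Python. The sketch below builds the same `paddle2onnx` argument lists used in this guide and, when `dry_run` is turned off, runs them with `subprocess`. The helper names (`paddle2onnx_cmd`, `run_all`, `JOBS`) are hypothetical, the paths are assumed to be relative to the PaddleOCR root, and creating the output directory first is an added precaution rather than a documented requirement.

```python
import shlex
import subprocess
from pathlib import Path


def paddle2onnx_cmd(model_dir, save_file, opset=11):
    """Build the paddle2onnx command used in this guide for one inference model."""
    return [
        "paddle2onnx",
        "--model_dir", model_dir,
        "--model_filename", "inference.pdmodel",
        "--params_filename", "inference.pdiparams",
        "--save_file", save_file,
        "--opset_version", str(opset),
        "--enable_onnx_checker", "True",
    ]


# Detection and recognition models, relative to the PaddleOCR root directory.
JOBS = [
    ("inference/ch_PP-OCRv4_det_server_infer", "inference/det/model.onnx"),
    ("inference/ch_PP-OCRv4_rec_server_infer", "inference/rec/model.onnx"),
]


def run_all(jobs, dry_run=True):
    """Print each command; unless dry_run, also create the output dir and execute it."""
    cmds = []
    for model_dir, save_file in jobs:
        cmd = paddle2onnx_cmd(model_dir, save_file)
        print(shlex.join(cmd))
        if not dry_run:
            Path(save_file).parent.mkdir(parents=True, exist_ok=True)
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
        cmds.append(cmd)
    return cmds
```

From the PaddleOCR root, `run_all(JOBS, dry_run=False)` performs both conversions; the default dry run only prints the commands.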

# Return to the PaddleOCR root; the paths below are relative to it
cd ..

paddle2onnx --model_dir inference/ch_PP-OCRv4_det_server_infer \
  --model_filename inference.pdmodel \
  --params_filename inference.pdiparams \
  --save_file inference/det/model.onnx \
  --opset_version 11 \
  --enable_onnx_checker True

paddle2onnx --model_dir inference/ch_PP-OCRv4_rec_server_infer \
  --model_filename inference.pdmodel \
  --params_filename inference.pdiparams \
  --save_file inference/rec/model.onnx \
  --opset_version 11 \
  --enable_onnx_checker True

onnx2mindir

wget https://ms-release.obs.cn-north-4.myhuaweicloud.com/2.4.1/MindSpore/lite/release/linux/aarch64/cloud_fusion/python37/mindspore-lite-2.4.1-linux-aarch64.tar.gz

tar -zxvf mindspore-lite-2.4.1-linux-aarch64.tar.gz

export LD_LIBRARY_PATH=/home/PaddleOCR/mindspore-lite-2.4.1-linux-aarch64/tools/converter/lib:${LD_LIBRARY_PATH}

Use the `converter_lite` tool to convert the detection and recognition models to `mindir` format, one at a time.
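When the conversion is driven from a Python script instead of an interactive shell, the `LD_LIBRARY_PATH` export has to be passed to the child process explicitly. A minimal sketch, assuming the install location used in this guide; `converter_env`, `converter_cmd` and `convert` are hypothetical helpers, and the flags are exactly the ones used in the commands for this step.

```python
import os
import subprocess
from pathlib import Path

# Install location used in this guide; adjust to wherever the tarball was extracted.
MSLITE_HOME = Path("/home/PaddleOCR/mindspore-lite-2.4.1-linux-aarch64")
CONVERTER = MSLITE_HOME / "tools/converter/converter/converter_lite"
CONVERTER_LIB = MSLITE_HOME / "tools/converter/lib"


def converter_env(base=None):
    """Copy the environment and prepend the converter lib dir to LD_LIBRARY_PATH."""
    env = dict(os.environ if base is None else base)
    old = env.get("LD_LIBRARY_PATH", "")
    env["LD_LIBRARY_PATH"] = str(CONVERTER_LIB) + (os.pathsep + old if old else "")
    return env


def converter_cmd(onnx_file, output_prefix):
    """ONNX -> MindIR with Ascend-oriented optimization (output gets a .mindir suffix)."""
    return [
        str(CONVERTER),
        "--fmk=ONNX",
        "--saveType=MINDIR",
        "--optimize=ascend_oriented",
        f"--modelFile={onnx_file}",
        f"--outputFile={output_prefix}",
    ]


def convert(onnx_file, output_prefix):
    # Run from the converter directory, mirroring the `cd` in the shell steps.
    subprocess.run(converter_cmd(onnx_file, output_prefix), check=True,
                   cwd=CONVERTER.parent, env=converter_env())
```

For example, `convert("/home/PaddleOCR/inference/det/model.onnx", "/home/PaddleOCR/inference/det/model")` should leave `model.mindir` next to the ONNX file; repeat with the `rec` paths.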

cd /home/PaddleOCR/mindspore-lite-2.4.1-linux-aarch64/tools/converter/converter

./converter_lite --fmk=ONNX \
  --saveType=MINDIR \
  --optimize=ascend_oriented \
  --modelFile=/home/PaddleOCR/inference/det/model.onnx \
  --outputFile=/home/PaddleOCR/inference/det/model

./converter_lite --fmk=ONNX \
  --saveType=MINDIR \
  --optimize=ascend_oriented \
  --modelFile=/home/PaddleOCR/inference/rec/model.onnx \
  --outputFile=/home/PaddleOCR/inference/rec/model

Modify the code
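All of the changes in this section hang off one new boolean flag, `--use_mindir`. The sketch below is a hypothetical, self-contained reduction of that wiring for illustration, not PaddleOCR code: a `str2bool` converter in the style of PaddleOCR's helper (plain `type=bool` would not work, because `bool("False")` is `True`), and a `select_backend` function that mirrors the `if`/`elif`/`else` order added to `create_predictor`.

```python
import argparse


def str2bool(v):
    # Only "true"-like strings count as True; everything else is False.
    return v.lower() in ("true", "yes", "t", "y", "1")


def build_parser():
    parser = argparse.ArgumentParser()
    parser.add_argument("--use_onnx", type=str2bool, default=False)
    parser.add_argument("--use_mindir", type=str2bool, default=False)
    parser.add_argument("--gpu_id", type=int, default=0)  # reused as the Ascend device id
    return parser


def select_backend(args):
    # Same precedence as the if/elif/else chain in create_predictor.
    if args.use_onnx:
        return "onnx"
    elif args.use_mindir:
        return "mindir"
    return "paddle"
```

Because ONNX is checked first, passing both `--use_onnx=True` and `--use_mindir=True` would still select ONNX.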

`utility.py` under PaddleOCR/tools/infer

# In the init_args function (around line 39), add --use_mindir to enable MindIR inference

parser.add_argument("--use_mindir", type=str2bool, default=False)

# In the create_predictor function (around line 214), add the MindIR model initialization logic; this code is shared by detection, recognition, and classification

if args.use_onnx:...

# ================ Add this code ================

elif args.use_mindir:  # for Ascend inference
    import mindspore_lite as mslite
    model_file_path = model_dir
    if not os.path.exists(model_file_path):
        raise ValueError("not find model file path {}".format(model_file_path))
    # Initialize the context and set the target to Ascend.
    context = mslite.Context()
    context.target = ["ascend"]  # cpu/gpu/ascend
    context.ascend.device_id = args.gpu_id  # defaults to 0
    # context.ascend.provider = "ge"
    model = mslite.Model()
    model.build_from_file(model_file_path, mslite.ModelType.MINDIR, context)
    return model, model.get_inputs(), None, None

# ================ Add this code ================

else:
    ...

`predict_det.py`, `predict_rec.py`, `predict_cls.py`

# In the __init__ function (around line 43), save the value of args.use_mindir

self.use_mindir = args.use_mindir

# In the predict function of TextDetector (around line 254), add the MindIR inference logic

if self.use_onnx:...

# ================ Add this code ================

elif self.use_mindir:  # for Ascend inference
    self.predictor.resize(self.input_tensor, [img.shape])  # dynamic shape
    inputs = self.predictor.get_inputs()
    inputs[0].set_data_from_numpy(img)
    # execute inference
    outputs = self.predictor.predict(inputs)
    outputs = [o.get_data_to_numpy() for o in outputs]

# ================ Add this code ================

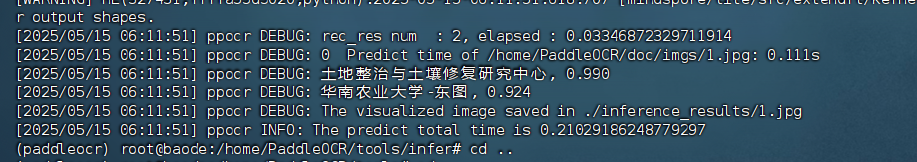

else:
    self.input_tensor.copy_from_cpu(img)
    ...

Test
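Before running the full pipeline, it can be useful to check that the converted detection model loads and accepts a dynamically shaped input on its own. The sketch below assumes `numpy` and `mindspore_lite` are installed and reuses the same MindSpore Lite calls as the `utility.py` and `predict_det.py` changes. `det_input_shape` and `smoke_test_det` are hypothetical helpers; the shape function approximates PaddleOCR's detection resize with its default `max` limit of 960 and rounding to multiples of 32, so treat it as an approximation of the real preprocessing. The random input only exercises the graph; it says nothing about accuracy.

```python
import os


def det_input_shape(h, w, limit_side_len=960):
    """Approximate the NCHW shape fed to the det model (limit_type='max')."""
    ratio = limit_side_len / max(h, w) if max(h, w) > limit_side_len else 1.0
    rh, rw = int(h * ratio), int(w * ratio)
    # Round each side to a multiple of 32, with a floor of 32.
    rh = max(int(round(rh / 32) * 32), 32)
    rw = max(int(round(rw / 32) * 32), 32)
    return [1, 3, rh, rw]


def smoke_test_det(model_path, h=640, w=480, device_id=0):
    """Load a converted det MindIR on Ascend and push one random input through it."""
    if not os.path.exists(model_path):
        raise ValueError(f"model file not found: {model_path}")
    # Imported lazily so the shape helper above works without these packages.
    import numpy as np
    import mindspore_lite as mslite

    context = mslite.Context()
    context.target = ["ascend"]
    context.ascend.device_id = device_id
    model = mslite.Model()
    model.build_from_file(model_path, mslite.ModelType.MINDIR, context)

    shape = det_input_shape(h, w)
    model.resize(model.get_inputs(), [shape])  # dynamic shape, as in predict_det.py
    inputs = model.get_inputs()
    inputs[0].set_data_from_numpy(np.random.rand(*shape).astype(np.float32))
    outputs = model.predict(inputs)
    return [o.get_data_to_numpy().shape for o in outputs]
```

For example, `smoke_test_det("/home/PaddleOCR/inference/det/model.mindir")` returns the output shapes; if it fails, the problem lies in the conversion or the runtime rather than in the PaddleOCR changes.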

python /home/PaddleOCR/tools/infer/predict_system.py \
  --use_mindir=True \
  --gpu_id=0 \
  --image_dir=/home/PaddleOCR/doc/imgs/1.jpg \
  --det_model_dir=/home/PaddleOCR/inference/det/model.mindir \
  --rec_model_dir=/home/PaddleOCR/inference/rec/model.mindir \
  --rec_char_dict_path=/home/PaddleOCR/ppocr/utils/ppocr_keys_v1.txt \
  --use_angle_cls=False \
  --vis_font_path=/home/PaddleOCR/doc/fonts/simfang.ttf

III. Common Issues

ModuleNotFoundError: No module named 'mindspore_lite'

Traceback (most recent call last):
  File "/home/PaddleOCR/tools/infer/predict_system.py", line 327, in <module>
    main(args)
  File "/home/PaddleOCR/tools/infer/predict_system.py", line 189, in main
    text_sys = TextSystem(args)
  File "/home/PaddleOCR/tools/infer/predict_system.py", line 53, in __init__
    self.text_detector = predict_det.TextDetector(args)
  File "/home/PaddleOCR/tools/infer/predict_det.py", line 140, in __init__
    ) = utility.create_predictor(args, "det", logger)
  File "/home/PaddleOCR/tools/infer/utility.py", line 216, in create_predictor
    import mindspore_lite as mslite

ModuleNotFoundError: No module named 'mindspore_lite'


Fix: install the MindSpore Lite Python wheel that matches the virtual environment's Python version (cp39 for Python 3.9):

pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/2.4.1/MindSpore/lite/release/linux/aarch64/cloud_fusion/python39/mindspore_lite-2.4.1-cp39-cp39-linux_aarch64.whl
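To check whether the active interpreter can see `mindspore_lite`, and to derive the wheel name for a different Python version, a small sketch follows. The URL template is copied from the cp39 wheel above; that other Python versions follow the same layout (`pythonXY/...-cpXY-cpXY-...`) is an assumption, so confirm the file exists on the release server before installing. `mslite_available` and `wheel_url` are hypothetical helpers.

```python
import importlib.util
import sys

# Layout copied from the 2.4.1 cp39 aarch64 wheel; other tags are assumed to match it.
WHEEL_TEMPLATE = (
    "https://ms-release.obs.cn-north-4.myhuaweicloud.com/{ver}/MindSpore/lite/release/"
    "linux/aarch64/cloud_fusion/python{mm}/mindspore_lite-{ver}-cp{mm}-cp{mm}-linux_aarch64.whl"
)


def mslite_available():
    """True if `import mindspore_lite` would find the package in this interpreter."""
    return importlib.util.find_spec("mindspore_lite") is not None


def wheel_url(version="2.4.1", py=None):
    """Fill the template for a (major, minor) Python version, defaulting to the current one."""
    major, minor = py or sys.version_info[:2]
    return WHEEL_TEMPLATE.format(ver=version, mm=f"{major}{minor}")


if __name__ == "__main__":
    print("mindspore_lite importable:", mslite_available())
    print("pip install", wheel_url())
```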

Inference results