Deploying a YOLOv5 Instance Segmentation Model with TensorRT 10 (Part 2)

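Based on experience from real projects, this article reorganizes the file structure of the earlier code so that the deployment code is easier to embed into different projects and is also more robust. Because different projects use different versions of the deployment framework, this article uses the TensorRT 10 API to deploy the YOLOv5 instance segmentation model.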

Without further ado, here is the code. The deployment code consists of three files: utils.hpp, TrtModel.hpp, and TrtModel.cpp.

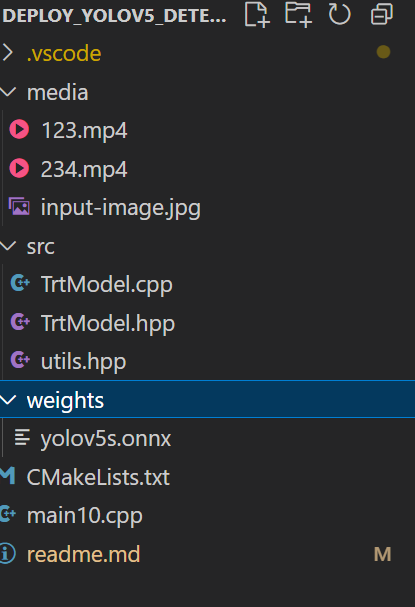

我的项目文件结构如下图:

The utils.hpp file is as follows:

    #ifndef UTILS_HPP
    #define UTILS_HPP

    #include <opencv2/opencv.hpp>
    #include <cuda_runtime.h>
    #include <cassert>
    #include <iostream>
    #include <memory>

    // Report a failing CUDA runtime call with its location, then abort in debug builds.
    #ifndef CUDA_CHECK
    #define CUDA_CHECK(call) \
        do { \
            cudaError_t err__ = (call); \
            if (err__ != cudaSuccess) { \
                std::cerr << "CUDA error [" << static_cast<int>(err__) << "] " \
                          << cudaGetErrorString(err__) << " at " << __FILE__ \
                          << ":" << __LINE__ << std::endl; \
                assert(false); \
            } \
        } while (0)
    #endif

    // // Manage TensorRT/NV objects: call p->destroy()
    // // Note: only TensorRT objects use this helper (they need destroy() instead of delete).
    // template<typename T>
    // inline std::shared_ptr<T> make_nvshared(T* ptr){
    //     return std::shared_ptr<T>(ptr, [](T* p){ if(p) p->destroy(); });
    // }

    // Manage TensorRT/NV objects: release with `delete p;`
    template<typename T>
    inline std::shared_ptr<T> make_nvshared(T* ptr){
        return std::shared_ptr<T>(ptr, std::default_delete<T>());
    }

    /*-------------------------- YOLOV5_SEGMENT --------------------------*/
    struct segmentRes {
        int label{-1};            // class id
        float confidence{0.0f};   // confidence
        cv::Rect box;             // bounding box
        cv::Mat boxMask;          // mask map (single channel, CV_8U)
        cv::Scalar box_color;
        cv::Scalar seg_color;
        segmentRes() = default;
    };

    /*------------------- Project-specific data structures -------------------*/
    struct DetectInfo {
        int classID{-1};          // class id
        float confidence{0.0f};   // confidence
        cv::Rect bbox;            // bounding box
        double area{0.0};
    };

    #endif // UTILS_HPP

As in the previous part, TensorRT 10 objects do not provide a destroy() method (it was deprecated in TensorRT 8 and removed in TensorRT 10), so they are released with a plain delete. The commented-out helper is only needed when building against an older TensorRT version that still requires destroy().
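To make the ownership behavior of `make_nvshared` easier to see without installing TensorRT, here is a minimal sketch. `FakeNvObject` and `make_nvshared_sketch` are hypothetical names introduced only for this illustration; they are not TensorRT types. The sketch assumes, as utils.hpp does, that the managed object can be released with a plain `delete`.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for a TensorRT interface object (NOT a real TensorRT type).
// It counts how many instances are currently alive.
struct FakeNvObject {
    static int live;
    FakeNvObject() { ++live; }
    ~FakeNvObject() { --live; }
};
int FakeNvObject::live = 0;

// Same shape as make_nvshared in utils.hpp: the shared_ptr takes ownership
// and releases the object with `delete` when the last owner goes away.
template <typename T>
std::shared_ptr<T> make_nvshared_sketch(T* ptr) {
    return std::shared_ptr<T>(ptr, std::default_delete<T>());
}

// Creates one object with two owners and returns the number of live objects
// while both owners exist. Both owners are destroyed when the function returns.
int live_while_shared() {
    auto a = make_nvshared_sketch(new FakeNvObject());
    auto b = a;  // second owner; the object itself is not copied
    assert(a.use_count() == 2);
    return FakeNvObject::live;
}
```

Calling `live_while_shared()` should report one live object, and `FakeNvObject::live` should be back to zero afterwards, which is the same lifetime guarantee the runtime, engine, and context members rely on.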

The TrtModel.hpp file is as follows:

    #ifndef TRTMODEL_HPP
    #define TRTMODEL_HPP

    #include <NvInfer.h>
    #include <NvOnnxParser.h>
    #include "logger.h"
    #include "common.h"
    #include <fstream>
    #include <iostream>
    #include <vector>
    #include <string>
    #include <random>
    #include <cuda_runtime_api.h>
    #include <numeric>
    #include <array>

    #include "utils.hpp"

    class TrtModel
    {
    public:
        TrtModel(std::string onnxfilepath, bool fp16);
        ~TrtModel();

        std::vector<segmentRes> segment_postprocess(cv::Mat& frame);
        bool seg_drawResult(cv::Mat& img, const std::vector<segmentRes>& result);

    private:
        bool genEngine();
        std::vector<unsigned char> load_engine_file();
        bool Runtime();
        bool trtIOMemory();
        void preprocess(cv::Mat srcimg);
        bool createCudaGraph();

        // TRT runtime objects (shared_ptr with custom deleter)
        std::shared_ptr<nvinfer1::IRuntime> m_runtime{nullptr};
        std::shared_ptr<nvinfer1::ICudaEngine> m_engine{nullptr};
        std::shared_ptr<nvinfer1::IExecutionContext> m_context{nullptr};
        cudaStream_t m_stream{nullptr};

        // Names
        std::string m_inputName;
        std::vector<std::string> m_outputNames;  // can be 1 or 2 outputs

        // Host / device buffers
        float* m_input_device_memory{nullptr};
        float* m_input_host_memory{nullptr};
        // index 0 -> detection (host/device), index 1 -> prototype/segmentation (host/device)
        std::array<float*, 2> m_output_host_memory{{nullptr, nullptr}};
        std::array<float*, 2> m_output_device_memory{{nullptr, nullptr}};

        nvinfer1::Dims m_inputDims{};
        nvinfer1::Dims m_detectDims{};   // detection output dims (N, boxnum, attrs)
        nvinfer1::Dims m_segmentDims{};  // segmentation protos dims (N, C, H, W)

        std::string m_enginePath;
        std::string onnx_file_path;
        bool FP16{false};

        int m_inputSize{0};
        int m_imgArea{0};
        int m_detectSize{0};
        int m_segmentSize{0};
        int kInputH{0};
        int kInputW{0};

        float kNmsThresh = 0.2f;
        float kConfThresh = 0.5f;
        float kMaskThresh = 0.5f;

        float i2d[6] {}, d2i[6] {};

        cudaGraphExec_t m_graphExec {nullptr};
        bool m_useGraph {false};

        // helper: number of elements in a tensor (non-positive dims count as 1)
        static inline size_t volume(const nvinfer1::Dims& d) {
            size_t v = 1;
            for (int i = 0; i < d.nbDims; i++)
                v *= (d.d[i] > 0 ? static_cast<size_t>(d.d[i]) : 1);
            return v;
        }

        const std::vector<std::string> CLASS_NAMES = { /* target classes to detect */
            "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
            "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse",
            "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie",
            "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",
            "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon",
            "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut",
            "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse",
            "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "book",
            "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"};

        const std::vector<std::vector<unsigned int>> COLORS_HEX = { /* a distinct color per class */
            {0x00, 0x72, 0xBD}, {0xD9, 0x53, 0x19}, {0xED, 0xB1, 0x20}, {0x7E, 0x2F, 0x8E}, {0x77, 0xAC, 0x30}, {0x4D, 0xBE, 0xEE},
            {0xA2, 0x14, 0x2F}, {0x4C, 0x4C, 0x4C}, {0x99, 0x99, 0x99}, {0xFF, 0x00, 0x00}, {0xFF, 0x80, 0x00}, {0xBF, 0xBF, 0x00},
            {0x00, 0xFF, 0x00}, {0x00, 0x00, 0xFF}, {0xAA, 0x00, 0xFF}, {0x55, 0x55, 0x00}, {0x55, 0xAA, 0x00}, {0x55, 0xFF, 0x00},
            {0xAA, 0x55, 0x00}, {0xAA, 0xAA, 0x00}, {0xAA, 0xFF, 0x00}, {0xFF, 0x55, 0x00}, {0xFF, 0xAA, 0x00}, {0xFF, 0xFF, 0x00},
            {0x00, 0x55, 0x80}, {0x00, 0xAA, 0x80}, {0x00, 0xFF, 0x80}, {0x55, 0x00, 0x80}, {0x55, 0x55, 0x80}, {0x55, 0xAA, 0x80},
            {0x55, 0xFF, 0x80}, {0xAA, 0x00, 0x80}, {0xAA, 0x55, 0x80}, {0xAA, 0xAA, 0x80}, {0xAA, 0xFF, 0x80}, {0xFF, 0x00, 0x80},
            {0xFF, 0x55, 0x80}, {0xFF, 0xAA, 0x80}, {0xFF, 0xFF, 0x80}, {0x00, 0x55, 0xFF}, {0x00, 0xAA, 0xFF}, {0x00, 0xFF, 0xFF},
            {0x55, 0x00, 0xFF}, {0x55, 0x55, 0xFF}, {0x55, 0xAA, 0xFF}, {0x55, 0xFF, 0xFF}, {0xAA, 0x00, 0xFF}, {0xAA, 0x55, 0xFF},
            {0xAA, 0xAA, 0xFF}, {0xAA, 0xFF, 0xFF}, {0xFF, 0x00, 0xFF}, {0xFF, 0x55, 0xFF}, {0xFF, 0xAA, 0xFF}, {0x55, 0x00, 0x00},
            {0x80, 0x00, 0x00}, {0xAA, 0x00, 0x00}, {0xD4, 0x00, 0x00}, {0xFF, 0x00, 0x00}, {0x00, 0x2B, 0x00}, {0x00, 0x55, 0x00},
            {0x00, 0x80, 0x00}, {0x00, 0xAA, 0x00}, {0x00, 0xD4, 0x00}, {0x00, 0xFF, 0x00}, {0x00, 0x00, 0x2B}, {0x00, 0x00, 0x55},
            {0x00, 0x00, 0x80}, {0x00, 0x00, 0xAA}, {0x00, 0x00, 0xD4}, {0x00, 0x00, 0xFF}, {0x00, 0x00, 0x00}, {0x24, 0x24, 0x24},
            {0x49, 0x49, 0x49}, {0x6D, 0x6D, 0x6D}, {0x92, 0x92, 0x92}, {0xB6, 0xB6, 0xB6}, {0xDB, 0xDB, 0xDB}, {0x00, 0x72, 0xBD},
            {0x50, 0xB7, 0xBD}, {0x80, 0x80, 0x00}};
    };

    #endif // TRTMODEL_HPP

This file declares the methods and variables used throughout the deployment. The model's input and output tensors are not hard-coded; they are discovered automatically through the TensorRT API.
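The automatic lookup boils down to two small ideas: compute a tensor's element count from its shape, and tell the detection output from the prototype-mask output by rank. The following sketch shows that logic in isolation, without TensorRT. `ShapeSketch`, `NamedTensor`, `OutputRoles`, and `assign_roles` are hypothetical names used only here, and the fallback path is simplified compared with the real class.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, simplified stand-in for nvinfer1::Dims.
using ShapeSketch = std::vector<long long>;

struct NamedTensor {
    std::string name;
    ShapeSketch shape;
};

struct OutputRoles {
    std::string detect;  // e.g. (N, boxes, attrs)
    std::string proto;   // e.g. (N, C, H, W)
};

// Element count of a tensor; non-positive dims count as 1, like TrtModel::volume.
std::size_t volume(const ShapeSketch& shape) {
    std::size_t v = 1;
    for (long long d : shape) v *= (d > 0 ? static_cast<std::size_t>(d) : 1);
    return v;
}

// The first 4-D output is treated as the prototype masks; the first remaining
// output is treated as the detection head. Anything else is ignored.
OutputRoles assign_roles(const std::vector<NamedTensor>& outputs) {
    assert(!outputs.empty());
    OutputRoles roles;
    for (const NamedTensor& t : outputs) {
        if (t.shape.size() == 4 && roles.proto.empty()) {
            roles.proto = t.name;
        } else if (roles.detect.empty()) {
            roles.detect = t.name;
        }
    }
    return roles;
}
```

With shapes such as (1, 25200, 117) and (1, 32, 160, 160), which are assumed here as typical for a 640×640, 80-class export, the 4-D tensor is picked as the prototype output regardless of the order in which the engine lists them, and its buffer size is `volume(shape) * sizeof(float)`.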

The TrtModel.cpp source is as follows:

    #include "TrtModel.hpp"

    #include <cstring>
    #include <memory>
    #include <stdexcept>
    #include <utility>
    #include <sys/stat.h>

    #include <NvInferPlugin.h>  // initLibNvInferPlugins

    // Returns true if the given path exists (POSIX stat).
    static inline bool file_exists(const std::string& name) {
        struct stat buffer{};
        return (stat(name.c_str(), &buffer) == 0);
    }

    TrtModel::TrtModel(std::string onnxfilepath, bool fp16)
        : onnx_file_path(std::move(onnxfilepath)), FP16(fp16)
    {
        // Derive the engine path from the ONNX path: foo.onnx -> foo.engine
        const auto idx = onnx_file_path.find(".onnx");
        const auto basename = onnx_file_path.substr(0, idx);
        m_enginePath = basename + ".engine";

        if (file_exists(m_enginePath)) {
            std::cout << "start building model from engine file: " << m_enginePath << std::endl;
        } else {
            std::cout << "start building model from onnx file: " << onnx_file_path << std::endl;
            if (!this->genEngine()) {
                throw std::runtime_error("failed to build engine from " + onnx_file_path);
            }
        }

        // Stop here on failure instead of dereferencing a null context later.
        if (!this->Runtime()) {
            throw std::runtime_error("failed to create TensorRT runtime from " + m_enginePath);
        }
        if (!this->trtIOMemory()) {
            throw std::runtime_error("failed to allocate TensorRT I/O buffers");
        }

    }

    bool TrtModel::genEngine() {
        // Print verbose logs while the model is being built
        sample::gLogger.setReportableSeverity(nvinfer1::ILogger::Severity::kVERBOSE);

        // Create the builder
        auto builder = make_nvshared(nvinfer1::createInferBuilder(sample::gLogger.getTRTLogger()));
        if (!builder) {
            std::cout << " (T_T)~~~, Failed to create builder." << std::endl;
            return false;
        }

        // Create the network. The explicit-batch flag is deprecated and ignored in
        // TensorRT 10 (networks are always explicit batch); it is kept for compatibility.
        const auto explicitBatch = 1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);
        auto network = make_nvshared(builder->createNetworkV2(explicitBatch));
        if (!network) {
            std::cout << " (T_T)~~~, Failed to create network." << std::endl;
            return false;
        }

        // Create the builder config
        auto config = make_nvshared(builder->createBuilderConfig());
        if (!config) {
            std::cout << " (T_T)~~~, Failed to create config." << std::endl;
            return false;
        }

        // Create the parser to build the network from ONNX automatically;
        // otherwise every layer would have to be constructed by hand.
        auto parser = make_nvshared(nvonnxparser::createParser(*network, sample::gLogger.getTRTLogger()));
        if (!parser) {
            std::cout << " (T_T)~~~, Failed to create parser." << std::endl;
            return false;
        }

        auto parsed = parser->parseFromFile(onnx_file_path.c_str(), static_cast<int>(sample::gLogger.getReportableSeverity()));
        if (!parsed) {
            std::cout << " (T_T)~~~ ,Failed to parse onnx file." << std::endl;
            return false;
        }

        auto profile = builder->createOptimizationProfile();
        config->addOptimizationProfile(profile);

        // Enable half-precision optimization if requested
        if (FP16) config->setFlag(nvinfer1::BuilderFlag::kFP16);

        // Enable DLA only when it is available
        const int numDLACores = builder->getNbDLACores();
        if (numDLACores > 0) {
            config->setDefaultDeviceType(nvinfer1::DeviceType::kDLA);
            config->setDLACore(0);
            config->setFlag(nvinfer1::BuilderFlag::kGPU_FALLBACK);
            std::cout << "[TRT] Using DLA core 0 with GPU fallback" << std::endl;
        } else {
            config->setDefaultDeviceType(nvinfer1::DeviceType::kGPU);
        }

        // Workspace limit (1 GiB); this is the API used by newer TensorRT versions
        config->setMemoryPoolLimit(nvinfer1::MemoryPoolType::kWORKSPACE, 1 << 30);

        auto profileStream = samplesCommon::makeCudaStream();
        if (!profileStream) {
            std::cout << " (T_T)~~~, Failed to makeCudaStream." << std::endl;
            return false;
        }
        config->setProfileStream(*profileStream);

        // Build the serialized engine
        auto plan = make_nvshared(builder->buildSerializedNetwork(*network, *config));
        if (!plan) {
            std::cout << " (T_T)~~~, Failed to SerializedNetwork." << std::endl;
            return false;
        }

        std::cout << "********************* check model input shape *********************" << std::endl;
        std::cout << "input number : " << network->getNbInputs() << std::endl;
        for (size_t i = 0; i < static_cast<size_t>(network->getNbInputs()); ++i) {
            auto mInputDims = network->getInput(i)->getDimensions();
            std::cout << " ✨~ model input dims: " << mInputDims.nbDims << std::endl;
            std::cout << " ✨^_^ model input dim : " << mInputDims << std::endl;
        }

        std::cout << "********************* check model output shape *********************" << std::endl;
        std::cout << "output number : " << network->getNbOutputs() << std::endl;
        for (size_t i = 0; i < static_cast<size_t>(network->getNbOutputs()); ++i) {
            auto mOutputDims = network->getOutput(i)->getDimensions();
            std::cout << " ✨~ model output dims: " << mOutputDims.nbDims << std::endl;
            std::cout << " ✨^_^ model output dim : " << mOutputDims << std::endl;
        }

        // Save the serialized engine to disk
        std::ofstream engine_file(m_enginePath, std::ios::binary);
        if (!engine_file.good()) {
            std::cout << " (T_T)~~~, Failed to open engine file" << std::endl;
            return false;
        }
        engine_file.write(static_cast<const char*>(plan->data()), plan->size());
        engine_file.close();

        std::cout << " ~~Congratulations! 🎉🎉🎉~ Engine build success!!! ✨✨✨~~ " << std::endl;
        return true;
    }

    std::vector<unsigned char> TrtModel::load_engine_file() {
        std::vector<unsigned char> engine_data;
        std::ifstream engine_file(m_enginePath, std::ios::binary);
        if (!engine_file.is_open()) {
            std::cerr << "[TRT] open engine failed" << std::endl;
            return engine_data;
        }
        engine_file.seekg(0, std::ios::end);
        const auto end_pos = engine_file.tellg();
        if (end_pos <= 0) {  // tellg() returns -1 on failure
            std::cerr << "[TRT] engine file is empty or unreadable" << std::endl;
            return engine_data;
        }
        const auto length = static_cast<size_t>(end_pos);
        engine_data.resize(length);
        engine_file.seekg(0, std::ios::beg);
        engine_file.read(reinterpret_cast<char*>(engine_data.data()), length);
        return engine_data;

    }

    bool TrtModel::Runtime() {
        initLibNvInferPlugins(&sample::gLogger.getTRTLogger(), "");

        auto plan = load_engine_file();
        if (plan.empty()) { std::cerr << "engine file could not be loaded" << std::endl; return false; }

        sample::setReportableSeverity(sample::Severity::kINFO);

        m_runtime = make_nvshared(nvinfer1::createInferRuntime(sample::gLogger.getTRTLogger()));
        if (!m_runtime) { std::cerr << "create runtime failed" << std::endl; return false; }

        m_engine = make_nvshared(m_runtime->deserializeCudaEngine(plan.data(), plan.size()));
        if (!m_engine) { std::cerr << "deserialize failed" << std::endl; return false; }

        // Discover the input and output tensors by name
        const int nbIOTensors = m_engine->getNbIOTensors();
        for (int i = 0; i < nbIOTensors; ++i) {
            const char* name = m_engine->getIOTensorName(i);
            bool isInput = m_engine->getTensorIOMode(name) == nvinfer1::TensorIOMode::kINPUT;
            auto dims = m_engine->getTensorShape(name);
            auto dtype = m_engine->getTensorDataType(name);
            std::cout << "[TRT] Tensor[" << i << "] " << name << ", isInput=" << isInput
                      << ", dims=" << dims << ", dtype=" << static_cast<int>(dtype) << std::endl;
            if (isInput) {
                m_inputName = name;
            } else {
                m_outputNames.push_back(name);
            }
        }

        if (m_outputNames.empty()) {
            std::cerr << "No output tensors found" << std::endl;
            return false;
        }

        m_context = make_nvshared(m_engine->createExecutionContext());
        if (!m_context) { std::cerr << "create context failed" << std::endl; return false; }

        CUDA_CHECK(cudaStreamCreateWithFlags(&m_stream, cudaStreamNonBlocking));

        std::cout << "runtime ready" << std::endl;
        return true;

    }

    bool TrtModel::trtIOMemory() {
        // Input dims: query using tensor name
        m_inputDims = m_context->getTensorShape(m_inputName.c_str());

        // Input H/W
        if (m_inputDims.nbDims >= 4) {
            // assume dims are N,C,H,W
            kInputH = m_inputDims.d[2];
            kInputW = m_inputDims.d[3];
        } else if (m_inputDims.nbDims == 3) {
            // maybe C,H,W
            kInputH = m_inputDims.d[1];
            kInputW = m_inputDims.d[2];
        } else {
            std::cerr << "Unsupported input dim layout" << std::endl;
            return false;
        }

        m_imgArea = kInputH * kInputW;
        m_inputSize = static_cast<int>(TrtModel::volume(m_inputDims) * sizeof(float));

        // Determine which output tensor corresponds to detection and which to protos (segment)
        std::string detectTensorName;
        std::string segmentTensorName;

        if (m_outputNames.size() == 1) {
            // only one output -> assume it's detection
            detectTensorName = m_outputNames[0];
        } else {
            // try to distinguish by nbDims
            auto dims0 = m_context->getTensorShape(m_outputNames[0].c_str());
            auto dims1 = m_context->getTensorShape(m_outputNames[1].c_str());
            if (dims0.nbDims == 4) {  // protos typically 4-D (N, C, H, W)
                segmentTensorName = m_outputNames[0];
                detectTensorName = m_outputNames[1];
            } else if (dims1.nbDims == 4) {
                segmentTensorName = m_outputNames[1];
                detectTensorName = m_outputNames[0];
            } else {
                // fallback: first -> detect, second -> segment
                detectTensorName = m_outputNames[0];
                segmentTensorName = (m_outputNames.size() > 1 ? m_outputNames[1] : "");
            }
        }

        if (!detectTensorName.empty()) {
            m_detectDims = m_context->getTensorShape(detectTensorName.c_str());
            m_detectSize = static_cast<int>(TrtModel::volume(m_detectDims) * sizeof(float));
        }
        if (!segmentTensorName.empty()) {
            m_segmentDims = m_context->getTensorShape(segmentTensorName.c_str());
            m_segmentSize = static_cast<int>(TrtModel::volume(m_segmentDims) * sizeof(float));
        }

        // Allocate host/device memory
        CUDA_CHECK(cudaMallocHost(&m_input_host_memory, m_inputSize));
        CUDA_CHECK(cudaMalloc(&m_input_device_memory, m_inputSize));

        if (m_detectSize > 0) { CUDA_CHECK(cudaMallocHost(&m_output_host_memory[0], m_detectSize)); }
        if (m_segmentSize > 0) { CUDA_CHECK(cudaMallocHost(&m_output_host_memory[1], m_segmentSize)); }
        if (m_detectSize > 0) { CUDA_CHECK(cudaMalloc(&m_output_device_memory[0], m_detectSize)); }
        if (m_segmentSize > 0) { CUDA_CHECK(cudaMalloc(&m_output_device_memory[1], m_segmentSize)); }

        // Set tensor addresses in context (once, since buffers are fixed)
        m_context->setTensorAddress(m_inputName.c_str(), m_input_device_memory);
        if (!detectTensorName.empty()) m_context->setTensorAddress(detectTensorName.c_str(), m_output_device_memory[0]);
        if (!segmentTensorName.empty()) m_context->setTensorAddress(segmentTensorName.c_str(), m_output_device_memory[1]);

        std::cout << "after optimizer input shape: " << m_context->getTensorShape(m_inputName.c_str()) << std::endl;
        if (!segmentTensorName.empty()) std::cout << "after optimizer segment shape: " << m_context->getTensorShape(segmentTensorName.c_str()) << std::endl;
        if (!detectTensorName.empty()) std::cout << "after optimizer detect shape: " << m_context->getTensorShape(detectTensorName.c_str()) << std::endl;

        // Create CUDA Graph
        m_useGraph = createCudaGraph();
        if (m_useGraph) {
            std::cout << "CUDA Graph created successfully." << std::endl;
        } else {
            std::cout << "Failed to create CUDA Graph, falling back to enqueueV3." << std::endl;
        }

        return true;

    }

    bool TrtModel::createCudaGraph() {
        cudaGraph_t graph = nullptr;
        cudaError_t err;

        // Run one ordinary inference before capturing, so that any deferred
        // internal updates in the execution context happen outside the capture.
        if (!m_context->enqueueV3(m_stream)) {
            std::cerr << "enqueueV3 failed before graph capture." << std::endl;
            return false;
        }

        // Synchronize stream before capture
        err = cudaStreamSynchronize(m_stream);
        if (err != cudaSuccess) {
            std::cerr << "cudaStreamSynchronize failed: " << cudaGetErrorString(err) << std::endl;
            return false;
        }

        // Begin capture
        err = cudaStreamBeginCapture(m_stream, cudaStreamCaptureModeGlobal);
        if (err != cudaSuccess) {
            std::cerr << "cudaStreamBeginCapture failed: " << cudaGetErrorString(err) << std::endl;
            return false;
        }

        // Enqueue the inference
        bool status = m_context->enqueueV3(m_stream);
        if (!status) {
            std::cerr << "enqueueV3 failed during graph capture." << std::endl;
            cudaStreamEndCapture(m_stream, nullptr);
            return false;
        }

        // End capture
        err = cudaStreamEndCapture(m_stream, &graph);
        if (err != cudaSuccess) {
            std::cerr << "cudaStreamEndCapture failed: " << cudaGetErrorString(err) << std::endl;
            return false;
        }

        // Instantiate the graph
        err = cudaGraphInstantiate(&m_graphExec, graph, nullptr, nullptr, 0);
        cudaGraphDestroy(graph);
        if (err != cudaSuccess) {
            std::cerr << "cudaGraphInstantiate failed: " << cudaGetErrorString(err) << std::endl;
            return false;
        }

        return true;

    }

    void TrtModel::preprocess(cv::Mat srcimg)
    {
        // Reject empty frames before doing any work
        if (srcimg.empty()) { std::cerr << "ERROR: Image is empty!\n"; return; }

        // 1) Compute the letterbox affine matrix (image -> network input) and its inverse
        const float r = std::min(kInputW / (float)srcimg.cols, kInputH / (float)srcimg.rows);
        const float dx = (kInputW - r * srcimg.cols) * 0.5f;
        const float dy = (kInputH - r * srcimg.rows) * 0.5f;

        i2d[0] = r;    i2d[1] = 0.0f; i2d[2] = dx;
        i2d[3] = 0.0f; i2d[4] = r;    i2d[5] = dy;

        cv::Mat m2x3_i2d(2, 3, CV_32F, i2d);
        cv::Mat m2x3_d2i(2, 3, CV_32F, d2i);
        cv::invertAffineTransform(m2x3_i2d, m2x3_d2i);

        // 2) Reuse a static buffer to avoid allocating every frame
        //    (it could also be a class member allocated once)
        static thread_local cv::Mat letterbox;  // CV_8UC3
        letterbox.create(kInputH, kInputW, CV_8UC3);
        cv::warpAffine(srcimg, letterbox, m2x3_i2d, letterbox.size(),
                       cv::INTER_LINEAR, cv::BORDER_CONSTANT, cv::Scalar::all(0));

        // 3) BGR -> RGB, uint8 -> float, normalized to [0, 1]
        static thread_local cv::Mat rgb8, rgb32f;
        cv::cvtColor(letterbox, rgb8, cv::COLOR_BGR2RGB);
        rgb8.convertTo(rgb32f, CV_32F, 1.0 / 255.0);

        // 4) Split into planes and memcpy each plane into the pinned host buffer (NCHW)
        cv::Mat planes[3];
        cv::split(rgb32f, planes);

        const size_t planeBytes = (size_t)m_imgArea * sizeof(float);
        float* dst = m_input_host_memory;
        std::memcpy(dst + 0 * m_imgArea, planes[0].ptr<float>(), planeBytes);  // R
        std::memcpy(dst + 1 * m_imgArea, planes[1].ptr<float>(), planeBytes);  // G
        std::memcpy(dst + 2 * m_imgArea, planes[2].ptr<float>(), planeBytes);  // B

        // 5) Host-to-device copy (still asynchronous)
        CUDA_CHECK(cudaMemcpyAsync(m_input_device_memory, m_input_host_memory,
                                   m_inputSize, cudaMemcpyHostToDevice, m_stream));
    }

    // Apply a row-major 2x3 affine matrix M to the point (x, y).
    static inline void affine_project(const float* M, float x, float y, float& ox, float& oy) {
        ox = M[0]*x + M[1]*y + M[2];
        oy = M[3]*x + M[4]*y + M[5];

    }

    std::vector<segmentRes> TrtModel::segment_postprocess(cv::Mat& frame) {
        std::vector<int> classIds;        // class id of each candidate
        std::vector<float> confidences;   // confidence of each candidate
        std::vector<cv::Rect> boxes;      // decoded box of each candidate
        // mask coefficients of each candidate: output0[:, :, 5 + num_classes : m_detectDims.d[2]]
        std::vector<std::vector<float>> picked_proposals;

        this->preprocess(frame);

        if (m_useGraph) {
            CUDA_CHECK(cudaGraphLaunch(m_graphExec, m_stream));
        } else {
            bool status = this->m_context->enqueueV3(m_stream);
            if (!status) {
                std::cout << "(T_T)~~~, Failed to execute inference, Please check your input and output." << std::endl;
            }
        }

        CUDA_CHECK(cudaMemcpyAsync(m_output_host_memory[0], m_output_device_memory[0], m_detectSize, cudaMemcpyDeviceToHost, m_stream));   // det
        CUDA_CHECK(cudaMemcpyAsync(m_output_host_memory[1], m_output_device_memory[1], m_segmentSize, cudaMemcpyDeviceToHost, m_stream));  // proto
        CUDA_CHECK(cudaStreamSynchronize(m_stream));

        // step 1: decode the bounding boxes
        float* pdata = m_output_host_memory[0];
        const float r = this->i2d[0];  // scale factor (assumed isotropic)
        const int numBoxes = static_cast<int>(m_detectDims.d[1]);
        const int numAttrs = static_cast<int>(m_detectDims.d[2]);
        const int numCoeffs = static_cast<int>(m_segmentDims.d[1]);

        for (int j = 0; j < numBoxes; ++j) {
            float box_score = pdata[4];  // objectness of this candidate
            if (box_score >= this->kConfThresh) {
                cv::Mat scores(1, numAttrs - 5 - numCoeffs, CV_32FC1, pdata + 5);
                cv::Point classIdPoint;
                double max_class_score;
                cv::minMaxLoc(scores, nullptr, &max_class_score, nullptr, &classIdPoint);

                if (max_class_score >= this->kConfThresh) {
                    std::vector<float> temp_proto(pdata + numAttrs - numCoeffs, pdata + numAttrs);
                    picked_proposals.push_back(temp_proto);

                    float cx = pdata[0];
                    float cy = pdata[1];
                    float w = pdata[2];
                    float h = pdata[3];

                    // Map the box center back to the original image; scale the size by 1/r
                    float cx_orig, cy_orig;
                    affine_project(this->d2i, cx, cy, cx_orig, cy_orig);
                    float w_orig = w / r;
                    float h_orig = h / r;

                    int left = std::max(static_cast<int>(cx_orig - 0.5f * w_orig), 0);
                    int top = std::max(static_cast<int>(cy_orig - 0.5f * h_orig), 0);

                    classIds.push_back(classIdPoint.x);
                    confidences.push_back(static_cast<float>(max_class_score) * box_score);
                    boxes.push_back(cv::Rect(left, top, static_cast<int>(w_orig), static_cast<int>(h_orig)));
                }
            }
            pdata += numAttrs;
        }

        // Run NMS on the candidate boxes
        std::vector<int> nms_result;  // indices of the boxes that survive
        cv::dnn::NMSBoxes(boxes, confidences, this->kConfThresh, this->kNmsThresh, nms_result);

        std::vector<std::vector<float>> temp_mask_proposals;
        cv::Rect holeImgRect(0, 0, frame.cols, frame.rows);
        std::vector<segmentRes> output;  // final results

        std::mt19937 gen{std::random_device{}()};
        std::uniform_int_distribution<int> dis(80, 180);

        for (size_t i = 0; i < nms_result.size(); ++i) {
            int idx = nms_result[i];
            segmentRes result;
            result.label = classIds[idx];
            result.confidence = confidences[idx];
            result.box = boxes[idx] & holeImgRect;  // clip to the image
            result.box_color = cv::Scalar(dis(gen), dis(gen), dis(gen));
            output.push_back(result);
            temp_mask_proposals.push_back(picked_proposals[idx]);
        }

        // step 2: decode the mask maps
        pdata = m_output_host_memory[1];
        if (!temp_mask_proposals.empty()) {
            cv::Mat maskProposals;
            for (size_t i = 0; i < temp_mask_proposals.size(); ++i) {
                maskProposals.push_back(cv::Mat(temp_mask_proposals[i]).t());
            }

            std::vector<float> mask(pdata, pdata + static_cast<size_t>(m_segmentDims.d[0] * m_segmentDims.d[1] * m_segmentDims.d[2] * m_segmentDims.d[3]));
            cv::Mat mask_protos = cv::Mat(mask);
            cv::Mat protos = mask_protos.reshape(0, { static_cast<int>(m_segmentDims.d[1]), static_cast<int>(m_segmentDims.d[2] * m_segmentDims.d[3]) });

            // (n x 32) * (32 x 25600), then one channel per instance
            cv::Mat matmulRes = (maskProposals * protos).t();
            cv::Mat masks = matmulRes.reshape(static_cast<int>(output.size()), { static_cast<int>(m_segmentDims.d[2]), static_cast<int>(m_segmentDims.d[3]) });

            std::vector<cv::Mat> maskChannels;
            cv::split(masks, maskChannels);

            // ROI of the mask without the letterbox padding
            float dx = this->i2d[2];
            float dy = this->i2d[5];
            float mask_w = static_cast<float>(m_segmentDims.d[3]);
            float mask_h = static_cast<float>(m_segmentDims.d[2]);
            float input_w = static_cast<float>(this->kInputW);
            float input_h = static_cast<float>(this->kInputH);

            float mask_padw = dx / input_w * mask_w;
            float mask_padh = dy / input_h * mask_h;
            float mask_neww = (input_w - 2.0f * dx) / input_w * mask_w;
            float mask_newh = (input_h - 2.0f * dy) / input_h * mask_h;

            cv::Rect roi(static_cast<int>(mask_padw + 0.5f),
                         static_cast<int>(mask_padh + 0.5f),
                         static_cast<int>(mask_neww + 0.5f),
                         static_cast<int>(mask_newh + 0.5f));
            // Keep the ROI inside the prototype map after rounding
            roi &= cv::Rect(0, 0, static_cast<int>(mask_w), static_cast<int>(mask_h));

            for (size_t i = 0; i < output.size(); ++i) {
                cv::Mat dest, mask;

                // sigmoid
                cv::exp(-maskChannels[i], dest);
                dest = 1.0 / (1.0 + dest);  // 160 x 160

                dest = dest(roi);
                // The 4th and 5th arguments of cv::resize are fx and fy, so the
                // interpolation flag must be passed as the 6th argument.
                cv::resize(dest, mask, frame.size(), 0, 0, cv::INTER_LINEAR);

                // Crop to the box and keep pixels above the mask threshold
                cv::Rect temp_rect = output[i].box;
                mask = mask(temp_rect) > this->kMaskThresh;

                output[i].boxMask = mask;
                output[i].seg_color = cv::Scalar(dis(gen), dis(gen), dis(gen));
            }
        }

        return output;

    }

    bool TrtModel::seg_drawResult(cv::Mat& img, const std::vector<segmentRes>& result) {
        // Reuse a static buffer for temporary mask processing
        static thread_local cv::Mat temp_mask;

        for (const auto& seg : result) {
            // Draw bounding box directly on the input image
            cv::rectangle(img, seg.box, seg.box_color, 2);

            if (seg.boxMask.empty()) continue;

            // Ensure temp_mask is the right size and type for the box ROI
            temp_mask.create(seg.box.height, seg.box.width, img.type());
            temp_mask.setTo(cv::Scalar(0, 0, 0));

            // Paint the segmentation color where the mask is set
            temp_mask.setTo(seg.seg_color, seg.boxMask);

            // Blend the mask onto the ROI of the input image
            cv::Mat roi = img(seg.box);
            cv::addWeighted(roi, 0.7, temp_mask, 0.3, 0, roi);
        }

        return true;  // always succeeds; the return value is kept for callers that want a status
    }

    TrtModel::~TrtModel()
    {
        if (m_graphExec) { cudaGraphExecDestroy(m_graphExec); m_graphExec = nullptr; }
        if (m_stream) { cudaStreamDestroy(m_stream); m_stream = nullptr; }

        if (m_input_host_memory) { cudaFreeHost(m_input_host_memory); m_input_host_memory = nullptr; }
        if (m_input_device_memory) { cudaFree(m_input_device_memory); m_input_device_memory = nullptr; }

        for (auto &h : m_output_host_memory) {
            if (h) { cudaFreeHost(h); h = nullptr; }
        }
        for (auto &d : m_output_device_memory) {
            if (d) { cudaFree(d); d = nullptr; }
        }

        m_context.reset();
        m_engine.reset();
        m_runtime.reset();
    }

    //==========================================================================================
    // ------------------------ utility: optimizeContoursAndCalculateArea ------------------------

    // Smooth a binary mask and return the total area of its external contours.
    double optimizeContoursAndCalculateArea(const cv::Mat& temp) {
        if (temp.empty()) return 0.0;  // instances without a mask have no area

        cv::Mat filledMask;
        cv::morphologyEx(temp, filledMask, cv::MORPH_CLOSE, cv::getStructuringElement(cv::MORPH_RECT, cv::Size(5, 5)));

        cv::Mat smoothedMask;
        cv::GaussianBlur(filledMask, smoothedMask, cv::Size(5, 5), 0);

        std::vector<std::vector<cv::Point>> contours;
        std::vector<cv::Vec4i> hierarchy;
        cv::findContours(smoothedMask, contours, hierarchy, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);

        double totalArea = 0.0;
        for (const auto& contour : contours) {
            totalArea += cv::contourArea(contour);
        }
        return totalArea;
    }

    // Convert segmentation results into the project-specific DetectInfo records.
    std::vector<DetectInfo> format_OutputSeg_DetectInfo(std::vector<segmentRes> &segResults)
    {
        std::vector<DetectInfo> detectInfos;
        if (segResults.empty()) return detectInfos;

        for (const auto& seg : segResults) {
            DetectInfo detectinfo;
            detectinfo.classID = seg.label;
            detectinfo.bbox = seg.box;
            detectinfo.area = optimizeContoursAndCalculateArea(seg.boxMask);
            detectinfo.confidence = seg.confidence;
            detectInfos.push_back(detectinfo);
        }
        return detectInfos;
    }

The constructor first looks for an engine file. If none exists, genEngine builds one from the ONNX model. Runtime then deserializes the engine and creates the execution context, and trtIOMemory allocates the input and output buffers for inference. For each frame, segment_postprocess preprocesses the input image, runs inference, and postprocesses the results.
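The preprocessing and box decoding both depend on the letterbox transform and its inverse. The following is a minimal sketch of that arithmetic in plain C++ with no OpenCV dependency. `Affine`, `letterbox_matrix`, `invert_letterbox`, and `project` are names made up for this illustration, and the closed-form inverse assumes a pure uniform scale plus translation, which is what the letterbox matrix is.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

// Row-major 2x3 affine matrix: [a b tx; c d ty]
using Affine = std::array<float, 6>;

// Image -> network input: uniform scale r, then center with padding (dx, dy).
Affine letterbox_matrix(int src_w, int src_h, int dst_w, int dst_h) {
    assert(src_w > 0 && src_h > 0);
    const float r = std::min(dst_w / static_cast<float>(src_w),
                             dst_h / static_cast<float>(src_h));
    const float dx = (dst_w - r * src_w) * 0.5f;
    const float dy = (dst_h - r * src_h) * 0.5f;
    return {r, 0.0f, dx, 0.0f, r, dy};
}

// Network input -> image. Valid only for a scale-plus-translation matrix.
Affine invert_letterbox(const Affine& m) {
    const float inv = 1.0f / m[0];
    return {inv, 0.0f, -m[2] * inv, 0.0f, inv, -m[5] * inv};
}

// Apply the affine matrix to a point.
void project(const Affine& m, float x, float y, float& ox, float& oy) {
    ox = m[0] * x + m[1] * y + m[2];
    oy = m[3] * x + m[4] * y + m[5];
}
```

For a 1280×720 frame and a 640×640 network input, the scale is 0.5 and the vertical padding is 140 pixels, so the center of the network input, (320, 320), maps back to the center of the frame, (640, 360). Box widths and heights need only the division by the scale, which is why the postprocessing code uses `w / r` and `h / r`.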

The code that uses the interface is as follows:

    #include <chrono>
    #include <iomanip>
    #include <iostream>
    #include <sstream>
    #include <string>

    // #include "BYTETracker.h"
    #include "TrtModel.hpp"

    int main() {
        static TrtModel trtmodel("weights/yolov5s-seg1.onnx", true);

        cv::VideoCapture cap("media/123.mp4");

        // Check that the video was opened successfully
        if (!cap.isOpened()) {
            std::cerr << "Error: Could not open video file.\n";
            return -1;
        }

        cv::Size frameSize(static_cast<int>(cap.get(cv::CAP_PROP_FRAME_WIDTH)),
                           static_cast<int>(cap.get(cv::CAP_PROP_FRAME_HEIGHT)));

        // Get the frame rate
        double video_fps = cap.get(cv::CAP_PROP_FPS);
        std::cout << "width: " << frameSize.width << " height: " << frameSize.height << " fps: " << video_fps << std::endl;

        cv::Mat frame;
        int frame_nums = 0;

        // Read and display frames until the video ends
        while (cap.read(frame)) {
            auto start = std::chrono::high_resolution_clock::now();

            auto output = trtmodel.segment_postprocess(frame);
            trtmodel.seg_drawResult(frame, output);

            // The count shown here is a hard-coded placeholder
            cv::putText(frame, "duck_nums: " + std::to_string(123), cv::Point(10, 100),
                        cv::FONT_HERSHEY_SIMPLEX, 3, cv::Scalar(0, 0, 255), 5);

            // Measure the time spent on this frame
            auto end = std::chrono::high_resolution_clock::now();
            double duration_ms = std::chrono::duration<double, std::milli>(end - start).count();
            double fps = 1000.0 / duration_ms;

            // Format the FPS text
            std::stringstream ss;
            ss << "FPS: " << std::fixed << std::setprecision(2) << fps;
            std::string fps_text = ss.str();

            // Draw the FPS on the frame
            cv::putText(frame, fps_text, cv::Point(200, 200), cv::FONT_HERSHEY_DUPLEX, 3, cv::Scalar(0, 255, 0), 2, 0);
            std::cout << "--fps-- " << fps_text << std::endl;

            // Show the processed frame
            cv::imshow("Processed-trans-Video", frame);

            // Save the frame; the ./111/ directory must already exist
            frame_nums += 1;
            std::string filename = "./111/" + std::to_string(frame_nums) + ".jpg";
            cv::imwrite(filename, frame);

            if (cv::waitKey(25) == 27) {  // Esc
                break;
            }
        }

        // Release the capture object and close all windows
        cap.release();
        cv::destroyAllWindows();

        return 0;
    }

Using the deployment code is simple: instantiate the class, which loads the model, and you can start your detection task.

My CMakeLists.txt is as follows:

    cmake_minimum_required(VERSION 3.11)
    project(CountObj LANGUAGES CXX)

    set(CMAKE_CXX_STANDARD 17)
    list(APPEND CMAKE_MODULE_PATH "${CMAKE_CURRENT_SOURCE_DIR}")

    # CUDA configuration
    set(CMAKE_CUDA_COMPILER /usr/local/cuda-12.3/bin/nvcc)
    enable_language(CUDA)

    # Find and include Eigen3
    if(NOT EIGEN3_FOUND)
        set(EIGEN3_INCLUDE_DIR "/usr/include/eigen3")
        include_directories(${EIGEN3_INCLUDE_DIR})
    endif()

    # Sources and headers in the bytetrack folder
    file(GLOB BYTE_TRACK_SOURCES "bytetrack/*.cpp")
    file(GLOB BYTE_TRACK_HEADERS "bytetrack/*.h")

    # Sources and headers in the src folder
    file(GLOB SRC_CPP "src/*.cpp")
    file(GLOB SRC_HPP "src/*.hpp")

    # OpenCV
    find_package(OpenCV REQUIRED)

    # Include directories
    include_directories(
        /usr/local/cuda-12.3/include                          # CUDA
        /opt/TensorRT/TensorRT-10.10.0.31/include/            # TensorRT
        /opt/TensorRT/TensorRT-10.10.0.31/samples/common      # logger from the TensorRT samples
        /opt/TensorRT/TensorRT-10.10.0.31/samples
        ${CMAKE_CURRENT_SOURCE_DIR}/bytetrack                 # bytetrack folder
        ${CMAKE_CURRENT_SOURCE_DIR}/src                       # src folder
        ${EIGEN3_INCLUDE_DIR}                                 # Eigen headers
    )

    # Link directories
    link_directories(
        /usr/local/cuda-12.3/lib64
        /opt/TensorRT/TensorRT-10.10.0.31/lib/
        /usr/local/lib
    )

    add_executable(build
        main11.cpp
        /opt/TensorRT/TensorRT-10.10.0.31/samples/common/logger.cpp
        ${BYTE_TRACK_SOURCES}
        ${SRC_CPP}
    )

    # CUDA architectures
    set_target_properties(build PROPERTIES
        CUDA_ARCHITECTURES "61;70;75;89"
        CUDA_SEPARABLE_COMPILATION ON
    )

    # Libraries to link
    target_link_libraries(build PRIVATE
        # OpenCV
        ${OpenCV_LIBS}
        # TensorRT
        nvinfer nvinfer_plugin nvonnxparser
        # CUDA
        cudart
    )

    # Additional compile options (optional)
    target_compile_options(build PRIVATE
        -Wall
        -Wno-deprecated-declarations
        -O2
    )

Dynamic-shape inference is not supported; the batch size is fixed.
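Because every buffer is sized once from the engine's tensor shapes, an engine exported with dynamic axes would not work with this code as written. TensorRT reports a dynamic dimension as -1, and the `volume` helper counts any non-positive dimension as 1, so the buffers would silently come out too small. A guard along the following lines could reject such engines early. This is only a sketch: `has_dynamic_dim` is a hypothetical helper, and a plain vector stands in for `nvinfer1::Dims`.

```cpp
#include <cassert>
#include <vector>

// Returns true if any dimension is negative, which is how a dynamic
// (not yet specified) dimension shows up in a tensor shape.
// The std::vector parameter is a stand-in for nvinfer1::Dims.
bool has_dynamic_dim(const std::vector<long long>& shape) {
    assert(!shape.empty());
    for (long long d : shape) {
        if (d < 0) return true;
    }
    return false;
}
```

A call such as `has_dynamic_dim({-1, 3, 640, 640})` flags a dynamic batch axis, while `{1, 3, 640, 640}` passes. In the real class the same check could run on the input shape at the start of trtIOMemory, before any memory is allocated.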

The complete code is available through the cloud drive share below.

Shared file: trt10_yolov5_segment_basic_demo.zip

Link: https://pan.baidu.com/s/1f4RAZYFEbldbk4TBYjbsHQ?pwd=phac Extraction code: phac
