Python-LLMChat


Chat with a large language model from Python, implemented four ways: with [requests], [FastAPI], [Flask] and [LangChain], with POSTMAN request examples. Code repository: https://gitee.com/enzoism/python_llm_chat

Install the dependencies:

```bash
pip install -r requirements.txt
```

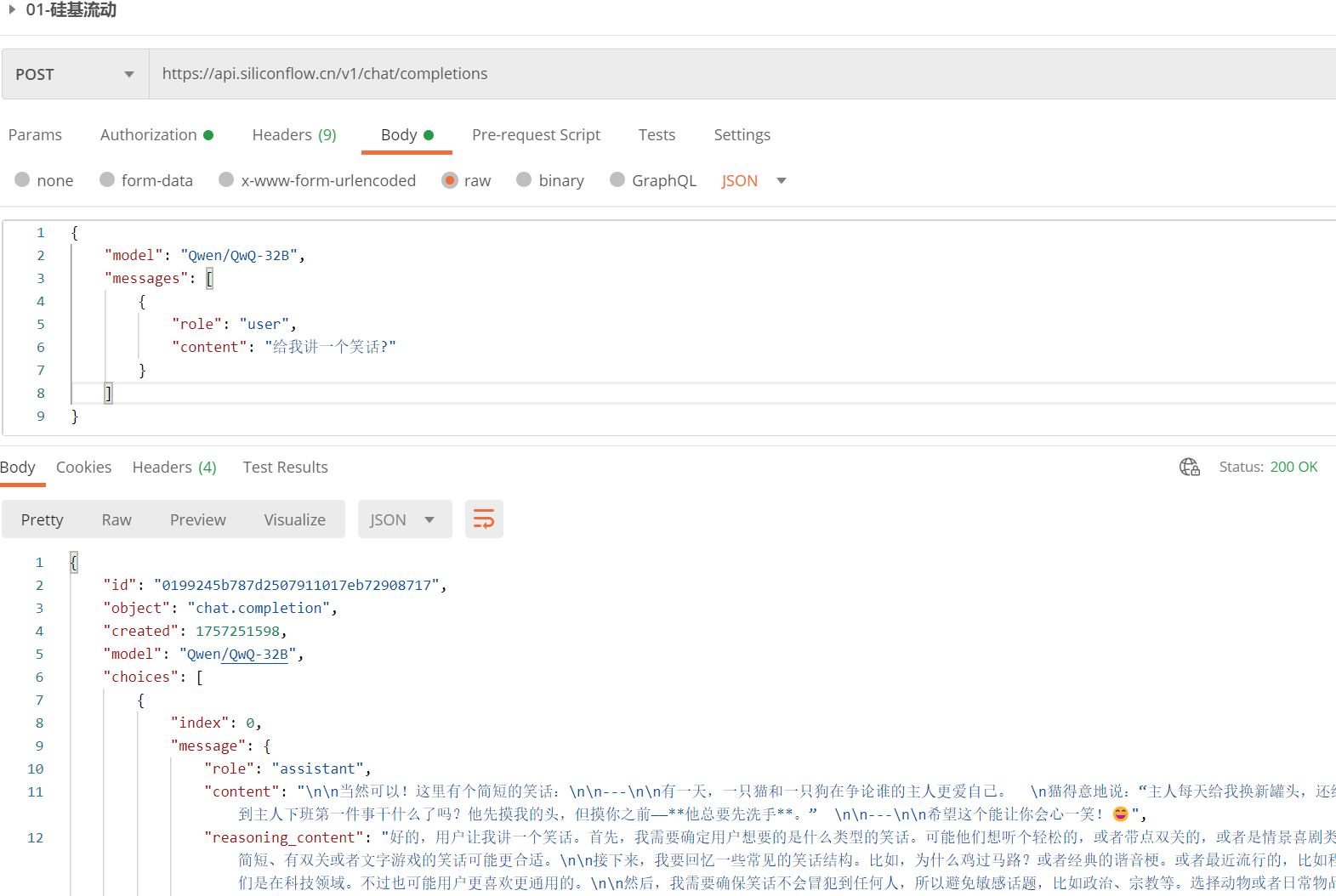

1-Raw HTTP request with requests

A plain HTTP request, the same kind you could also send from Postman:

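```python
import json
import os

import requests

url = "https://api.siliconflow.cn/v1/chat/completions"
# Read the key from an environment variable rather than hard-coding it
# (SILICONFLOW_API_KEY is our naming choice, not something the API requires)
API_KEY = os.environ["SILICONFLOW_API_KEY"]

payload = {
    "model": "Qwen/QwQ-32B",
    "messages": [{"role": "user", "content": "Tell me a joke."}],
}
headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

# QwQ-32B is a reasoning model and can be slow, so allow a generous timeout
response = requests.post(url, json=payload, headers=headers, timeout=120)
response.raise_for_status()  # fail loudly on HTTP errors (bad key, unknown model, ...)

json_data = response.json()
print(json_data)
# Pretty-print the JSON (indented, with non-ASCII characters shown as-is)
print(json.dumps(json_data, indent=4, ensure_ascii=False))
```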
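The chat-history services below read the reply from `choices[0].message.content` of this JSON. A minimal sketch of that step as a standalone function, assuming the OpenAI-compatible response shape; `extract_reply` is a hypothetical helper name and the sample body is hand-written, not real API output.

```python
from typing import Any, Dict


def extract_reply(json_data: Dict[str, Any]) -> str:
    """Return the assistant's text from an OpenAI-style chat completion body.

    Hypothetical helper: assumes the body looks like
    {"choices": [{"message": {"role": "assistant", "content": "..."}}]}.
    """
    choices = json_data.get("choices") or []
    if not choices:
        # A body without "choices" (e.g. an error body) has no reply to extract
        raise ValueError(f"No choices in response: {json_data}")
    return choices[0]["message"]["content"]


# Usage with a hand-written sample body (not real API output)
sample = {"choices": [{"message": {"role": "assistant", "content": "Why did the chicken cross the road?"}}]}
print(extract_reply(sample))
```

Raising on a missing `choices` key gives a clearer error than the bare `KeyError` that direct indexing would produce.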

2-Raw request with chat history - FastAPI
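The chat completions API is stateless: each call must resend the earlier turns. The services in this and the following sections therefore keep one message list per `user_id` and append a user entry and an assistant entry on every turn. A minimal standard-library sketch of that bookkeeping, with a stub in place of the real API call; `chat_turn` and `fake_model` are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def chat_turn(store: Dict[str, List[Message]], user_id: str, text: str,
              model: Callable[[List[Message]], str]) -> str:
    """Append the user's message, send the whole history to `model`, store the reply."""
    history = store.setdefault(user_id, [])
    history.append({"role": "user", "content": text})
    reply = model(history)  # the model sees every earlier turn, not just `text`
    history.append({"role": "assistant", "content": reply})
    return reply


def fake_model(messages: List[Message]) -> str:
    """Stub standing in for the real API: reports how many messages it received."""
    return f"seen {len(messages)} messages"


store: Dict[str, List[Message]] = {}
print(chat_turn(store, "alice", "Tell me a joke.", fake_model))  # seen 1 messages
print(chat_turn(store, "alice", "Another one?", fake_model))     # seen 3 messages
print([m["role"] for m in store["alice"]])
# ['user', 'assistant', 'user', 'assistant']
```

Because the whole list is resent each time, request size grows with every turn until the history is cleared.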

```python
import os
from typing import Dict, List

import requests
import uvicorn
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

app = FastAPI(title="Chat History Management API", version="1.0.0")

# API configuration
API_URL = "https://api.siliconflow.cn/v1/chat/completions"
# Read the key from an environment variable (assumed name) instead of hard-coding it
API_KEY = os.environ["SILICONFLOW_API_KEY"]

# In-memory store standing in for a database: user_id -> list of messages
user_chat_history: Dict[str, List[Dict[str, str]]] = {}


class ChatRequest(BaseModel):
    user_id: str
    message: str


class ChatResponse(BaseModel):
    user_id: str
    response: str
    chat_history: List[Dict[str, str]]


def get_ai_response(messages: List[Dict[str, str]]) -> str:
    """Send the full message history to the model and return the reply text."""
    payload = {"model": "Qwen/QwQ-32B", "messages": messages}
    headers = {"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"}
    try:
        response = requests.post(API_URL, json=payload, headers=headers, timeout=120)
        response.raise_for_status()
        return response.json()["choices"][0]["message"]["content"]
    except (requests.exceptions.RequestException, KeyError, IndexError) as e:
        raise HTTPException(status_code=500, detail=f"AI model call failed: {e}")


# Plain `def` instead of `async def`: requests is blocking, and FastAPI runs
# sync endpoints in a thread pool so the event loop is not blocked.
@app.post("/chat", response_model=ChatResponse)
def chat_endpoint(chat_request: ChatRequest):
    """Handle a chat message from a user."""
    user_id = chat_request.user_id
    # Get (or create) this user's history, then add the user's message
    history = user_chat_history.setdefault(user_id, [])
    history.append({"role": "user", "content": chat_request.message})
    try:
        ai_response = get_ai_response(history)
    except HTTPException:
        history.pop()  # drop the unanswered message so the history stays consistent
        raise
    # Add the AI reply to the history
    history.append({"role": "assistant", "content": ai_response})
    return ChatResponse(user_id=user_id, response=ai_response, chat_history=history)


@app.get("/history/{user_id}", response_model=List[Dict[str, str]])
async def get_chat_history(user_id: str):
    """Return a user's chat history (empty list if none)."""
    return user_chat_history.get(user_id, [])


@app.delete("/history/{user_id}")
async def clear_chat_history(user_id: str):
    """Clear a user's chat history."""
    if user_id in user_chat_history:
        user_chat_history[user_id] = []
        return {"message": f"Chat history for user {user_id} has been cleared"}
    return {"message": f"User {user_id} has no chat history"}


@app.get("/")
async def root():
    return {"message": "Chat history management API is running", "docs": "/docs"}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

3-Raw request with chat history - Flask version

```python
import os
from typing import Dict, List

import requests
from flask import Flask, jsonify, request

app = Flask(__name__)

# API configuration
API_URL = "https://api.siliconflow.cn/v1/chat/completions"
# Read the key from an environment variable (assumed name) instead of hard-coding it
API_KEY = os.environ["SILICONFLOW_API_KEY"]

# In-memory store standing in for a database: user_id -> list of messages
user_chat_history: Dict[str, List[Dict[str, str]]] = {}


def get_ai_response(messages: List[Dict[str, str]]) -> str:
    """Send the full message history to the model and return the reply text.

    Raises requests.exceptions.RequestException if the HTTP call fails.
    """
    payload = {"model": "Qwen/QwQ-32B", "messages": messages}
    headers = {"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"}
    response = requests.post(API_URL, json=payload, headers=headers, timeout=120)
    response.raise_for_status()
    return response.json()["choices"][0]["message"]["content"]


@app.route("/chat", methods=["POST"])
def chat():
    """Handle a chat message from a user."""
    data = request.get_json(silent=True) or {}  # tolerate a missing or invalid JSON body
    user_id = data.get("user_id")
    user_message = data.get("message")
    if not user_id or not user_message:
        return jsonify({"error": "Missing user_id or message"}), 400

    # Get (or create) this user's history, then add the user's message
    history = user_chat_history.setdefault(user_id, [])
    history.append({"role": "user", "content": user_message})

    try:
        ai_response = get_ai_response(history)
    except requests.exceptions.RequestException as e:
        # Don't store the error text as an assistant reply; drop the unanswered message
        history.pop()
        return jsonify({"error": f"AI model call failed: {e}"}), 502

    # Add the AI reply to the history
    history.append({"role": "assistant", "content": ai_response})
    return jsonify({"user_id": user_id, "response": ai_response, "chat_history": history})


@app.route("/history/<user_id>", methods=["GET"])
def get_history(user_id):
    """Return a user's chat history (empty list if none)."""
    return jsonify(user_chat_history.get(user_id, []))


@app.route("/history/<user_id>", methods=["DELETE"])
def clear_history(user_id):
    """Clear a user's chat history."""
    if user_id in user_chat_history:
        user_chat_history[user_id] = []
        return jsonify({"message": f"Chat history for user {user_id} has been cleared"})
    return jsonify({"message": f"User {user_id} has no chat history"})


@app.route("/")
def index():
    return jsonify({"message": "Flask chat history API is running", "endpoints": ["/chat", "/history/<user_id>"]})


if __name__ == "__main__":
    # debug=True turns on the reloader and interactive debugger; use it for local development only
    app.run(debug=True, host="0.0.0.0", port=8000)
```

4-Chat history with LangChain - Flask version
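In the LangChain version the history holds message objects instead of role/content dicts, and the code converts them back with an `isinstance(msg, HumanMessage)` check, which labels every non-human message (including a system message, if one were added) as `assistant`. A sketch of a more general mapping, assuming LangChain's message classes expose a `.type` string (`"human"`, `"ai"`, `"system"`) and a `.content` attribute; `to_role_dicts` and the stand-in `Msg` class are hypothetical, used so the sketch runs without LangChain installed.

```python
from dataclasses import dataclass
from typing import Dict, List

# LangChain message .type values -> OpenAI-style role strings
ROLE_BY_TYPE = {"human": "user", "ai": "assistant", "system": "system"}


def to_role_dicts(history: List) -> List[Dict[str, str]]:
    """Convert message objects with .type/.content into role/content dicts.

    Unknown message types raise KeyError instead of being silently mislabeled.
    """
    return [{"role": ROLE_BY_TYPE[msg.type], "content": msg.content} for msg in history]


@dataclass
class Msg:
    """Stand-in for LangChain's HumanMessage / AIMessage / SystemMessage in this sketch."""
    type: str
    content: str


history = [Msg("system", "Be brief."), Msg("human", "Hi"), Msg("ai", "Hello!")]
print(to_role_dicts(history))
```

With LangChain installed, the real message objects can be passed in place of `Msg`.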

```python
import os

from flask import Flask, jsonify, request
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI

app = Flask(__name__)

# ---------- Key configuration ----------
# SiliconFlow exposes an OpenAI-compatible API, so ChatOpenAI can talk to it directly
API_KEY = os.environ["SILICONFLOW_API_KEY"]  # assumed variable name; avoid hard-coding keys
API_BASE = "https://api.siliconflow.cn/v1"
MODEL_NAME = "Qwen/QwQ-32B"

# LangChain client
llm = ChatOpenAI(
    model=MODEL_NAME,
    api_key=API_KEY,
    base_url=API_BASE,
    temperature=0.7,
    max_tokens=512,  # caps the reply length; long answers may be cut off
)

# ---------- In-memory history ----------
user_histories: dict[str, list] = {}  # user_id -> list of HumanMessage / AIMessage


def get_history_list(user_id: str) -> list:
    """Return this user's list of LangChain message objects, creating it if needed."""
    return user_histories.setdefault(user_id, [])


def to_dicts(history: list) -> list:
    """Convert LangChain messages into plain role/content dicts for the client."""
    return [
        {"role": "user" if isinstance(msg, HumanMessage) else "assistant", "content": msg.content}
        for msg in history
    ]


@app.route("/chat", methods=["POST"])
def chat():
    data = request.get_json(silent=True) or {}
    user_id = data.get("user_id")
    user_text = data.get("message")
    if not user_id or not user_text:
        return jsonify({"error": "Missing user_id or message"}), 400

    history = get_history_list(user_id)
    history.append(HumanMessage(content=user_text))
    try:
        ai_msg = llm.invoke(history)  # sends the whole history, returns an AIMessage
    except Exception:
        history.pop()  # drop the unanswered message so the history stays consistent
        raise
    history.append(ai_msg)

    return jsonify({"user_id": user_id, "response": ai_msg.content, "chat_history": to_dicts(history)})


@app.route("/history/<user_id>", methods=["GET"])
def get_history(user_id):
    return jsonify(to_dicts(user_histories.get(user_id, [])))


@app.route("/history/<user_id>", methods=["DELETE"])
def clear_history(user_id):
    user_histories.pop(user_id, None)
    return jsonify({"message": f"Chat history for user {user_id} has been cleared"})


@app.route("/")
def index():
    return jsonify({"message": "Flask + LangChain chat service is running", "endpoints": ["/chat", "/history/<user_id>"]})


if __name__ == "__main__":
    app.run(debug=True, host="0.0.0.0", port=8000)
```
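The introduction mentions Postman request examples; the same calls can be made from Python. A minimal sketch using only the standard library, assuming one of the services above is running locally on port 8000 (the FastAPI, Flask and LangChain versions all use that port and the same `/chat` and `/history/<user_id>` routes). `build_request` and the user id `user1` are hypothetical, and the requests are only built here, not sent.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "http://127.0.0.1:8000"  # assumes a service from this README runs locally


def build_request(method: str, path: str, body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an HTTP request against the local chat service."""
    data = None
    headers = {}
    if body is not None:
        data = json.dumps(body, ensure_ascii=False).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(BASE_URL + path, data=data, headers=headers, method=method)


chat_req = build_request("POST", "/chat", {"user_id": "user1", "message": "Tell me a joke."})
history_req = build_request("GET", "/history/user1")
clear_req = build_request("DELETE", "/history/user1")

# To actually send one (the server must be running):
# with urllib.request.urlopen(chat_req, timeout=120) as resp:
#     print(json.loads(resp.read().decode("utf-8")))
```

These mirror the three Postman calls: send a message, read the history, and clear it.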