Writing a Spark program in Scala that reads data from the local filesystem and writes the result back to it

Part of this overlaps with an earlier article:

https://blog.csdn.net/lwprain/article/details/142336420

In the end, you still need to set up a Hadoop development environment on Windows. The key steps are:

1. Add an environment variable HADOOP_HOME with the value D:\java\hadoop-3.3.6

2. From https://github.com/cdarlint/winutils/tree/master/hadoop-3.3.6/bin, download hadoop.dll and winutils.exe and put them in D:\java\hadoop-3.3.6\bin.

3. Add D:\java\hadoop-3.3.6\bin to PATH.

If you run into other problems with these steps, see the original article.
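If these steps are incomplete, Spark on Windows typically fails as soon as it touches the local filesystem, with an error mentioning `winutils.exe` or `HADOOP_HOME`. The following is a hedged sketch, not part of the original project: a hypothetical `HadoopEnvCheck` object that uses only the JDK to report which of the three steps looks unfinished. The file names and the PATH check mirror the steps above; the object, its method names and its messages are assumptions.

```scala
import java.io.File
import java.nio.file.{Files, Path, Paths}

// Hypothetical helper (not from the original article): reports which of the
// setup steps (HADOOP_HOME, winutils.exe/hadoop.dll, PATH) look incomplete.
object HadoopEnvCheck {

  // Trim whitespace and a trailing separator so "...\bin\" matches "...\bin"
  private def normalize(entry: String): String =
    entry.trim.stripSuffix("\\").stripSuffix("/").toLowerCase

  /** Returns a list of problems; an empty list means the setup looks complete. */
  def problems(env: Map[String, String], exists: Path => Boolean): List[String] =
    env.get("HADOOP_HOME") match {
      case None => List("HADOOP_HOME is not set")
      case Some(home) =>
        val bin = Paths.get(home, "bin")
        val missing = List("winutils.exe", "hadoop.dll")
          .filterNot(name => exists(bin.resolve(name)))
          .map(name => s"$name not found in $bin")
        // File.pathSeparator is ';' on Windows and ':' elsewhere
        val onPath = env.getOrElse("PATH", "")
          .split(File.pathSeparator)
          .map(normalize)
          .contains(normalize(bin.toString))
        if (onPath) missing else missing :+ s"$bin is not on PATH"
    }

  def main(args: Array[String]): Unit = {
    // System.getenv(name) is typically case-insensitive on Windows, so "Path" is found too
    val env = Seq("HADOOP_HOME", "PATH")
      .flatMap(name => Option(System.getenv(name)).map(value => name -> value))
      .toMap
    val found = problems(env, path => Files.exists(path))
    if (found.isEmpty) println("Hadoop environment looks complete")
    else found.foreach(problem => println(s"Problem: $problem"))
  }
}
```

The filesystem check is passed in as a function so the logic can be exercised without a real Hadoop installation.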

I'll skip the project setup and just paste the relevant files.

4. pom.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>groupId</groupId>
        <artifactId>scala-spark01</artifactId>
        <version>1.0-SNAPSHOT</version>
        <packaging>jar</packaging>

        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-library</artifactId>
                <version>2.12.20</version>
            </dependency>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-core_2.12</artifactId>
                <version>3.5.6</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>3.3.6</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>net.alchim31.maven</groupId>
                    <artifactId>scala-maven-plugin</artifactId>
                    <version>3.2.2</version>
                    <executions>
                        <execution>
                            <goals>
                                <goal>compile</goal>
                                <goal>testCompile</goal>
                            </goals>
                        </execution>
                    </executions>
                    <configuration>
                        <jvmArgs>
                            <!-- JDK 9+ module flags, disabled for Java 8. XML comments may not contain
                                 two consecutive hyphens, so they are escaped as &#45; -->
                            <!-- <jvmArg>&#45;&#45;add-exports</jvmArg> -->
                            <!-- <jvmArg>java.base/sun.nio.ch=ALL-UNNAMED</jvmArg> -->
                            <!-- <jvmArg>&#45;&#45;add-opens</jvmArg> -->
                            <!-- <jvmArg>java.base/sun.nio.ch=ALL-UNNAMED</jvmArg> -->
                            <!-- <jvmArg>&#45;&#45;add-opens</jvmArg> -->
                            <!-- <jvmArg>java.base/java.lang=ALL-UNNAMED</jvmArg> -->
                        </jvmArgs>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-shade-plugin</artifactId>
                    <version>3.2.4</version>
                    <executions>
                        <execution>
                            <phase>package</phase>
                            <goals>
                                <goal>shade</goal>
                            </goals>
                            <configuration>
                                <transformers>
                                    <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                        <mainClass>org.rainpet.WordCount</mainClass>
                                    </transformer>
                                </transformers>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>
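In this pom, the `_2.12` suffix of `spark-core_2.12` is the Scala binary version Spark was built against, and it has to match the `scala-library` version (for 2.12.20 that is 2.12). Mixing binary versions usually shows up as `NoSuchMethodError` or similar failures at runtime. A minimal sketch of that rule, using a hypothetical `ScalaVersionCheck` object that is not part of the original project; `scala.util.Properties.versionNumberString` reports the Scala library actually on the classpath:

```scala
// Hypothetical helper (not from the original project): checks that a Spark
// artifactId suffix such as "_2.12" matches the scala-library version.
object ScalaVersionCheck {

  /** "2.12.20" becomes "2.12": the binary version used in artifactId suffixes. */
  def binaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  /** True when e.g. "spark-core_2.12" is compatible with scala-library "2.12.20". */
  def suffixMatches(artifactId: String, scalaLibraryVersion: String): Boolean =
    artifactId.endsWith("_" + binaryVersion(scalaLibraryVersion))

  def main(args: Array[String]): Unit = {
    // The Scala library version on the runtime classpath
    val running = scala.util.Properties.versionNumberString
    println(s"Running Scala $running (binary ${binaryVersion(running)})")
    println(s"spark-core_2.12 compatible: ${suffixMatches("spark-core_2.12", running)}")
  }
}
```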

5. Main code:

    package org.rainpet

    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    object WordCount {
      def main(args: Array[String]): Unit = {
        // Create the SparkConf: local mode with 2 threads
        val sparkConf = new SparkConf().setAppName("WordCount").setMaster("local[2]")
        val sparkContext = new SparkContext(sparkConf)

        // Output path on the local filesystem
        val outputPath = "file:///D:/java/wordcount_out"

        // Delete the output directory if it already exists
        // (saveAsTextFile fails when the target directory already exists)
        val hadoopConf = sparkContext.hadoopConfiguration
        val fs = org.apache.hadoop.fs.FileSystem.getLocal(hadoopConf)
        val outPath = new org.apache.hadoop.fs.Path(outputPath)
        if (fs.exists(outPath)) {
          fs.delete(outPath, true)
        }

        val data: RDD[String] = sparkContext.textFile("D:/java/workspace_gitee/cloud-compute-course-demo/scala-spark01/src/main/resources/word.txt")
        val words: RDD[String] = data.flatMap(_.split(" "))
        val wordAndOne: RDD[(String, Int)] = words.map(x => (x, 1))
        val wordAndCount: RDD[(String, Int)] = wordAndOne.reduceByKey(_ + _)

        // Save the result as text files under outputPath
        wordAndCount.saveAsTextFile(outputPath)

        val result: Array[(String, Int)] = wordAndCount.collect()
        println(result.toBuffer)
        sparkContext.stop()
      }

    }

6. Environment:

scala:2.12.20

spark:3.5.6

hadoop:3.3.6

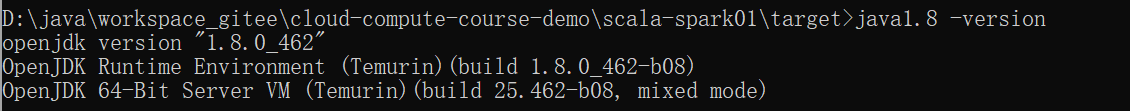

openjdk:1.8

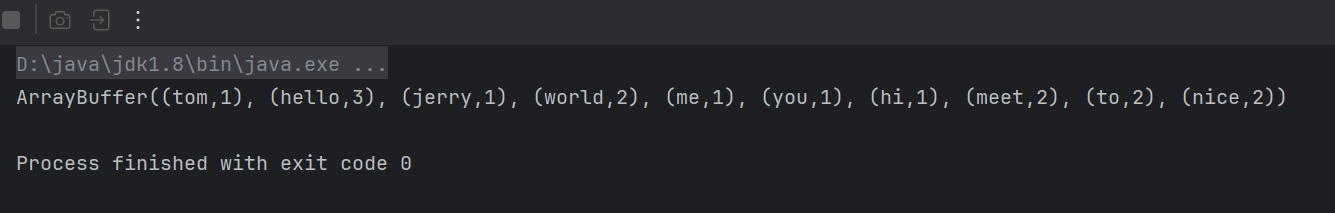
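The environment uses OpenJDK 1.8, and the pom keeps the `--add-exports`/`--add-opens` jvmArgs commented out. Those flags only exist on Java 9 and later (a Java 8 JVM does not recognize them), and they are typically needed when running Spark 3.5 on Java 17 outside `spark-submit`, which is presumably why they are disabled here. A small hypothetical helper (not from the original article) that reads `java.version` and tells the two cases apart:

```scala
// Hypothetical helper (not from the original article): parses the JVM's
// "java.version" property into a major version number.
object JavaVersionCheck {

  /** "1.8.0_392" gives 8 (pre-Java 9 scheme); "17.0.2" gives 17; "21" gives 21. */
  def majorVersion(javaVersion: String): Int = {
    val parts = javaVersion.split("[._-]")
    if (parts(0) == "1") parts(1).toInt else parts(0).toInt
  }

  def main(args: Array[String]): Unit = {
    val version = System.getProperty("java.version")
    val major = majorVersion(version)
    println(s"java.version=$version, major=$major")
    if (major > 8)
      println("Java 9+: the commented-out --add-opens/--add-exports flags may be needed")
  }
}
```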

Run result:

word.txt:

    hello world
    hello tom
    hello jerry
    hi world
    nice to meet you
    nice to meet me
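Only the input file is shown here. As a hedged sanity check, the following plain-Scala sketch (no Spark; the `LocalWordCount` object is hypothetical) mirrors the `flatMap`, `map`, `reduceByKey` pipeline of `WordCount` with ordinary collections, with the lines of word.txt hard-coded:

```scala
// Plain-Scala sketch (no Spark, hypothetical object): mirrors the RDD pipeline
// of WordCount with ordinary collections to predict the counts for word.txt.
object LocalWordCount {

  def count(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))   // like data.flatMap(_.split(" "))
      .map(word => (word, 1))  // like words.map(x => (x, 1))
      .groupBy(_._1)           // reduceByKey groups the pairs by key...
      .map { case (word, ones) => (word, ones.map(_._2).sum) } // ...and sums the 1s

  def main(args: Array[String]): Unit = {
    val wordTxt = Seq(
      "hello world", "hello tom", "hello jerry",
      "hi world", "nice to meet you", "nice to meet me")
    // Sorted by descending count, then word, only for readable output
    count(wordTxt).toSeq.sortBy { case (word, n) => (-n, word) }.foreach(println)
  }
}
```

Running it prints `(hello,3)` first, then `(meet,2)`, `(nice,2)`, `(to,2)`, `(world,2)`, then the five words that occur once (`hi`, `jerry`, `me`, `tom`, `you`). Because `saveAsTextFile` writes each element's `toString`, the `part-*` files under `D:/java/wordcount_out` should contain the same `(word,count)` pairs, though not necessarily in this order.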