DINOv3: A Major Release

On August 14, 2025, Meta released DINOv3.

Homepage: https://ai.meta.com/dinov3/

Paper: DINOv3

Hugging Face collection: https://huggingface.co/collections/facebook/dinov3-68924841bd6b561778e31009

Official blog: https://ai.meta.com/blog/dinov3-self-supervised-vision-model/

Code: https://github.com/facebookresearch/dinov3

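The figure above illustrates DINOv3's high-resolution dense features: the authors take the patch marked with a red cross and visualize its cosine similarity to every other patch, computed on DINOv3's output features.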
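The visualization described above boils down to a simple computation: L2-normalize every patch feature, take the dot product of the query patch with all patches, and reshape the scores back onto the patch grid. The sketch below assumes you already have a `(num_patches, dim)` array of patch features; with the Hugging Face model these would come from `outputs.last_hidden_state` after dropping the CLS token and the register tokens, on a grid of (image height / 16) × (image width / 16). The helper name `patch_similarity_map` is hypothetical, and random vectors stand in for real DINOv3 features.

```python
import numpy as np

def patch_similarity_map(patch_features: np.ndarray, grid_hw: tuple, query_idx: int) -> np.ndarray:
    """Cosine similarity between one query patch and every patch, as an (H, W) map.

    patch_features: (num_patches, dim) patch tokens (CLS/register tokens already removed).
    grid_hw: (H, W) patch grid with H * W == num_patches.
    query_idx: row-major flat index of the query patch (the "red cross").
    """
    h, w = grid_hw
    if patch_features.shape[0] != h * w:
        raise ValueError("number of patch features must equal H * W")
    # L2-normalize each patch vector so a dot product equals cosine similarity
    norms = np.linalg.norm(patch_features, axis=1, keepdims=True)
    normed = patch_features / np.clip(norms, 1e-12, None)
    sims = normed @ normed[query_idx]  # shape: (num_patches,)
    return sims.reshape(h, w)

# Random stand-ins for DINOv3 patch features: a 14x14 grid (224x224 input, patch size 16), dim 768
rng = np.random.default_rng(0)
feats = rng.standard_normal((14 * 14, 768))
sim_map = patch_similarity_map(feats, (14, 14), query_idx=7 * 14 + 7)
print(sim_map.shape)  # (14, 14)
```

With real features, plotting `sim_map` as a heatmap (upsampled to the image size) gives a picture in the spirit of the figure; the query patch itself always scores 1.0.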

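The release of DINOv3 marks a breakthrough in large-scale self-supervised learning (SSL) training, and shows that a single frozen SSL backbone can serve as a general-purpose vision encoder.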
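What "frozen backbone as a general-purpose encoder" means in practice: the encoder's weights are never updated, embeddings are extracted once, and only a lightweight head is fit on top for each task. The sketch below illustrates that pattern with a nearest-class-centroid classifier, a deliberately simple stand-in for a trained linear head; the synthetic vectors play the role of frozen DINOv3 embeddings, and `fit_centroids` / `predict` are hypothetical helper names.

```python
import numpy as np

def fit_centroids(embeddings: np.ndarray, labels: np.ndarray):
    """Return (classes, centroids): one unit-length mean embedding per class."""
    classes = np.unique(labels)
    # Normalize embeddings so the dot products below are cosine similarities
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    centroids = np.stack([normed[labels == c].mean(axis=0) for c in classes])
    centroids /= np.linalg.norm(centroids, axis=1, keepdims=True)
    return classes, centroids

def predict(embeddings: np.ndarray, classes: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Assign each embedding to the class whose centroid has the highest cosine similarity."""
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    return classes[np.argmax(normed @ centroids.T, axis=1)]

# Synthetic stand-ins for frozen-backbone embeddings: two well-separated clusters in 64 dims
rng = np.random.default_rng(0)
centers = 5.0 * rng.standard_normal((2, 64))
train_x = np.concatenate([
    centers[0] + rng.standard_normal((20, 64)),
    centers[1] + rng.standard_normal((20, 64)),
])
train_y = np.array([0] * 20 + [1] * 20)

classes, centroids = fit_centroids(train_x, train_y)
test_x = centers + 0.1 * rng.standard_normal((2, 64))
print(predict(test_x, classes, centroids))  # [0 1]
```

Only `centroids` depends on the task; the (here simulated) backbone embeddings are reused unchanged across tasks.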

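How do you use DINOv3? The official documentation offers two simple ways: the `pipeline` API, or `AutoImageProcessor` together with `AutoModel`.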

Option 1: the `pipeline` API

```python
from transformers import pipeline
from transformers.image_utils import load_image

# Load an example image from a URL
url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/pipeline-cat-chonk.jpeg"
image = load_image(url)

# Build an image-feature-extraction pipeline around the DINOv3 ViT-B/16 checkpoint
feature_extractor = pipeline(
    model="facebook/dinov3-vitb16-pretrain-lvd1689m",
    task="image-feature-extraction",
)
features = feature_extractor(image)
```
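By default, the `image-feature-extraction` pipeline returns the model's last hidden state as a nested Python list of shape `[1, num_tokens, hidden_dim]`. The helper below is a sketch for splitting that list into a global CLS embedding, the per-patch embeddings, and their mean. The token order [CLS, register tokens, patch tokens] and the count of 4 register tokens are assumptions to verify against the model config, and `summarize_pipeline_features` is a hypothetical name; a random nested list stands in for real pipeline output.

```python
import numpy as np

def summarize_pipeline_features(features, num_register_tokens: int = 4):
    """Split pipeline output into (cls_embedding, patch_tokens, mean_patch_embedding).

    Assumes `features` has shape [1, num_tokens, hidden_dim] with tokens ordered as
    [CLS, register tokens..., patch tokens...]; num_register_tokens=4 is an assumption.
    """
    arr = np.asarray(features, dtype=np.float32)[0]  # (num_tokens, hidden_dim)
    cls_embedding = arr[0]
    patch_tokens = arr[1 + num_register_tokens:]
    mean_patch = patch_tokens.mean(axis=0)
    return cls_embedding, patch_tokens, mean_patch

# Dummy nested list: 1 image, 1 + 4 + 196 tokens (224x224 input, patch 16), 768 dims (ViT-B)
dummy = np.random.default_rng(0).standard_normal((1, 201, 768)).tolist()
cls_emb, patches, mean_patch = summarize_pipeline_features(dummy)
print(cls_emb.shape, patches.shape, mean_patch.shape)  # (768,) (196, 768) (768,)
```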

Option 2: `AutoImageProcessor` + `AutoModel`

```python
import torch
from transformers import AutoImageProcessor, AutoModel
from transformers.image_utils import load_image

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = load_image(url)

pretrained_model_name = "facebook/dinov3-vitb16-pretrain-lvd1689m"
processor = AutoImageProcessor.from_pretrained(pretrained_model_name)
# device_map="auto" places the model on an available GPU (requires the accelerate package)
model = AutoModel.from_pretrained(
    pretrained_model_name,
    device_map="auto",
)

# Preprocess the image and move the tensors to the model's device
inputs = processor(images=image, return_tensors="pt").to(model.device)
with torch.inference_mode():
    outputs = model(**inputs)

# One global embedding per image
pooled_output = outputs.pooler_output
print("Pooled output shape:", pooled_output.shape)
```
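`pooled_output` gives one global vector per image (shape `[1, hidden_dim]`), which makes it convenient for image-level comparison such as retrieval. A minimal sketch of ranking a gallery by cosine similarity, assuming each embedding has already been converted to NumPy (for example with `pooled_output[0].cpu().numpy()`); the random vectors and the helper name `rank_by_cosine` are illustrative only.

```python
import numpy as np

def rank_by_cosine(query: np.ndarray, gallery: np.ndarray) -> np.ndarray:
    """Return gallery indices sorted from most to least cosine-similar to `query`.

    query: (dim,) embedding; gallery: (num_images, dim) embeddings.
    """
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    scores = g @ q  # cosine similarity per gallery image
    return np.argsort(-scores)

# Stand-in 768-dim embeddings (ViT-B hidden size); gallery[2] is a slightly noisy copy of the query
rng = np.random.default_rng(0)
query = rng.standard_normal(768)
gallery = rng.standard_normal((5, 768))
gallery[2] = query + 0.01 * rng.standard_normal(768)
print(rank_by_cosine(query, gallery)[0])  # 2
```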

If you have downloaded the model locally, you can load it directly from its directory:

```python
import torch
from transformers import AutoImageProcessor, AutoModel
from transformers.image_utils import load_image

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = load_image(url)

pretrained_model_name = "../model/dinov3-vitl16-pretrain-lvd1689m"  # local directory of the large (ViT-L) model
processor = AutoImageProcessor.from_pretrained(pretrained_model_name)
# use_safetensors is a model-loading option, so it belongs on AutoModel rather than the processor
model = AutoModel.from_pretrained(
    pretrained_model_name,
    device_map="auto",
    use_safetensors=True,
)

inputs = processor(images=image, return_tensors="pt").to(model.device)
with torch.inference_mode():
    outputs = model(**inputs)

pooled_output = outputs.pooler_output
print("Pooled output shape:", pooled_output.shape)
```

The output is:

```
Pooled output shape: torch.Size([1, 1024])
```

The same works with `pipeline()`: pass the local directory path as the model.

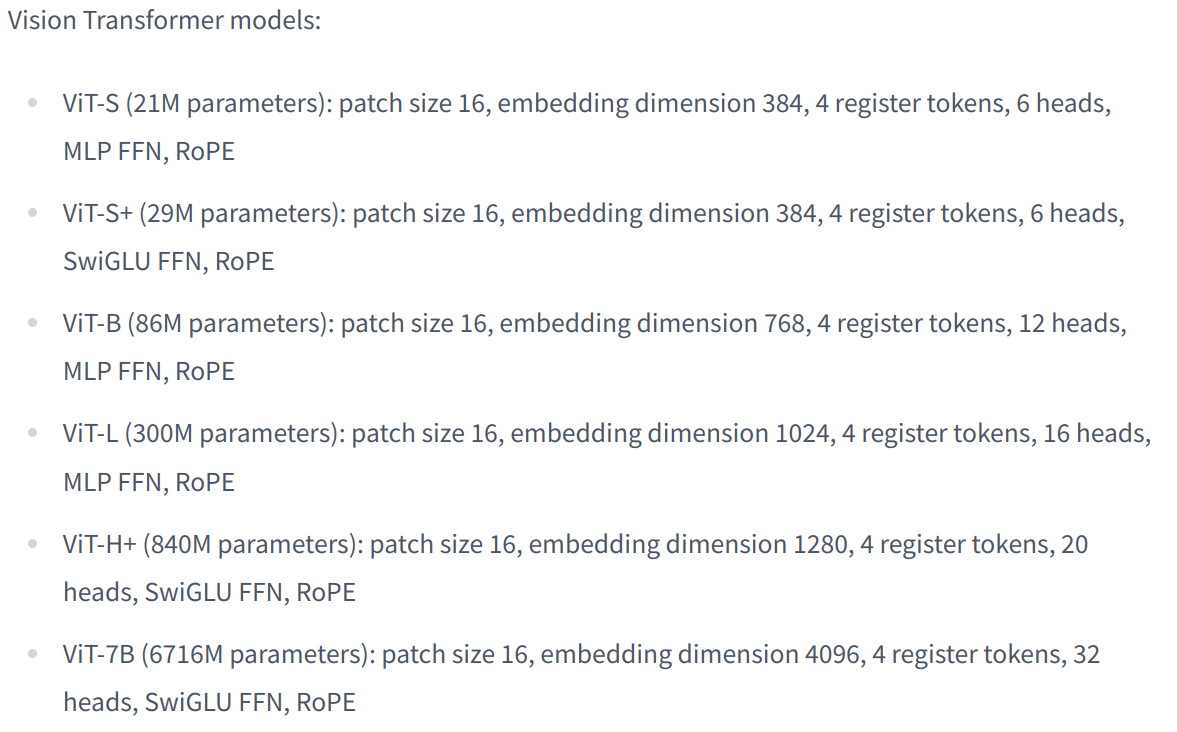

About the dimensionality of the model's output features:

As the output above shows, the large model produces 1024-dimensional features.
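The feature dimension equals the model's hidden size, while the number of tokens in `last_hidden_state` depends on the input resolution. A small sketch of that arithmetic, assuming a patch size of 16 (the `16` in `vitl16`), one CLS token, and 4 register tokens; the register count is an assumption to check against the model config, and `dinov3_hidden_state_shape` is a hypothetical helper.

```python
def dinov3_hidden_state_shape(height: int, width: int, hidden_dim: int,
                              patch_size: int = 16, num_register_tokens: int = 4,
                              batch_size: int = 1) -> tuple:
    """Expected last_hidden_state shape: (batch, 1 CLS + registers + patches, hidden_dim)."""
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be multiples of the patch size")
    num_patches = (height // patch_size) * (width // patch_size)
    return (batch_size, 1 + num_register_tokens + num_patches, hidden_dim)

# Large model (1024 dims) on a 224x224 input: 14 * 14 = 196 patches
print(dinov3_hidden_state_shape(224, 224, hidden_dim=1024))  # (1, 201, 1024)
```

`pooler_output`, by contrast, keeps only one vector per image, which is why its shape above is `[1, 1024]` regardless of resolution.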

To be continued...