A PyTorch Implementation of GCN (Graph Convolutional Network)

1. PyTorch Code

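This example uses the Cora citation dataset (2,708 papers, 1,433 bag-of-words features per paper, 7 classes). It stacks two GCN convolution layers for node classification. The model is trained on the 140 labeled training nodes and evaluated on the 1,000 test nodes after every epoch.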
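For reference, the layer-wise propagation rule of Kipf and Welling's GCN is given below. With its default settings, `GCNConv` applies this rule with self-loops and symmetric normalization. The second equation shows the two-layer model built in the code, and the third shows the training loss. Bias terms are omitted. For Cora, $X \in \mathbb{R}^{2708 \times 1433}$, $W^{(0)} \in \mathbb{R}^{1433 \times 16}$ and $W^{(1)} \in \mathbb{R}^{16 \times 7}$.

$$
H^{(l+1)} = \sigma\left(\hat{A} H^{(l)} W^{(l)}\right), \qquad \hat{A} = \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2}, \qquad \tilde{A} = A + I_N, \qquad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}
$$

$$
Z = \log \mathrm{softmax}\left(\hat{A}\, \mathrm{dropout}_{0.5}\left(\mathrm{ReLU}\left(\hat{A} X W^{(0)}\right)\right) W^{(1)}\right)
$$

$$
\mathcal{L} = -\frac{1}{|\mathcal{V}_{\text{train}}|} \sum_{i \in \mathcal{V}_{\text{train}}} Z_{i, y_i}
$$

Here $Z$ already holds log-probabilities. The last equation is therefore what `F.nll_loss` computes over the nodes selected by `train_mask`.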

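The following dense NumPy sketch makes the normalization $\tilde{D}^{-1/2}(A+I)\tilde{D}^{-1/2}$ concrete on a toy graph. It is an illustration only. PyG's `GCNConv` works on sparse edge lists, not dense matrices. The function names `normalized_adjacency`, `log_softmax` and `gcn_forward` are hypothetical. The sketch assumes the edge list contains both directions of every edge, as Cora's `edge_index` does.

```python
import numpy as np


def normalized_adjacency(edge_index, num_nodes):
    """Dense D~^-1/2 (A + I) D~^-1/2 built from a 2 x E edge list."""
    src, dst = edge_index
    A = np.zeros((num_nodes, num_nodes))
    A[src, dst] = 1.0                      # assumes both edge directions are listed
    A_tilde = A + np.eye(num_nodes)        # add self-loops
    deg = A_tilde.sum(axis=1)              # node degrees including the self-loop
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    # Scale row i by d_i^-1/2 and column j by d_j^-1/2
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]


def log_softmax(x):
    x = x - x.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    return x - np.log(np.exp(x).sum(axis=1, keepdims=True))


def gcn_forward(A_hat, X, W0, W1):
    """Two GCN layers in inference mode (no dropout, no bias)."""
    H = np.maximum(A_hat @ X @ W0, 0.0)    # layer 1 followed by ReLU
    return log_softmax(A_hat @ H @ W1)     # layer 2 followed by log-softmax


# Toy example: path graph 0-1-2, 4 input features, 5 hidden units, 2 classes
edge_index = np.array([[0, 1, 1, 2],
                       [1, 0, 2, 1]])
A_hat = normalized_adjacency(edge_index, num_nodes=3)
rng = np.random.default_rng(0)
Z = gcn_forward(A_hat, rng.normal(size=(3, 4)), rng.normal(size=(4, 5)), rng.normal(size=(5, 2)))
print(A_hat.round(3))
print(Z.shape)  # (3, 2)
```

In this toy graph node 1 has two neighbours plus a self-loop. Its entries are therefore scaled down more strongly ($1/3$ on the diagonal) than those of the end nodes ($1/2$).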
```python
import os

import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
from torch_geometric.datasets import Planetoid
from torch_geometric.nn import GCNConv


class Net(torch.nn.Module):
    """Two-layer GCN: node features -> 16 hidden units -> one log-probability per class."""

    def __init__(self, num_features, num_classes):
        super().__init__()
        self.conv1 = GCNConv(num_features, 16)
        self.conv2 = GCNConv(16, num_classes)

    def forward(self, data):
        x, edge_index = data.x, data.edge_index
        x = self.conv1(x, edge_index)
        x = F.relu(x)
        x = F.dropout(x, training=self.training)  # p=0.5, only active in train mode
        x = self.conv2(x, edge_index)
        return F.log_softmax(x, dim=1)


if __name__ == "__main__":
    dataset = Planetoid(root="./data/Cora", name="Cora")
    data = dataset[0]  # Cora consists of a single graph

    model = Net(dataset.num_node_features, dataset.num_classes)
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

    losses = []
    test_acc = []
    for epoch in range(200):
        # Training step. model.eval() below disables dropout, so re-enable train mode every epoch
        model.train()
        optimizer.zero_grad()
        out = model(data)
        loss = F.nll_loss(out[data.train_mask], data.y[data.train_mask])  # negative log-likelihood loss
        loss.backward()
        optimizer.step()
        losses.append(loss.item())

        # Evaluation on the test nodes (no gradients needed)
        model.eval()
        with torch.no_grad():
            pred = model(data).argmax(dim=1)
        correct = int((pred[data.test_mask] == data.y[data.test_mask]).sum())
        acc = correct / int(data.test_mask.sum())
        test_acc.append(acc)
        print(f"Epoch:{epoch} Train Loss:{loss.item()} Test Acc:{acc}")

    base_dir = "."  # output directory for the figures; change as needed
    os.makedirs(base_dir, exist_ok=True)

    # Training loss curve
    plt.figure(figsize=(12, 6))
    plt.plot(losses, color="tomato")
    plt.xlabel("epoch")
    plt.ylabel("loss")
    plt.title("Training loss")
    plt.grid()
    plt.savefig(os.path.join(base_dir, "cora_gcn_loss.jpg"), dpi=300)
    plt.close()

    # Test accuracy curve
    plt.figure(figsize=(12, 6))
    plt.plot(test_acc, color="orange")
    plt.xlabel("epoch")
    plt.ylabel("acc")
    plt.title("Test accuracy")
    plt.grid()
    plt.savefig(os.path.join(base_dir, "cora_gcn_test_acc.jpg"), dpi=300)
    plt.close()
```

2. Results

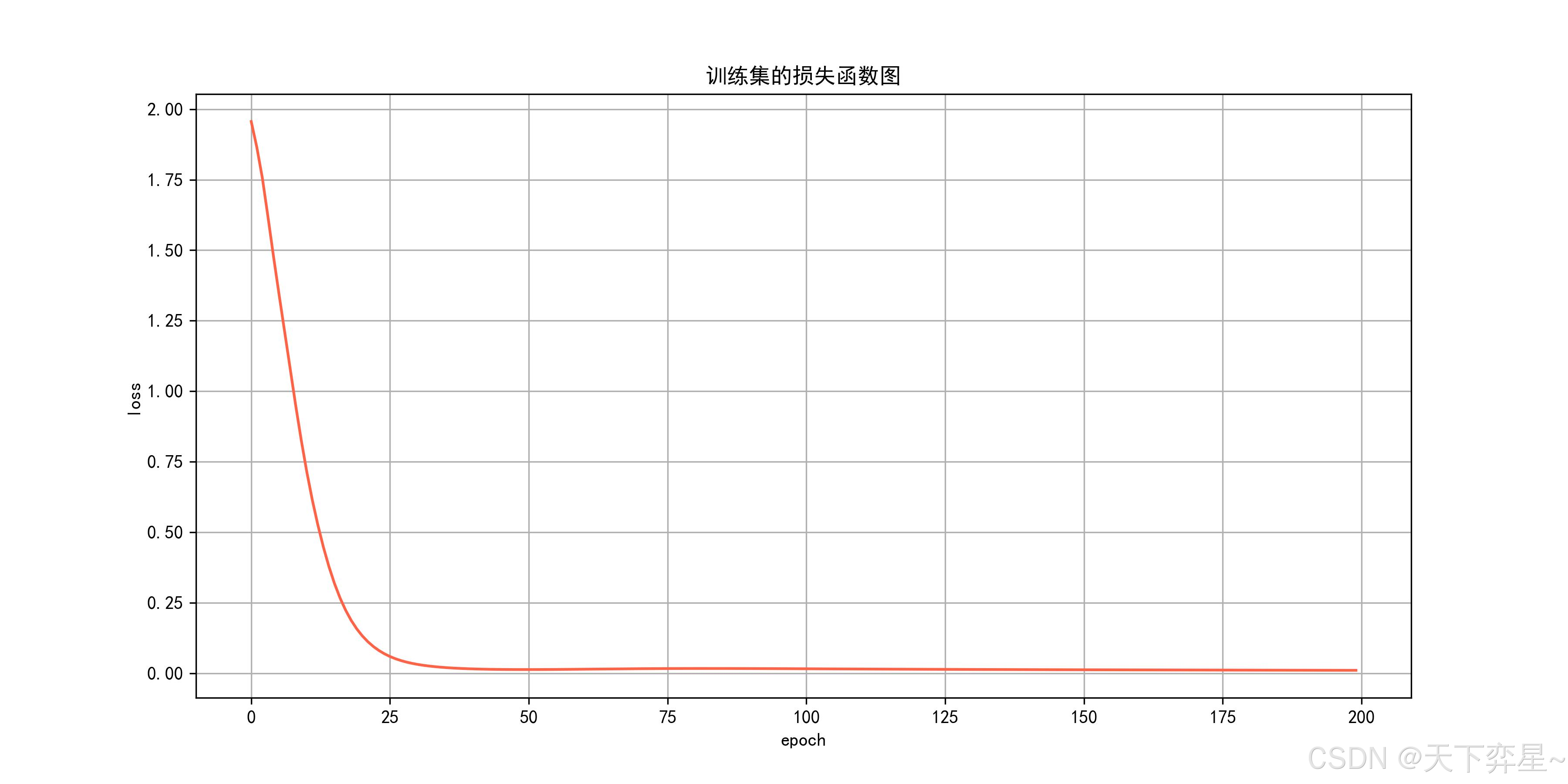

2.1 Training loss curve

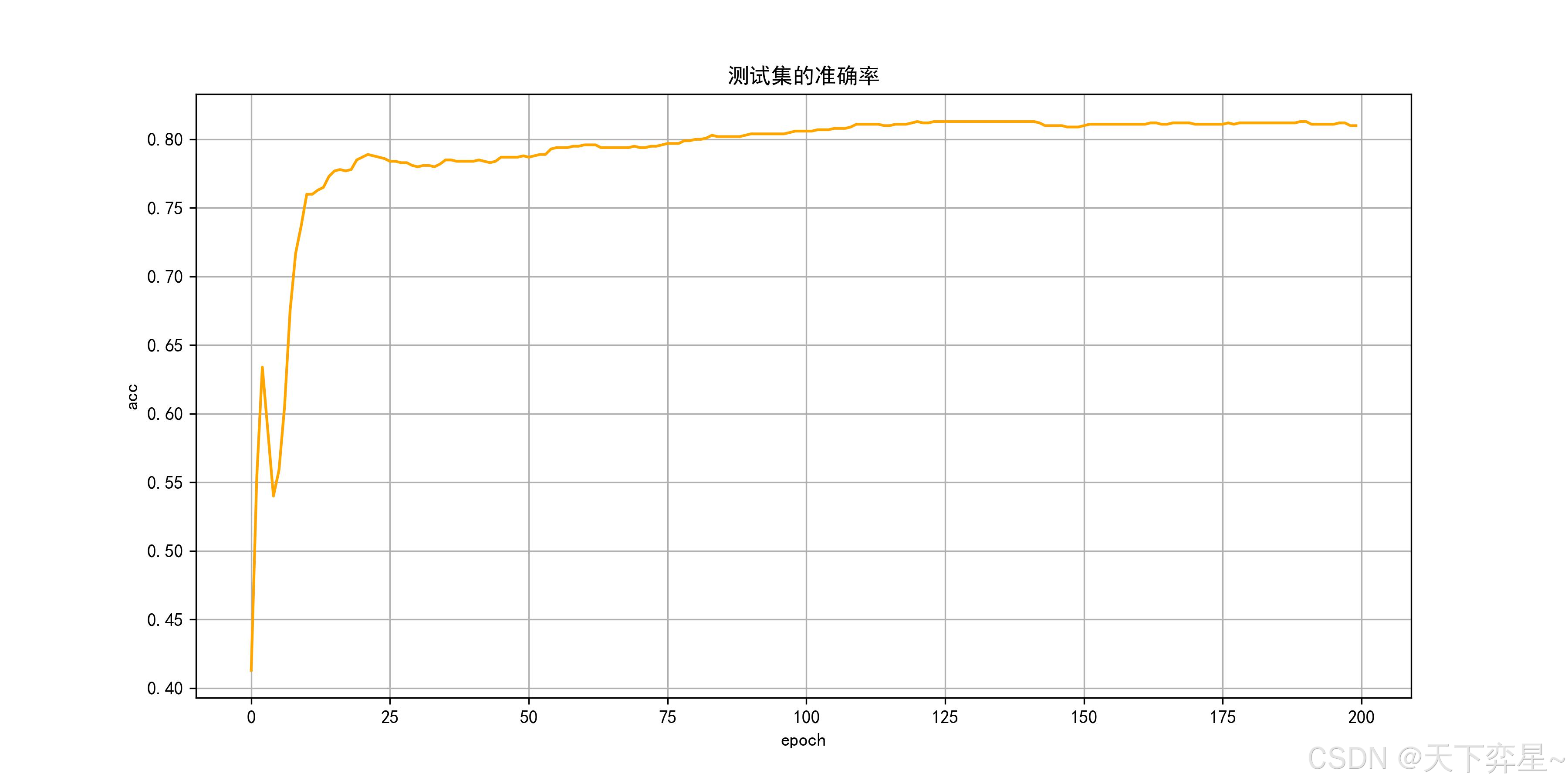

2.2 Test accuracy over epochs

2.3 Output of each epoch

Epoch:0 Train Loss:1.9429240226745605 Test Acc:0.473

Epoch:1 Train Loss:1.8335933685302734 Test Acc:0.593

Epoch:2 Train Loss:1.6950715780258179 Test Acc:0.646

Epoch:3 Train Loss:1.5449037551879883 Test Acc:0.665

Epoch:4 Train Loss:1.3957326412200928 Test Acc:0.68

Epoch:5 Train Loss:1.2522907257080078 Test Acc:0.702

Epoch:6 Train Loss:1.1146392822265625 Test Acc:0.726

Epoch:7 Train Loss:0.9850963950157166 Test Acc:0.742

Epoch:8 Train Loss:0.865148663520813 Test Acc:0.753

Epoch:9 Train Loss:0.7546350359916687 Test Acc:0.766

Epoch:10 Train Loss:0.6532057523727417 Test Acc:0.779

Epoch:11 Train Loss:0.5612899661064148 Test Acc:0.789

Epoch:12 Train Loss:0.47923964262008667 Test Acc:0.798

Epoch:13 Train Loss:0.4067971706390381 Test Acc:0.802

Epoch:14 Train Loss:0.34367871284484863 Test Acc:0.802

Epoch:15 Train Loss:0.2893293499946594 Test Acc:0.801

Epoch:16 Train Loss:0.24299605190753937 Test Acc:0.805

Epoch:17 Train Loss:0.20382674038410187 Test Acc:0.806

Epoch:18 Train Loss:0.17094141244888306 Test Acc:0.804

Epoch:19 Train Loss:0.14352205395698547 Test Acc:0.803

Epoch:20 Train Loss:0.12082599848508835 Test Acc:0.803

Epoch:21 Train Loss:0.10212802141904831 Test Acc:0.805

Epoch:22 Train Loss:0.08679106831550598 Test Acc:0.809

Epoch:23 Train Loss:0.07417013496160507 Test Acc:0.81

Epoch:24 Train Loss:0.06373254209756851 Test Acc:0.808

Epoch:25 Train Loss:0.05506579577922821 Test Acc:0.804

Epoch:26 Train Loss:0.047877322882413864 Test Acc:0.802

Epoch:27 Train Loss:0.04192071035504341 Test Acc:0.802

Epoch:28 Train Loss:0.037012018263339996 Test Acc:0.8

Epoch:29 Train Loss:0.032964423298835754 Test Acc:0.798

Epoch:30 Train Loss:0.029622385278344154 Test Acc:0.798

Epoch:31 Train Loss:0.026850802823901176 Test Acc:0.799

Epoch:32 Train Loss:0.024533459916710854 Test Acc:0.798

Epoch:33 Train Loss:0.022576363757252693 Test Acc:0.798

Epoch:34 Train Loss:0.020912956446409225 Test Acc:0.798

Epoch:35 Train Loss:0.01949603296816349 Test Acc:0.797

Epoch:36 Train Loss:0.01829376071691513 Test Acc:0.796

Epoch:37 Train Loss:0.017278574407100677 Test Acc:0.795

Epoch:38 Train Loss:0.016427984461188316 Test Acc:0.794

Epoch:39 Train Loss:0.01572219282388687 Test Acc:0.794

Epoch:40 Train Loss:0.015136897563934326 Test Acc:0.794

Epoch:41 Train Loss:0.01465317141264677 Test Acc:0.794

Epoch:42 Train Loss:0.014255816116929054 Test Acc:0.794

Epoch:43 Train Loss:0.013931368477642536 Test Acc:0.796

Epoch:44 Train Loss:0.013672472909092903 Test Acc:0.795

Epoch:45 Train Loss:0.013474125415086746 Test Acc:0.796

Epoch:46 Train Loss:0.013331538066267967 Test Acc:0.796

Epoch:47 Train Loss:0.013239811174571514 Test Acc:0.795

Epoch:48 Train Loss:0.01319477055221796 Test Acc:0.797

Epoch:49 Train Loss:0.013191784732043743 Test Acc:0.797

Epoch:50 Train Loss:0.013221818953752518 Test Acc:0.797

Epoch:51 Train Loss:0.013279520906507969 Test Acc:0.8

Epoch:52 Train Loss:0.01336253248155117 Test Acc:0.8

Epoch:53 Train Loss:0.013466333039104939 Test Acc:0.801

Epoch:54 Train Loss:0.013588031753897667 Test Acc:0.803

Epoch:55 Train Loss:0.01372613850980997 Test Acc:0.802

Epoch:56 Train Loss:0.0138785345479846 Test Acc:0.802

Epoch:57 Train Loss:0.01404199842363596 Test Acc:0.803

Epoch:58 Train Loss:0.014217497780919075 Test Acc:0.803

Epoch:59 Train Loss:0.01439977902919054 Test Acc:0.804

Epoch:60 Train Loss:0.014586491510272026 Test Acc:0.803

Epoch:61 Train Loss:0.014775114133954048 Test Acc:0.803

Epoch:62 Train Loss:0.014964202418923378 Test Acc:0.803

Epoch:63 Train Loss:0.015152176842093468 Test Acc:0.804

Epoch:64 Train Loss:0.015336167998611927 Test Acc:0.805

Epoch:65 Train Loss:0.015514318831264973 Test Acc:0.805

Epoch:66 Train Loss:0.01568502001464367 Test Acc:0.805

Epoch:67 Train Loss:0.015847589820623398 Test Acc:0.804

Epoch:68 Train Loss:0.01600045897066593 Test Acc:0.804

Epoch:69 Train Loss:0.01614467054605484 Test Acc:0.805

Epoch:70 Train Loss:0.016276754438877106 Test Acc:0.805

Epoch:71 Train Loss:0.01639663800597191 Test Acc:0.805

Epoch:72 Train Loss:0.016504433006048203 Test Acc:0.805

Epoch:73 Train Loss:0.01659868098795414 Test Acc:0.805

Epoch:74 Train Loss:0.016679927706718445 Test Acc:0.803

Epoch:75 Train Loss:0.01674964651465416 Test Acc:0.803

Epoch:76 Train Loss:0.016807589679956436 Test Acc:0.803

Epoch:77 Train Loss:0.016852566972374916 Test Acc:0.802

Epoch:78 Train Loss:0.016885491088032722 Test Acc:0.802

Epoch:79 Train Loss:0.016908036544919014 Test Acc:0.802

Epoch:80 Train Loss:0.01692015491425991 Test Acc:0.8

Epoch:81 Train Loss:0.016921622678637505 Test Acc:0.8

Epoch:82 Train Loss:0.016912296414375305 Test Acc:0.8

Epoch:83 Train Loss:0.016894150525331497 Test Acc:0.8

Epoch:84 Train Loss:0.01686643622815609 Test Acc:0.8

Epoch:85 Train Loss:0.01682898961007595 Test Acc:0.8

Epoch:86 Train Loss:0.01678611896932125 Test Acc:0.799

Epoch:87 Train Loss:0.016737814992666245 Test Acc:0.799

Epoch:88 Train Loss:0.016682147979736328 Test Acc:0.799

Epoch:89 Train Loss:0.016619514673948288 Test Acc:0.799

Epoch:90 Train Loss:0.016552427783608437 Test Acc:0.8

Epoch:91 Train Loss:0.016480647027492523 Test Acc:0.8

Epoch:92 Train Loss:0.016405712813138962 Test Acc:0.8

Epoch:93 Train Loss:0.016329515725374222 Test Acc:0.8

Epoch:94 Train Loss:0.01624993048608303 Test Acc:0.8

Epoch:95 Train Loss:0.01616790145635605 Test Acc:0.8

Epoch:96 Train Loss:0.016083937138319016 Test Acc:0.799

Epoch:97 Train Loss:0.015997864305973053 Test Acc:0.801

Epoch:98 Train Loss:0.015910038724541664 Test Acc:0.801

Epoch:99 Train Loss:0.015820365399122238 Test Acc:0.801

Epoch:100 Train Loss:0.015730848535895348 Test Acc:0.801

Epoch:101 Train Loss:0.015641991049051285 Test Acc:0.801

Epoch:102 Train Loss:0.015551982447504997 Test Acc:0.801

Epoch:103 Train Loss:0.015460828319191933 Test Acc:0.801

Epoch:104 Train Loss:0.015370532870292664 Test Acc:0.801

Epoch:105 Train Loss:0.015280655585229397 Test Acc:0.801

Epoch:106 Train Loss:0.015191550366580486 Test Acc:0.801

Epoch:107 Train Loss:0.015103167854249477 Test Acc:0.801

Epoch:108 Train Loss:0.01501508243381977 Test Acc:0.801

Epoch:109 Train Loss:0.014928753487765789 Test Acc:0.801

Epoch:110 Train Loss:0.014843959361314774 Test Acc:0.801

Epoch:111 Train Loss:0.01476089283823967 Test Acc:0.801

Epoch:112 Train Loss:0.01467831153422594 Test Acc:0.801

Epoch:113 Train Loss:0.014596865512430668 Test Acc:0.801

Epoch:114 Train Loss:0.014517165720462799 Test Acc:0.801

Epoch:115 Train Loss:0.014438762329518795 Test Acc:0.801

Epoch:116 Train Loss:0.014360867440700531 Test Acc:0.802

Epoch:117 Train Loss:0.01428411528468132 Test Acc:0.803

Epoch:118 Train Loss:0.014208598993718624 Test Acc:0.802

Epoch:119 Train Loss:0.014135085977613926 Test Acc:0.802

Epoch:120 Train Loss:0.014063213020563126 Test Acc:0.802

Epoch:121 Train Loss:0.01399277988821268 Test Acc:0.802

Epoch:122 Train Loss:0.013923508115112782 Test Acc:0.802

Epoch:123 Train Loss:0.013854768127202988 Test Acc:0.802

Epoch:124 Train Loss:0.0137864351272583 Test Acc:0.803

Epoch:125 Train Loss:0.013720217160880566 Test Acc:0.803

Epoch:126 Train Loss:0.013654814101755619 Test Acc:0.804

Epoch:127 Train Loss:0.013590318150818348 Test Acc:0.804

Epoch:128 Train Loss:0.01352674700319767 Test Acc:0.804

Epoch:129 Train Loss:0.013463341630995274 Test Acc:0.804

Epoch:130 Train Loss:0.013401255011558533 Test Acc:0.804

Epoch:131 Train Loss:0.013341032899916172 Test Acc:0.805

Epoch:132 Train Loss:0.013280920684337616 Test Acc:0.806

Epoch:133 Train Loss:0.013221459463238716 Test Acc:0.806

Epoch:134 Train Loss:0.01316215842962265 Test Acc:0.806

Epoch:135 Train Loss:0.013103933073580265 Test Acc:0.807

Epoch:136 Train Loss:0.013046271167695522 Test Acc:0.807

Epoch:137 Train Loss:0.012989106588065624 Test Acc:0.807

Epoch:138 Train Loss:0.012933368794620037 Test Acc:0.807

Epoch:139 Train Loss:0.012878047302365303 Test Acc:0.806

Epoch:140 Train Loss:0.012822895310819149 Test Acc:0.806

Epoch:141 Train Loss:0.012768763117492199 Test Acc:0.807

Epoch:142 Train Loss:0.01271540205925703 Test Acc:0.807

Epoch:143 Train Loss:0.012662047520279884 Test Acc:0.807

Epoch:144 Train Loss:0.012610061094164848 Test Acc:0.807

Epoch:145 Train Loss:0.012558558024466038 Test Acc:0.808

Epoch:146 Train Loss:0.012508128769695759 Test Acc:0.808

Epoch:147 Train Loss:0.012457980774343014 Test Acc:0.808

Epoch:148 Train Loss:0.01240801066160202 Test Acc:0.808

Epoch:149 Train Loss:0.01235883217304945 Test Acc:0.808

Epoch:150 Train Loss:0.012310194782912731 Test Acc:0.808

Epoch:151 Train Loss:0.012262031435966492 Test Acc:0.808

Epoch:152 Train Loss:0.012214711867272854 Test Acc:0.808

Epoch:153 Train Loss:0.012168481945991516 Test Acc:0.808

Epoch:154 Train Loss:0.012121256440877914 Test Acc:0.808

Epoch:155 Train Loss:0.012074427679181099 Test Acc:0.809

Epoch:156 Train Loss:0.012028360739350319 Test Acc:0.809

Epoch:157 Train Loss:0.011983374133706093 Test Acc:0.809

Epoch:158 Train Loss:0.0119386101141572 Test Acc:0.809

Epoch:159 Train Loss:0.01189413946121931 Test Acc:0.809

Epoch:160 Train Loss:0.011850536800920963 Test Acc:0.809

Epoch:161 Train Loss:0.011807534843683243 Test Acc:0.809

Epoch:162 Train Loss:0.011764495633542538 Test Acc:0.809

Epoch:163 Train Loss:0.011721866205334663 Test Acc:0.809

Epoch:164 Train Loss:0.011679567396640778 Test Acc:0.809

Epoch:165 Train Loss:0.011638054624199867 Test Acc:0.809

Epoch:166 Train Loss:0.011597426608204842 Test Acc:0.809

Epoch:167 Train Loss:0.011557132937014103 Test Acc:0.809

Epoch:168 Train Loss:0.011517229489982128 Test Acc:0.809

Epoch:169 Train Loss:0.011477210558950901 Test Acc:0.809

Epoch:170 Train Loss:0.011438659392297268 Test Acc:0.809

Epoch:171 Train Loss:0.011400625109672546 Test Acc:0.809

Epoch:172 Train Loss:0.011362049728631973 Test Acc:0.807

Epoch:173 Train Loss:0.011323662474751472 Test Acc:0.807

Epoch:174 Train Loss:0.011285802349448204 Test Acc:0.807

Epoch:175 Train Loss:0.011248385533690453 Test Acc:0.807

Epoch:176 Train Loss:0.011211426928639412 Test Acc:0.807

Epoch:177 Train Loss:0.011174659244716167 Test Acc:0.807

Epoch:178 Train Loss:0.011138970032334328 Test Acc:0.809

Epoch:179 Train Loss:0.011103731580078602 Test Acc:0.808

Epoch:180 Train Loss:0.01106821745634079 Test Acc:0.808

Epoch:181 Train Loss:0.011033419519662857 Test Acc:0.808

Epoch:182 Train Loss:0.010998581536114216 Test Acc:0.808

Epoch:183 Train Loss:0.010963555425405502 Test Acc:0.808

Epoch:184 Train Loss:0.010929064825177193 Test Acc:0.809

Epoch:185 Train Loss:0.010895474813878536 Test Acc:0.809

Epoch:186 Train Loss:0.010861996561288834 Test Acc:0.809

Epoch:187 Train Loss:0.010829135775566101 Test Acc:0.809

Epoch:188 Train Loss:0.010796524584293365 Test Acc:0.809

Epoch:189 Train Loss:0.01076436322182417 Test Acc:0.809

Epoch:190 Train Loss:0.010732785798609257 Test Acc:0.809

Epoch:191 Train Loss:0.010701286606490612 Test Acc:0.809

Epoch:192 Train Loss:0.010670561343431473 Test Acc:0.81

Epoch:193 Train Loss:0.010640090331435204 Test Acc:0.81

Epoch:194 Train Loss:0.010609396733343601 Test Acc:0.81

Epoch:195 Train Loss:0.010578806512057781 Test Acc:0.81

Epoch:196 Train Loss:0.010548238642513752 Test Acc:0.81

Epoch:197 Train Loss:0.010518139228224754 Test Acc:0.81

Epoch:198 Train Loss:0.010489010252058506 Test Acc:0.81

Epoch:199 Train Loss:0.010459662415087223 Test Acc:0.81
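A 200-line log is hard to scan by eye. The small helper below turns lines in the printed format `Epoch:… Train Loss:… Test Acc:…` back into numbers and finds the first epoch with the highest test accuracy. It is a hypothetical convenience script, not part of the original code, and the names `parse_log` and `best_epoch` are illustrative. Picking an epoch by test accuracy is only useful for reading this log. For actual model selection, the validation split (`data.val_mask`) would normally be used instead.

```python
import re

# Matches lines printed by the training script, e.g. "Epoch:0 Train Loss:1.94 Test Acc:0.473"
LOG_LINE = re.compile(r"Epoch:(\d+) Train Loss:([\d.eE+-]+) Test Acc:([\d.]+)")


def parse_log(text):
    """Return a list of (epoch, loss, acc) tuples found in the log text."""
    return [(int(e), float(l), float(a)) for e, l, a in LOG_LINE.findall(text)]


def best_epoch(records):
    """Return (epoch, acc) for the earliest epoch that reaches the highest test accuracy."""
    epoch, _, acc = max(records, key=lambda r: (r[2], -r[0]))
    return epoch, acc
```

Applied to the full log above, it reports a best test accuracy of 0.81, first reached at epoch 23. Epoch 199 ends at the same value.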