Running cn-clip inference on an Axera (爱芯元智) chip

Main reference: GitHub - AXERA-TECH/CLIP-ONNX-AX650-CPP

1. Convert the CLIP model to ONNX

pip install onnxmltools

pip install protobuf==4.25.5

export CUDA_VISIBLE_DEVICES=0

export PYTHONPATH=${PYTHONPATH}:`pwd`/cn_clip

DATAPATH="/data/LLM/clip-data/"

mkdir -p ${DATAPATH}/deploy/

checkpoint_path=${DATAPATH}/pretrained_weights/epoch_latest_event0521.pt

cd /data/LLM/Chinese-CLIP

Run the following command to convert the checkpoint to ONNX:

python cn_clip/deploy/pytorch_to_onnx.py --model-arch ViT-B-16 --pytorch-ckpt-path ${checkpoint_path} --save-onnx-path ${DATAPATH}/deploy/vit-b-16 --convert-text --convert-vision
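Before moving on, it can help to confirm that the export actually wrote the files the later steps use. The following is a minimal sketch, not part of the original workflow: `check_onnx_outputs` is a hypothetical helper, and the suffix list assumes the `img.fp32.onnx` and `txt.fp32.onnx` naming that appears in the commands of this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which expected ONNX exports exist under a prefix.
# The suffixes are an assumption based on the file names used in this guide.
check_onnx_outputs() {
    local prefix="$1"
    local missing=0
    local suffix
    for suffix in img.fp32.onnx txt.fp32.onnx; do
        if [ -f "${prefix}.${suffix}" ]; then
            echo "found:   ${prefix}.${suffix}"
        else
            echo "missing: ${prefix}.${suffix}"
            missing=1
        fi
    done
    # Non-zero return signals that at least one file is missing.
    return "${missing}"
}

# Example: check_onnx_outputs "${DATAPATH}/deploy/vit-b-16"
```

The function returns non-zero when a file is missing, so it can be chained with `&&` in front of the next step.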

2. Convert ONNX to axmodel

The cnclip_config_npu3_U8.json file:

{
  "model_type": "ONNX",
  "npu_mode": "NPU3",
  "quant": {
    "input_configs": [
      {
        "tensor_name": "image",
        "calibration_dataset": "./val.tar",
        "calibration_size": 224,
        "calibration_mean": [122.77, 116.746, 104.093],
        "calibration_std": [68.5, 66.632, 70.323]
      }
    ],
    "calibration_method": "MinMax",
    "precision_analysis": false
  },
  "input_processors": [
    {
      "tensor_name": "image",
      "tensor_format": "RGB",
      "src_format": "RGB",
      "src_dtype": "U8",
      "src_layout": "NHWC",
      "csc_mode": "NoCSC"
    }
  ],
  "compiler": {
    "check": 0
  }
}
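The calibration_mean and calibration_std values appear to be the usual CLIP normalization constants scaled from the 0-1 range to 0-255, which matches the U8 input declared in input_processors. The sketch below redoes that arithmetic with awk. The 0-1 constants are the ones published with OpenAI CLIP and are an assumption about where the config numbers come from; `scale_to_u8` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: scale a 0-1 normalization constant to the 0-255 range.
scale_to_u8() {
    awk -v v="$1" 'BEGIN { printf "%.3f\n", v * 255 }'
}

# Per-channel mean (R, G, B), assumed CLIP constants.
for v in 0.48145466 0.4578275 0.40821073; do
    scale_to_u8 "$v"
done

# Per-channel std (R, G, B), assumed CLIP constants.
for v in 0.26862954 0.26130258 0.27577711; do
    scale_to_u8 "$v"
done
```

The printed values agree with the config to within rounding, for example 122.771 against 122.77 and 68.501 against 68.5.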

Run:

pulsar2 build --input vit-b-16.img.fp32.onnx --output_name vit-b-16.img.fp32.axmodel --config cnclip_config_npu3_U8.json

This produces the output model vit-b-16.img.fp32.axmodel.

3. Build the code

git clone https://github.com/AXERA-TECH/CLIP-ONNX-AX650-CPP.git

1. Download the opencv and onnxruntime dependencies

2. Modify CMakeLists.txt

Open CMakeLists.txt and add the following at the top:

set(CMAKE_C_COMPILER "aarch64-linux-gnu-gcc")

set(CMAKE_CXX_COMPILER "aarch64-linux-gnu-g++")

add_compile_options(-g3 -Wall)

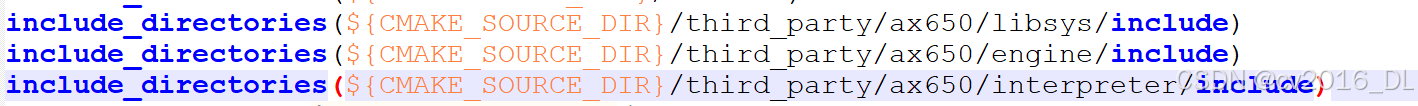

Add the include directories:

Add the dependency libraries:

mkdir build

cd build

Run:

cmake -DONNXRUNTIME_DIR=${onnxruntime_dir} -DOpenCV_DIR=${opencv_cmake_file_dir} -DBSP_MSP_DIR=${msp_out_dir} -DBUILD_WITH_AX650=ON ..

make -j4
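The cmake command above relies on three shell variables that have to be set beforehand. As a minimal sketch, the hypothetical helper below prints the same command line and stops early when one of the variables is unset or empty, using the standard `${var:?message}` expansion. What each variable should point to is inferred from its name only: an onnxruntime install, the directory holding the OpenCV CMake config files, and the BSP msp output directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cmake command line used in this guide.
# ${var:?msg} aborts with msg if the variable is unset or empty.
print_cmake_cmd() {
    echo cmake \
        "-DONNXRUNTIME_DIR=${onnxruntime_dir:?set onnxruntime_dir first}" \
        "-DOpenCV_DIR=${opencv_cmake_file_dir:?set opencv_cmake_file_dir first}" \
        "-DBSP_MSP_DIR=${msp_out_dir:?set msp_out_dir first}" \
        -DBUILD_WITH_AX650=ON ..
}

# Example (placeholder paths, replace with your own):
#   onnxruntime_dir=/path/to/onnxruntime
#   opencv_cmake_file_dir=/path/to/opencv/lib/cmake/opencv4
#   msp_out_dir=/path/to/msp/out
#   print_cmake_cmd
```

Printing the command first makes it easy to review the resolved paths before running the real build.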

4. Run the program

Download feature_matmul.onnx:

# feature matmul model

wget https://github.com/ZHEQIUSHUI/CLIP-ONNX-AX650-CPP/releases/download/3models/feature_matmul.onnx

Then run:

./main -l 1 -v cn_vocab.txt -t cn_text.txt -i images/ --ienc cn_clip_vitb16.axmodel --tenc vitb16.txt.fp32.onnx -d feature_matmul.onnx