Training and Testing AlexNet on the FashionMNIST Dataset

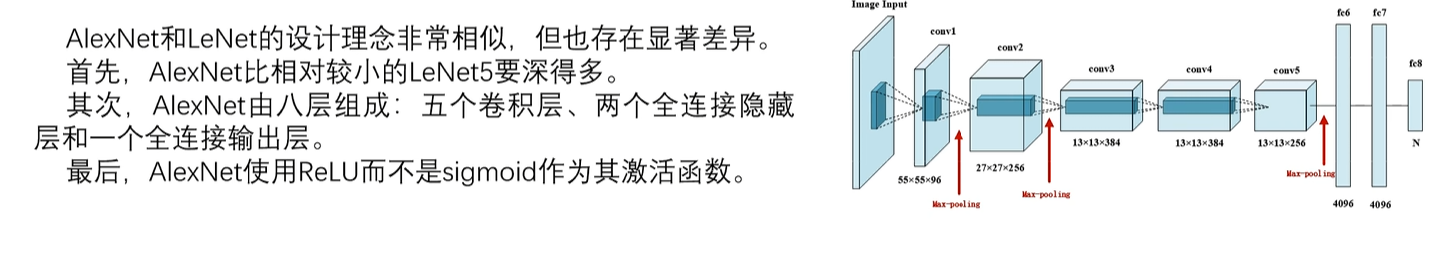

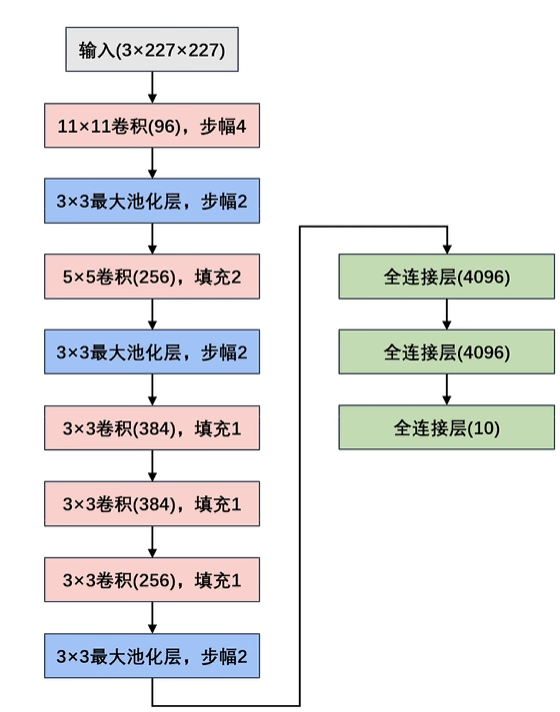

1. AlexNet Network Architecture

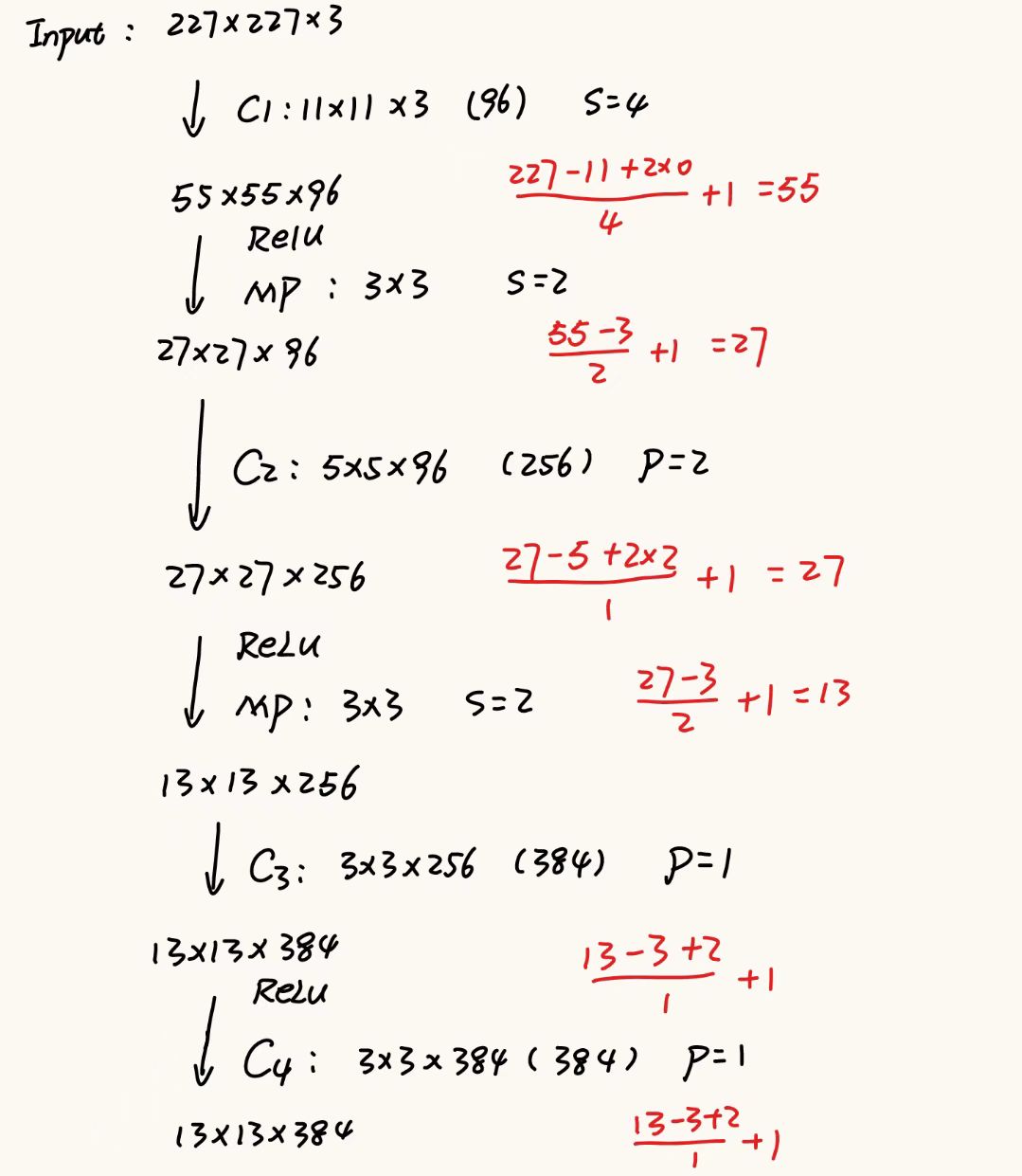

2. AlexNet Network Parameters
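Each convolution or max-pooling layer changes the side length of its input. For an input side W, kernel K, stride S and padding P, the output side is floor((W + 2P − K) / S) + 1, assuming dilation 1 and PyTorch's default floor mode. The sketch below applies this formula to the layer settings of the AlexNet class in section 3. A 227×227 input ends at 6×6, which is where fc1's `256*6*6` comes from. FashionMNIST's native 28×28 images shrink to nothing by the second pooling layer, and PyTorch reports that as an error. The names `LAYERS`, `out_size` and `trace_sizes` are hypothetical helpers for illustration only.

```python
# (name, kernel, stride, padding) of each size-changing layer in the AlexNet class
LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def out_size(w, k, s, p):
    # output-size formula for Conv2d / MaxPool2d with dilation 1 and floor rounding
    return (w + 2 * p - k) // s + 1


def trace_sizes(w):
    """Return [(layer_name, side_length), ...]; raise if a layer's output would be empty."""
    sizes = []
    for name, k, s, p in LAYERS:
        w = out_size(w, k, s, p)
        if w < 1:
            raise ValueError(f"{name}: output size {w} is too small")
        sizes.append((name, w))
    return sizes


print(trace_sizes(227))  # conv1 55, pool1 27, conv2 27, pool2 13, conv3-5 13, pool3 6
try:
    trace_sizes(28)
except ValueError as e:
    print(e)  # pool2: output size 0 is too small
```

With 256 channels out of conv5, the final 6×6 map flattens to 256 × 6 × 6 = 9216 features. That is the `in_features` of fc1.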

3. Implementing the Model in Code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F  # needed for dropout
from torchsummary import summary


class AlexNet(nn.Module):
    def __init__(self):
        super().__init__()
        # FashionMNIST images are grayscale, so conv1 takes 1 input channel
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=96, kernel_size=11, stride=4)
        self.relu = nn.ReLU()
        self.max_pool = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv2 = nn.Conv2d(in_channels=96, out_channels=256, kernel_size=5, padding=2)
        self.conv3 = nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, padding=1)
        self.conv4 = nn.Conv2d(in_channels=384, out_channels=384, kernel_size=3, padding=1)
        self.conv5 = nn.Conv2d(in_channels=384, out_channels=256, kernel_size=3, padding=1)
        self.flatten = nn.Flatten()
        # a 227x227 input leaves a 256x6x6 feature map after the last pooling layer
        self.fc1 = nn.Linear(in_features=256 * 6 * 6, out_features=4096)
        self.fc2 = nn.Linear(in_features=4096, out_features=4096)
        self.fc3 = nn.Linear(in_features=4096, out_features=10)  # 10 FashionMNIST classes

    def forward(self, x):
        x = self.max_pool(self.relu(self.conv1(x)))
        x = self.max_pool(self.relu(self.conv2(x)))
        x = self.relu(self.conv3(x))
        x = self.relu(self.conv4(x))
        x = self.max_pool(self.relu(self.conv5(x)))
        x = self.flatten(x)
        x = self.relu(self.fc1(x))
        # F.dropout defaults to training=True; passing self.training turns dropout off after model.eval()
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.relu(self.fc2(x))
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.fc3(x)
        return x


if __name__ == '__main__':
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = AlexNet().to(device)
    # summary() prints the table itself and returns None, so no print() is needed
    summary(model, (1, 227, 227), device=device.type)
```

If the input is 227*227*3 (with conv1 changed to in_channels=3), the total number of parameters is 58,322,314.

If the input is 227*227*1, as in the code above, the total number of parameters is 58,299,082.
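Both totals can be checked by hand. A Conv2d layer has out_channels × in_channels × k × k weights plus out_channels biases. A Linear layer has in_features × out_features weights plus out_features biases. ReLU, max pooling and Flatten have no parameters. The plain-Python sketch below redoes that arithmetic for the layer sizes in the AlexNet class. `alexnet_param_count` and its helpers are hypothetical names, not part of the original code.

```python
def conv_params(in_ch, out_ch, k):
    # k x k weights for every (input, output) channel pair, plus one bias per output channel
    return out_ch * in_ch * k * k + out_ch


def linear_params(in_f, out_f):
    # weight matrix plus one bias per output feature
    return in_f * out_f + out_f


def alexnet_param_count(in_channels):
    # layer sizes copied from the AlexNet class; only conv1 depends on in_channels
    convs = [
        conv_params(in_channels, 96, 11),
        conv_params(96, 256, 5),
        conv_params(256, 384, 3),
        conv_params(384, 384, 3),
        conv_params(384, 256, 3),
    ]
    fcs = [
        linear_params(256 * 6 * 6, 4096),
        linear_params(4096, 4096),
        linear_params(4096, 10),
    ]
    return sum(convs) + sum(fcs)


print(f"{alexnet_param_count(1):,}")  # 58,299,082
print(f"{alexnet_param_count(3):,}")  # 58,322,314
```

The gap between the two totals is just conv1's extra weights: 2 × 96 × 11 × 11 = 23,232. Most of the parameters sit in fc1 (9216 × 4096 weights).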

4. Implementing AlexNet Training in Code

```python
import torch  # PyTorch core library
from torchvision.datasets import FashionMNIST  # FashionMNIST dataset
from torchvision import transforms  # image preprocessing
import torch.utils.data as data  # random_split and DataLoader
import torch.nn as nn  # layers and loss functions
import matplotlib.pyplot as plt  # plotting
from model import AlexNet  # AlexNet network defined in model.py above
import copy
import time
import pandas as pd


# === Data loading ===
def train_val_process():
    # Preprocessing; the random augmentation helps reduce overfitting
    transform = transforms.Compose([
        transforms.Resize((227, 227)),  # resize images to 227x227
        # transforms.CenterCrop((227, 227)),  # center crop
        # transforms.RandomCrop((227, 227)),  # random crop
        transforms.RandomHorizontalFlip(),  # random horizontal flip
        transforms.ToTensor(),  # convert to tensor and scale to [0, 1]
        # transforms.ColorJitter(),  # adjust brightness, contrast, saturation
        transforms.Normalize((0.2860,), (0.3530,))  # standardize; these values can be computed with a script
    ])
    # Download the training set
    train_data = FashionMNIST(root='./data', train=True, transform=transform, download=True)
    # Split 80% training / 20% validation; the two lengths must add up to len(train_data)
    train_len = round(len(train_data) * 0.8)
    train_data, val_data = data.random_split(train_data, [train_len, len(train_data) - train_len])
    # Note: val_data shares the transform above, so it is also randomly flipped
    train_loader = data.DataLoader(train_data, batch_size=64, shuffle=True, num_workers=4)
    val_loader = data.DataLoader(val_data, batch_size=64, shuffle=False, num_workers=4)
    return train_loader, val_loader


def model_train_process(model, train_loader, val_loader, num_epochs):
    # Use the GPU if available, otherwise the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    # Cross-entropy loss: regression usually uses MSE, classification usually uses cross-entropy
    criterion = nn.CrossEntropyLoss()
    model = model.to(device)
    # Copy the current weights so the best-performing ones can be kept
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0  # best validation accuracy so far
    train_loss_all = []  # per-epoch training loss
    val_loss_all = []  # per-epoch validation loss
    train_acc_all = []  # per-epoch training accuracy
    val_acc_all = []  # per-epoch validation accuracy
    since = time.time()  # start time, used to report training time

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch + 1, num_epochs))
        print('-' * 30)
        train_loss, val_loss = 0.0, 0.0
        train_acc, val_acc = 0, 0
        train_num, val_num = 0, 0  # number of training / validation samples

        # Training: take (features, labels) from train_loader one batch at a time
        model.train()  # dropout on
        for inputs, targets in train_loader:
            inputs, targets = inputs.to(device), targets.to(device)
            outputs = model(inputs)  # forward pass (raw logits)
            pred = torch.argmax(outputs, dim=1)  # predicted class = index of the largest logit in each row
            loss = criterion(outputs, targets)  # mean loss over this batch
            optimizer.zero_grad()  # clear old gradients
            loss.backward()  # backpropagation
            optimizer.step()  # update the weights
            # loss.item() is a batch mean; inputs.size(0) is the batch size
            train_loss += loss.item() * inputs.size(0)
            train_acc += torch.sum(pred == targets).item()
            train_num += inputs.size(0)

        # Validation: dropout off, no gradient tracking
        model.eval()
        with torch.no_grad():
            for inputs, targets in val_loader:
                inputs, targets = inputs.to(device), targets.to(device)
                outputs = model(inputs)
                pred = torch.argmax(outputs, dim=1)
                loss = criterion(outputs, targets)
                val_loss += loss.item() * inputs.size(0)
                val_acc += torch.sum(pred == targets).item()
                val_num += inputs.size(0)

        time_use = time.time() - since
        print("Training time: {:.0f}m{:.0f}s".format(time_use // 60, time_use % 60))
        # Save and print this epoch's loss and accuracy
        train_loss_all.append(train_loss / train_num)
        val_loss_all.append(val_loss / val_num)
        train_acc_all.append(train_acc / train_num)
        val_acc_all.append(val_acc / val_num)
        print("{} Train Loss:{:.4f} Train Acc:{:.4f}".format(epoch + 1, train_loss_all[-1], train_acc_all[-1]))
        print("{} Val Loss:{:.4f} Val Acc:{:.4f}".format(epoch + 1, val_loss_all[-1], val_acc_all[-1]))

        # Keep the weights with the highest validation accuracy
        if val_acc_all[-1] > best_acc:
            best_acc = val_acc_all[-1]
            best_model_wts = copy.deepcopy(model.state_dict())

    # Load the best weights and save them
    model.load_state_dict(best_model_wts)
    torch.save(model.state_dict(), './best_model.pth')
    train_process = pd.DataFrame(data={"epoch": range(1, num_epochs + 1),
                                       "train_loss_all": train_loss_all,
                                       "train_acc_all": train_acc_all,
                                       "val_loss_all": val_loss_all,
                                       "val_acc_all": val_acc_all})
    return train_process


def plot(train_process):
    plt.figure(figsize=(12, 4))  # figure size 12x4
    plt.subplot(121)  # 1 row, 2 columns; left panel: loss
    plt.plot(train_process['epoch'], train_process.train_loss_all, '-ro', label='train_loss')
    plt.plot(train_process['epoch'], train_process.val_loss_all, '-bs', label='val_loss')
    plt.legend()
    plt.xlabel('epoch')
    plt.ylabel('loss')
    plt.subplot(122)  # right panel: accuracy
    plt.plot(train_process['epoch'], train_process.train_acc_all, '-ro', label='train_acc')
    plt.plot(train_process['epoch'], train_process.val_acc_all, '-bs', label='val_acc')
    plt.legend()
    plt.xlabel('epoch')
    plt.ylabel('accuracy')
    plt.show()


if __name__ == '__main__':
    model = AlexNet()
    train_loader, val_loader = train_val_process()
    train_process = model_train_process(model, train_loader, val_loader, num_epochs=50)
    plot(train_process)
```

Changes made:

1) from model import AlexNet

2) Image size: 28*28 ---> 227*227

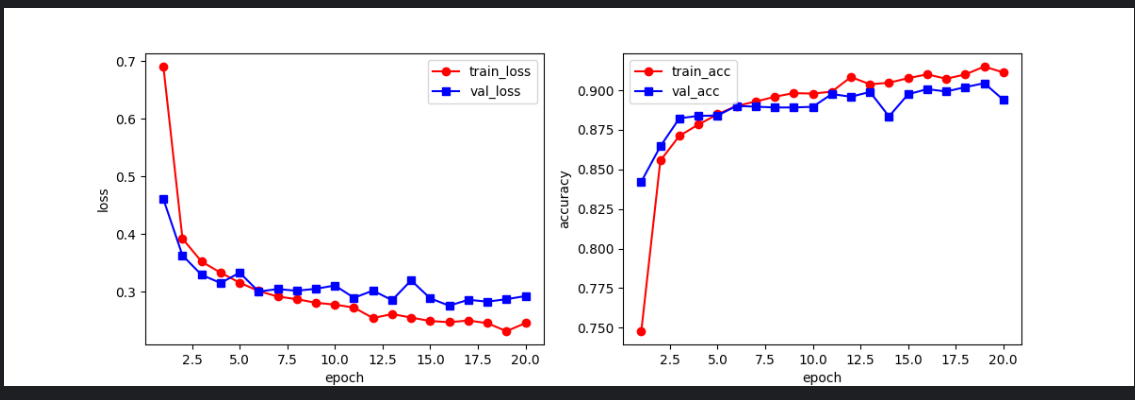

Training results
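In the training loop, `loss.item()` is the mean loss of one batch. It is therefore multiplied by `inputs.size(0)` before summing, and the total is divided by the number of samples. This matters when the last batch is smaller. With batch_size=64, the 48,000 training images divide evenly, but the 12,000 validation images give 187 full batches plus one batch of 32. The plain-Python sketch below uses made-up batch means purely for illustration. It compares this sample-weighted average with a naive mean of the batch means. `epoch_mean_loss` is a hypothetical helper name.

```python
def epoch_mean_loss(batch_means, batch_sizes):
    # sum(batch mean * batch size) / total samples = exact per-sample mean loss
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical numbers: two full batches of 64 and a final batch of 32
batch_means = [0.50, 0.40, 0.10]
batch_sizes = [64, 64, 32]

weighted = epoch_mean_loss(batch_means, batch_sizes)
naive = sum(batch_means) / len(batch_means)
print(round(weighted, 4), round(naive, 4))  # 0.38 0.3333
```

The naive average gives the small last batch the same weight as a full one. The weighted version matches what you would get by averaging the loss over every sample individually. Accuracy needs no such correction, because the loop already sums correct-prediction counts.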

5. Implementing AlexNet Testing in Code

```python
import torch
from torch.utils.data import DataLoader
from torchvision import transforms
from torchvision.datasets import FashionMNIST
from model import AlexNet


def test_data_loader():
    # Same deterministic preprocessing as training, without the random flip
    transform = transforms.Compose([
        transforms.Resize((227, 227)),
        transforms.ToTensor(),
        transforms.Normalize((0.2860,), (0.3530,))
    ])
    test_data = FashionMNIST(root='./data', train=False, transform=transform, download=True)
    test_loader = DataLoader(test_data, batch_size=1, shuffle=False)
    return test_loader


def test_model(model, test_loader):
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model.to(device)
    model.eval()  # dropout off
    test_corrects = 0
    total = 0
    with torch.no_grad():
        for inputs, labels in test_loader:
            inputs = inputs.to(device)
            labels = labels.to(device)
            outputs = model(inputs)
            preds = torch.argmax(outputs, dim=1)
            test_corrects += torch.sum(preds == labels).item()
            total += labels.size(0)
    test_acc = test_corrects / total
    print(f'Test Accuracy: {test_acc * 100:.2f}%')
    return test_acc


def predict(model, test_loader):
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = model.to(device)
    model.eval()
    classes = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal',
               'Shirt', 'Sneaker', 'Bag', 'Ankle boot']
    with torch.no_grad():
        for inputs, labels in test_loader:
            inputs = inputs.to(device)
            labels = labels.to(device)
            outputs = model(inputs)
            preds = torch.argmax(outputs, dim=1)
            # batch_size=1, so .item() returns a single class index
            pred_label = preds.item()
            true_label = labels.item()
            print(f"Predicted: {classes[pred_label]} ------ Actual: {classes[true_label]}")


if __name__ == '__main__':
    model = AlexNet()
    # Load the weights saved by the training script; map_location lets GPU-saved weights load on a CPU-only machine
    model.load_state_dict(torch.load('./best_model.pth', map_location='cpu'))
    test_loader = test_data_loader()
    test_acc = test_model(model, test_loader)
    predict(model, test_loader)
```

The image preprocessing for training and testing must be consistent. Both use the same Resize((227, 227)), ToTensor() and Normalize() steps. Only the random augmentation (RandomHorizontalFlip) is left out at test time.

```python
from torchvision import transforms

# Training preprocessing
transform = transforms.Compose([
    transforms.Resize((227, 227)),  # resize images to 227x227
    # transforms.CenterCrop((227, 227)),  # center crop
    # transforms.RandomCrop((227, 227)),  # random crop
    transforms.RandomHorizontalFlip(),  # random horizontal flip
    transforms.ToTensor(),  # convert to tensor and scale to [0, 1]
    # transforms.ColorJitter(),  # adjust brightness, contrast, saturation
    transforms.Normalize((0.2860,), (0.3530,))  # standardize; these values can be computed with a script
])

# Test preprocessing
transform = transforms.Compose([
    transforms.Resize((227, 227)),
    transforms.ToTensor(),
    transforms.Normalize((0.2860,), (0.3530,))
])
```
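The constants in Normalize((0.2860,), (0.3530,)) are the mean and standard deviation of FashionMNIST pixel values after ToTensor() scales them to [0, 1]. As the comment says, they can be computed with a script. Below is a minimal NumPy sketch. It assumes the raw uint8 training images are available as an array, and `pixel_mean_std` is a hypothetical helper name. Computing on the 28×28 originals ignores the small change that resizing to 227×227 introduces.

```python
import numpy as np


def pixel_mean_std(images_uint8):
    """Mean and std of all pixels after scaling uint8 values to [0, 1], as ToTensor() does."""
    x = np.asarray(images_uint8, dtype=np.float64) / 255.0
    return x.mean(), x.std()  # population std over every pixel of every image


# Tiny synthetic check: half black, half white pixels
demo = np.array([[0, 255], [0, 255]], dtype=np.uint8)
mean, std = pixel_mean_std(demo)
print(mean, std)  # 0.5 0.5
```

For the real dataset you could pass `FashionMNIST(root='./data', train=True, download=True).data.numpy()`, the uint8 array of shape (60000, 28, 28). Then compare the result with the (0.2860,) and (0.3530,) used in both pipelines. Converting all 60,000 images to float64 takes a few hundred MB of memory.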