Notes from Fixing HBase Pitfalls in Production: HBase Client Login, Kerberos Authentication, Port List, and Hand-Writing Code on a Pod [HBase Best Practices]

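Background

1. The software system (a data transfer/dump system) had to be migrated to a new production environment: a domestic (Chinese) operating system and a domestic resource pool, while the HBase storage stayed the same.

2. In the old environment, the transfer system already had performance problems writing to HBase, and writes to HBase failed for some provinces (a 20% failure rate).

3. All 31 provinces nationwide write large volumes of data.

4. The earlier attempt to migrate to the domestic environment had failed, and the author had never worked on this six-year-old project before.

5. Migrating to the new environment involved many tasks, such as opening host ports and establishing network connectivity between hosts.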

Environment

1. Oracle JDK 8u202 (the last Oracle JDK 8 release that does not require a commercial license). Download: Oracle JDK8u202

2. HBase 1.1.2.2.5, Apache HBase – Apache HBase Downloads (the project dates back several years, so the version is old; it has been archived: Index of /dist/hbase)

3. Hadoop 2.7.1.2.5, Apache Hadoop (archived: Central Repository: org/apache/hadoop/hadoop-common/2.7.1)

4. Kerberos 1.10.3-10, MIT Kerberos Consortium (archived: Historic MIT Kerberos Releases)

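HBase Test Code

Without further ado, here is the test code the author wrote by hand on the pod. It covers only the login step, because once login succeeds, the remaining problems can all be solved:

Main class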

// Default package: the original listing declared "package com.asiainfo.crm.ai;", but the
// run command below and the stack traces in the FAQ use the plain class name Transfer2Application.
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.security.UserProvider;
import org.apache.hadoop.hbase.shaded.com.google.common.base.Preconditions;
import org.apache.hadoop.hbase.util.Strings;
import org.apache.hadoop.net.DNS;

/**
 * Build and run on the pod:
 *   export LIB_HOME=/app/tomcat/webapps/ROOT/WEB-INF/lib/
 *   javac -d ../classes -cp $LIB_HOME\*:. Transfer2Application.java
 *   java -cp $LIB_HOME\*:. Transfer2Application
 */
public class Transfer2Application
{
    public static void main(String[] args)
    {
        try
        {
            System.out.println("################## hello world! 1 ");
            // Initialize the HBase connection information
            initHbase();
        }
        catch (Exception e)
        {
            e.printStackTrace();
        }
    }

    /**
     * Initializes the HBase connection information at system startup, including Kerberos authentication.
     *
     * @throws Exception if the Kerberos login fails
     */
    private static void initHbase() throws Exception
    {
        System.out.println("################## hello world! initHbase ");
        System.getProperties().setProperty("hadoop.home.dir", "/app/tomcat/temp/hbase");
        // Must point to an existing krb5.conf that defines default_realm (see Q1)
        System.setProperty("java.security.krb5.conf", "/app/tomcat/krb5.conf");

        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.keytab.file", "/app/tomcat/gz**.app.keytab");
        conf.set("hbase.kerberos.principal", "gz***/gz**-***.dcs.com@DCS.COM");

        UserProvider userProvider = UserProvider.instantiate(conf);
        // login the server principal (if using secure Hadoop)
        // Both checks read the configuration on the classpath: hadoop.security.authentication
        // and hbase.security.authentication must both be "kerberos"
        if (userProvider.isHadoopSecurityEnabled() && userProvider.isHBaseSecurityEnabled())
        {
            // Local host name, used by login() to replace a "_HOST" placeholder in the principal
            String machineName = Strings.domainNamePointerToHostName(DNS.getDefaultHost(
                    conf.get("hbase.rest.dns.interface", "default"),
                    conf.get("hbase.rest.dns.nameserver", "default")));

            String keytabFilename = conf.get("hbase.keytab.file");
            Preconditions.checkArgument(keytabFilename != null && !keytabFilename.isEmpty(),
                    "hbase.keytab.file" + " should be set if security is enabled");

            String principalConfig = conf.get("hbase.kerberos.principal");
            Preconditions.checkArgument(principalConfig != null && !principalConfig.isEmpty(),
                    "hbase.kerberos.principal" + " should be set if security is enabled");

            // login() takes the configuration KEYS, not their values; it reads the values from conf
            userProvider.login("hbase.keytab.file", "hbase.kerberos.principal", machineName);
            System.out.println("########## login success! #######");
        }
        else
        {
            System.out.println("Login preconditions not met!");
            System.out.println("userProvider.isHadoopSecurityEnabled() :" + userProvider.isHadoopSecurityEnabled());
            System.out.println("userProvider.isHBaseSecurityEnabled():" + userProvider.isHBaseSecurityEnabled());
        }
    }
}
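The code passes machineName to userProvider.login because Hadoop's login path can substitute the host name into a principal that contains the `_HOST` placeholder (for example `hbase/_HOST@DCS.COM`). That lets one configured principal serve many hosts.

The sketch below is a simplified, illustrative re-implementation of that substitution, modeled on Hadoop's `SecurityUtil.getServerPrincipal`. It is not Hadoop's code. The class name `HostPrincipal` and the example principals are hypothetical, and the real method also has a fallback for a missing host name, which is omitted here. A principal without `_HOST`, like the one configured above, is used unchanged, so machineName has no effect in that case.

```java
import java.util.Locale;

/**
 * Illustrative sketch (hypothetical class, not Hadoop's implementation): replaces the
 * "_HOST" placeholder in a Kerberos principal of the form primary/instance@REALM.
 */
public class HostPrincipal {

    static final String HOSTNAME_PATTERN = "_HOST";

    static String resolve(String principalConfig, String hostname) {
        // "hbase/_HOST@DCS.COM" -> ["hbase", "_HOST", "DCS.COM"]
        String[] parts = principalConfig.split("[/@]");
        if (parts.length != 3 || !parts[1].equals(HOSTNAME_PATTERN)) {
            return principalConfig; // no placeholder: the principal is used as configured
        }
        // The host name is lower-cased before it is placed into the principal
        return parts[0] + "/" + hostname.toLowerCase(Locale.US) + "@" + parts[2];
    }

    public static void main(String[] args) {
        // prints hbase/node01.dcs.com@DCS.COM
        System.out.println(resolve("hbase/_HOST@DCS.COM", "Node01.DCS.com"));
        // prints hbase/node01.dcs.com@DCS.COM (already concrete, so unchanged)
        System.out.println(resolve("hbase/node01.dcs.com@DCS.COM", "other.dcs.com"));
    }
}
```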

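Key commands for hand-writing code on the pod

# lib directory on the application pod, used to set the Java classpath

export LIB_HOME=/app/tomcat/webapps/ROOT/WEB-INF/lib/

# Enter the src directory and create the main class source file there

cd src

# Compile the main class with every jar under the lib directory on the classpath
# (JDK 8 javac does not create the -d output directory, so create it first)

mkdir -p ../classes

javac -d ../classes -cp $LIB_HOME\*:. Transfer2Application.java

# Enter the classes directory

cd ../classes

# Run the compiled class

java -cp $LIB_HOME\*:. Transfer2Application

Log of a successful run (shell-based fallback, native library not loaded)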

################## hello world! 1

################## hello world! initHbase

14:23:01.194 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0

14:23:01.207 [main] DEBUG org.apache.hadoop.security.Groups - Creating new Groups object

14:23:01.234 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

14:23:01.235 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

14:23:01.235 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

14:23:01.235 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14:23:01.235 [main] DEBUG org.apache.hadoop.util.PerformanceAdvisory - Falling back to shell based

14:23:01.235 [main] DEBUG org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback - Group mapping impl=org.apache.hadoop.security.ShellBasedUnixGroupsMapping

14:23:01.285 [main] DEBUG org.apache.hadoop.security.Groups - Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

14:23:01.338 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of successful kerberos logins and latency (milliseconds)])

14:23:01.345 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of failed kerberos logins and latency (milliseconds)])

14:23:01.345 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[GetGroups])

14:23:01.346 [main] DEBUG org.apache.hadoop.metrics2.impl.MetricsSystemImpl - UgiMetrics, User and group related metrics

14:23:01.556 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - hadoop login

14:23:01.557 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - hadoop login commit

14:23:01.558 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - using kerberos user:gz***/gz***-ctdfs01.dcs.com@DCS.COM

14:23:01.558 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - Using user: "gz***/gz***-ctdfs01.dcs.com@DCS.COM" with name gz***/gz***-ctdfs01.dcs.com@DCS.COM

14:23:01.558 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - User entry: "gz***/gz***-ctdfs01.dcs.com@DCS.COM"

14:23:01.558 [main] INFO org.apache.hadoop.security.UserGroupInformation - Login successful for user gz***/gz***-ctdfs01.dcs.com@DCS.COM using keytab file /app/tomcat/gz***.app.keytab

########## login success! #######

Log of a successful run (with the native library loaded)

sh-4.2# java -cp $LIB_HOME\*:. Transfer2Application

################## hello world! 1

################## hello world! initHbase

17:46:05.469 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0

17:46:05.481 [main] DEBUG org.apache.hadoop.security.Groups - Creating new Groups object

17:46:05.504 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

17:46:05.504 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Loaded the native-hadoop library

17:46:05.504 [main] DEBUG org.apache.hadoop.security.JniBasedUnixGroupsMapping - Using JniBasedUnixGroupsMapping for Group resolution

17:46:05.505 [main] DEBUG org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback - Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMapping

17:46:05.553 [main] DEBUG org.apache.hadoop.security.Groups - Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

17:46:05.604 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of successful kerberos logins and latency (milliseconds)])

17:46:05.611 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of failed kerberos logins and latency (milliseconds)])

17:46:05.611 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[GetGroups])

17:46:05.612 [main] DEBUG org.apache.hadoop.metrics2.impl.MetricsSystemImpl - UgiMetrics, User and group related metrics

17:46:05.818 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - hadoop login

17:46:05.819 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - hadoop login commit

17:46:05.820 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - using kerberos user:gzcrm/gzcrm-ctdfs01.dcs.com@DCS.COM

17:46:05.820 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - Using user: "gzcrm/gzcrm-ctdfs01.dcs.com@DCS.COM" with name gzcrm/gzcrm-ctdfs01.dcs.com@DCS.COM

17:46:05.820 [main] DEBUG org.apache.hadoop.security.UserGroupInformation - User entry: "gzcrm/gzcrm-ctdfs01.dcs.com@DCS.COM"

17:46:05.821 [main] INFO org.apache.hadoop.security.UserGroupInformation - Login successful for user gzcrm/gzcrm-ctdfs01.dcs.com@DCS.COM using keytab file /app/tomcat/gzcrm.app.keytab

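########## login success! #######

Note:

Running with the native Hadoop library loaded can give better performance when working with HBase. As the second log shows, group resolution then goes through JNI (JniBasedUnixGroupsMapping) instead of spawning shell commands (ShellBasedUnixGroupsMapping). The native library also provides native implementations of functions such as checksums and compression codecs.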
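To find out in advance which of the two logs a pod will produce, you can check whether java.library.path contains the file that `System.loadLibrary("hadoop")` looks for. That file name is `System.mapLibraryName("hadoop")`, which is `libhadoop.so` on Linux.

The diagnostic below is a hypothetical helper written for illustration; the class name `NativeLibCheck` is made up. It only checks that the file exists. A library built for the wrong architecture, or one with missing dependencies, would still fail to load at runtime.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical diagnostic: lists the directories of a library search path that contain
 * the file System.loadLibrary(libName) would look for (e.g. libhadoop.so on Linux).
 */
public class NativeLibCheck {

    /** Returns every directory entry in searchPath that contains the mapped library file, in search order. */
    static List<File> findLibrary(String searchPath, String libName) {
        String fileName = System.mapLibraryName(libName); // "libhadoop.so" on Linux
        List<File> hits = new ArrayList<>();
        for (String dir : searchPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            File candidate = new File(dir, fileName);
            if (candidate.isFile()) { // follows symlinks, so a dangling link is not counted
                hits.add(candidate);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        String path = System.getProperty("java.library.path");
        System.out.println("java.library.path = " + path);
        List<File> hits = findLibrary(path, "hadoop");
        if (hits.isEmpty()) {
            System.out.println("No " + System.mapLibraryName("hadoop")
                    + " found: expect 'using builtin-java classes where applicable'");
        } else {
            // The JVM uses the first match in search order
            hits.forEach(f -> System.out.println("Found: " + f.getAbsolutePath()));
        }
    }
}
```

Run it with the same `java -cp ...` command line as the application, so it sees the same java.library.path.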

Frequently Asked Questions (FAQ):

Q1: At runtime, the log reports "Kerberos krb5 configuration not found"

18:39:46.030 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0

18:39:46.041 [main] DEBUG org.apache.hadoop.security.Groups - Creating new Groups object

18:39:46.058 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

18:39:46.059 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

18:39:46.059 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

18:39:46.059 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18:39:46.059 [main] DEBUG org.apache.hadoop.util.PerformanceAdvisory - Falling back to shell based

18:39:46.059 [main] DEBUG org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback - Group mapping impl=org.apache.hadoop.security.ShellBasedUnixGroupsMapping

18:39:46.113 [main] DEBUG org.apache.hadoop.security.Groups - Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

18:39:46.166 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of successful kerberos logins and latency (milliseconds)])

18:39:46.172 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of failed kerberos logins and latency (milliseconds)])

18:39:46.172 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[GetGroups])

18:39:46.173 [main] DEBUG org.apache.hadoop.metrics2.impl.MetricsSystemImpl - UgiMetrics, User and group related metrics

18:39:46.219 [main] DEBUG org.apache.hadoop.security.authentication.util.KerberosName - Kerberos krb5 configuration not found, setting default realm to empty

java.lang.IllegalArgumentException: Can't get Kerberos realm
    at org.apache.hadoop.security.HadoopKerberosName.setConfiguration(HadoopKerberosName.java:65)
    at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:277)
    at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:262)
    at org.apache.hadoop.security.UserGroupInformation.isAuthenticationMethodEnabled(UserGroupInformation.java:339)
    at org.apache.hadoop.security.UserGroupInformation.isSecurityEnabled(UserGroupInformation.java:333)
    at org.apache.hadoop.hbase.security.User$SecureHadoopUser.isSecurityEnabled(User.java:428)
    at org.apache.hadoop.hbase.security.User.isSecurityEnabled(User.java:268)
    at org.apache.hadoop.hbase.security.UserProvider.isHadoopSecurityEnabled(UserProvider.java:159)
    at Transfer2Application.initHbase(Transfer2Application.java:44)
    at Transfer2Application.main(Transfer2Application.java:22)

Caused by: java.lang.reflect.InvocationTargetException
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.security.authentication.util.KerberosUtil.getDefaultRealm(KerberosUtil.java:84)
    at org.apache.hadoop.security.HadoopKerberosName.setConfiguration(HadoopKerberosName.java:63)
    ... 9 more

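Caused by: KrbException: Cannot locate default realm
    at sun.security.krb5.Config.getDefaultRealm(Config.java:1029)
    ... 15 more

A: The JVM could not find a usable krb5.conf ("Kerberos krb5 configuration not found, setting default realm to empty"), so no default realm could be determined. Make sure java.security.krb5.conf points to a krb5.conf that actually exists and defines default_realm in its [libdefaults] section (/app/tomcat/krb5.conf in the code above). Also make sure the property is set before the first Hadoop/HBase security call, such as userProvider.isHadoopSecurityEnabled().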
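The Q1 failure only shows up once Hadoop's security code first runs. A small pre-flight check can catch it earlier: before setting java.security.krb5.conf, confirm that the file exists and that its [libdefaults] section defines default_realm.

The sketch below is a hypothetical helper (class and method names are made up). It uses a deliberately simple line parser, not the full krb5.conf grammar; for example, include directives are ignored.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

/** Hypothetical pre-flight check for the krb5.conf that java.security.krb5.conf will point to. */
public class Krb5ConfCheck {

    /** Returns default_realm from the [libdefaults] section, or empty if the file or the key is missing. */
    static Optional<String> findDefaultRealm(Path krb5Conf) throws IOException {
        if (!Files.isRegularFile(krb5Conf)) {
            return Optional.empty(); // missing file: the situation behind Q1
        }
        boolean inLibdefaults = false;
        for (String raw : Files.readAllLines(krb5Conf, StandardCharsets.UTF_8)) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#") || line.startsWith(";")) {
                continue; // blank line or comment
            }
            if (line.startsWith("[")) {
                // A section header such as [libdefaults] or [realms] starts a new section
                inLibdefaults = line.equals("[libdefaults]");
                continue;
            }
            int eq = line.indexOf('=');
            if (inLibdefaults && eq > 0 && line.substring(0, eq).trim().equals("default_realm")) {
                String realm = line.substring(eq + 1).trim();
                if (!realm.isEmpty()) {
                    return Optional.of(realm);
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) throws IOException {
        Path krb5Conf = Paths.get(args.length > 0 ? args[0] : "/app/tomcat/krb5.conf");
        Optional<String> realm = findDefaultRealm(krb5Conf);
        if (realm.isPresent()) {
            // Set the property only once the file is known to be usable
            System.setProperty("java.security.krb5.conf", krb5Conf.toString());
            System.out.println("default_realm = " + realm.get());
        } else {
            System.err.println("No krb5.conf with a default_realm at " + krb5Conf);
        }
    }
}
```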

Q2: At runtime, the error "hbase.keytab.file should be set if security is enabled" is thrown

18:41:21.911 [main] DEBUG org.apache.hadoop.util.Shell - setsid exited with exit code 0

18:41:21.922 [main] DEBUG org.apache.hadoop.security.Groups - Creating new Groups object

18:41:21.945 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

18:41:21.945 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

18:41:21.945 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

18:41:21.946 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18:41:21.946 [main] DEBUG org.apache.hadoop.util.PerformanceAdvisory - Falling back to shell based

18:41:21.946 [main] DEBUG org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback - Group mapping impl=org.apache.hadoop.security.ShellBasedUnixGroupsMapping

18:41:21.995 [main] DEBUG org.apache.hadoop.security.Groups - Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

18:41:22.046 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of successful kerberos logins and latency (milliseconds)])

18:41:22.052 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[Rate of failed kerberos logins and latency (milliseconds)])

18:41:22.053 [main] DEBUG org.apache.hadoop.metrics2.lib.MutableMetricsFactory - field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(always=false, about=, sampleName=Ops, type=DEFAULT, valueName=Time, value=[GetGroups])

18:41:22.053 [main] DEBUG org.apache.hadoop.metrics2.impl.MetricsSystemImpl - UgiMetrics, User and group related metrics

java.lang.IllegalArgumentException: hbase.keytab.file should be set if security is enabled
    at org.apache.hadoop.hbase.shaded.com.google.common.base.Preconditions.checkArgument(Preconditions.java:92)
    at Transfer2Application.initHbase(Transfer2Application.java:53)
    at Transfer2Application.main(Transfer2Application.java:22)

A: hbase.keytab.file was not set correctly. Set it to the path of the keytab file, for example:

conf.set("hbase.keytab.file", "/app/tomcat/gzcrm.app.keytab");

Q3: At runtime, the log reports "Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path"

18:39:46.041 [main] DEBUG org.apache.hadoop.security.Groups - Creating new Groups object

18:39:46.058 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

18:39:46.059 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path

18:39:46.059 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

18:39:46.059 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

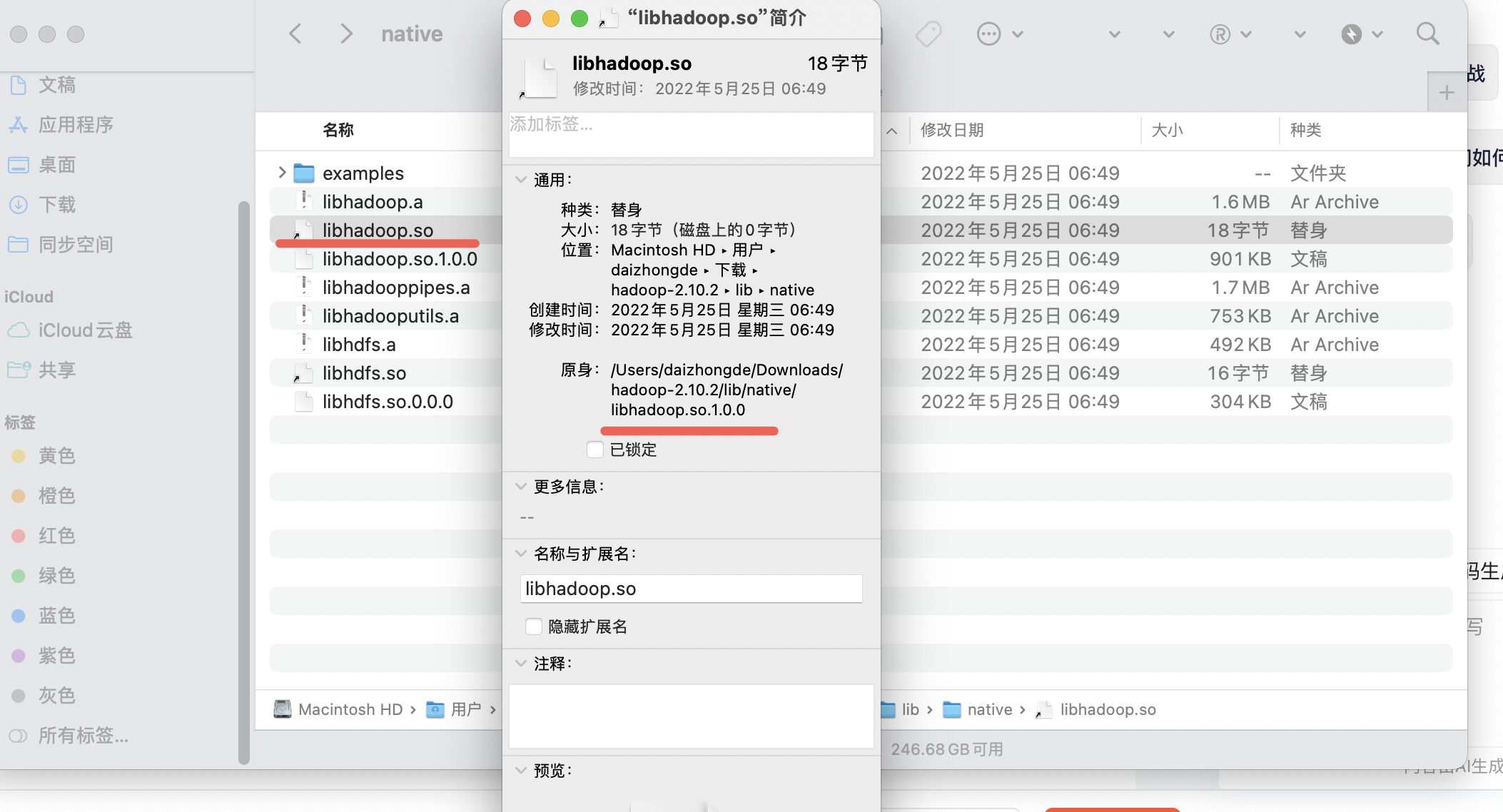

18:39:46.059 [main] DEBUG org.apache.hadoop.util.PerformanceAdvisory - Falling back to shell basedA:没有成功加载hadoop动态库。从官方下载动态库并拷贝到动态库路径中即可。官方动态库下载地址:https://archive.apache.org/dist/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz

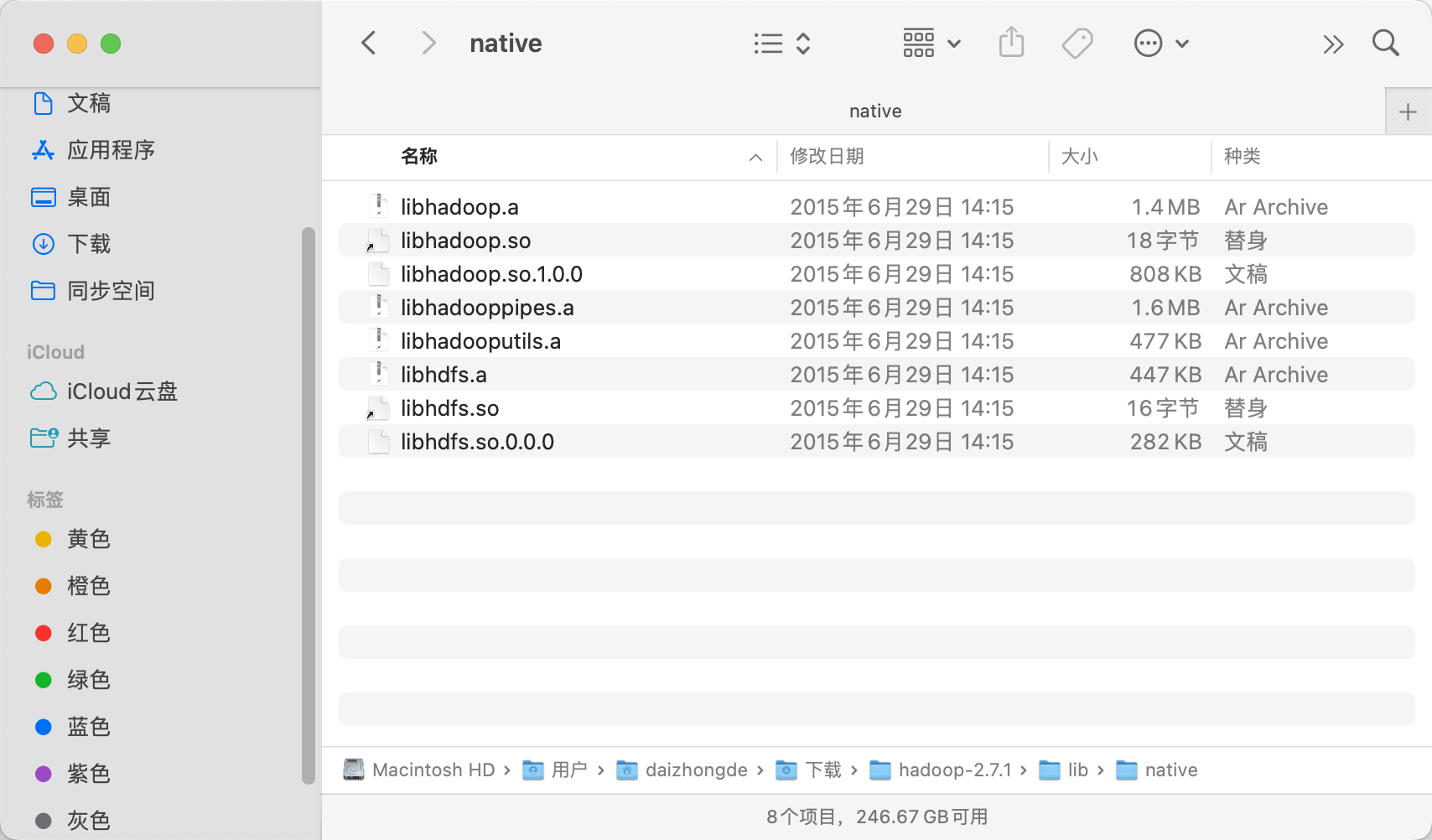

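Native library directory inside the archive: hadoop-2.7.1/lib/native

Copy all files in this directory to /usr/lib, which is one of the java.library.path directories printed in the log.

Note:

After a plain copy, /usr/lib does not contain a directly loadable library, so the library is still not loaded. The reason is that libhadoop.so and libhdfs.so in the distribution are symbolic links, and the links were lost during the copy. Because System.loadLibrary("hadoop") looks for exactly libhadoop.so on Linux, you need to do one of the following: recreate the links, copy the versioned files a second time under the unversioned names, or rename them. The session below uses a second copy: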

sh-4.2# ls -lh /usr/lib|grep hadoop

-rwxrwxrwx 1 root root 1.4M Aug 24 14:22 libhadoop.a

-rwxrwxrwx 1 root root 1.6M Aug 24 14:22 libhadooppipes.a

-rwxrwxrwx 1 root root 790K Aug 24 14:22 libhadoop.so.1.0.0

-rwxrwxrwx 1 root root 466K Aug 24 14:22 libhadooputils.a

sh-4.2#

sh-4.2#

sh-4.2# cd /usr/lib

sh-4.2# cp libhadoop.so.1.0.0 libhadoop.so

sh-4.2# cp libhdfs.so.0.0.0 libhdfs.so

sh-4.2#

sh-4.2# ls -lh /usr/lib|grep hadoop

-rwxrwxrwx 1 root root 1.4M Aug 24 14:22 libhadoop.a

-rwxrwxrwx 1 root root 1.6M Aug 24 14:22 libhadooppipes.a

-rwxr-xr-x 1 root root 790K Aug 24 17:44 libhadoop.so

-rwxrwxrwx 1 root root 790K Aug 24 14:22 libhadoop.so.1.0.0

-rwxrwxrwx 1 root root 466K Aug 24 14:22 libhadooputils.a

sh-4.2#

sh-4.2#

sh-4.2# ls -lh /usr/lib|grep hdfs

-rwxrwxrwx 1 root root 437K Aug 24 14:22 libhdfs.a

-rwxr-xr-x 1 root root 276K Aug 24 17:45 libhdfs.so

-rwxrwxrwx 1 root root 276K Aug 24 14:22 libhdfs.so.0.0.0

Checked locally:

hadoop-2.7.1

hadoop-2.10.2
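The link repair described in the Q3 note can also be automated. The sketch below takes the first of the note's three options: it recreates the unversioned symlinks instead of copying files.

It is a hypothetical helper; `RestoreSoLinks` is a made-up name. It only handles an explicit list of library names, so it never touches unrelated system libraries in /usr/lib. It assumes a Linux/POSIX file system with symbolic-link support and a user with write access to the directory.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: recreates unversioned .so symlinks lost when copying lib/native. */
public class RestoreSoLinks {

    /**
     * For each library name (e.g. "hadoop"), creates the file name the JVM loads
     * (libhadoop.so on Linux) as a symlink to a versioned file such as libhadoop.so.1.0.0,
     * unless that name already exists. Returns the links that were created.
     */
    static List<Path> restoreLinks(Path dir, String... libNames) throws IOException {
        List<Path> created = new ArrayList<>();
        for (String name : libNames) {
            Path link = dir.resolve(System.mapLibraryName(name));
            if (Files.exists(link, LinkOption.NOFOLLOW_LINKS)) {
                continue; // file or link already present: leave it alone
            }
            // Glob for versioned files, e.g. "libhadoop.so.*"
            try (DirectoryStream<Path> versions = Files.newDirectoryStream(dir, link.getFileName() + ".*")) {
                for (Path versioned : versions) {
                    if (Files.isRegularFile(versioned)) {
                        // Relative target, like the links shipped in hadoop-x.y.z/lib/native
                        Files.createSymbolicLink(link, versioned.getFileName());
                        created.add(link);
                        break; // one link per library; with several versions, the first one listed wins
                    }
                }
            }
        }
        return created;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(args.length > 0 ? args[0] : "/usr/lib");
        for (Path link : restoreLinks(dir, "hadoop", "hdfs")) {
            System.out.println("Created " + link + " -> " + Files.readSymbolicLink(link));
        }
    }
}
```

Running it twice is safe: the second run finds the links already in place and creates nothing.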

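Q4: Various network connectivity failures at runtime, including being unable to reach the Kerberos service

A: Open the network paths and the required ports on the HBase server hosts. The list is below (your actual port numbers may differ, but each of these services will have one):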

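| Port | Must be open for the client | Description |
| --- | --- | --- |
| 8090 | | Resource management web page port |
| 50470 | | HTTPS node access port |
| 8485 | √ | Shared directory (JournalNode) port |
| 8020 | √ | RPC port |
| 50070 | √ | Node HTTP access port (must be opened) |
| 2181 | √ | ZooKeeper service port (must be opened) |
| 8088 | √ | ResourceManager (RM) HTTP port |
| 16000 | √ | HBase Master service port |
| 3888 | √ | ZooKeeper leader-election port |
| 16010 | √ | HBase Master info port |
| 13562 | √ | MapReduce shuffle service port |
| 2049 | | NFS file system access port |
| 16100 | | Multicast port |
| 8080 | √ | REST service port |
| 16020 | √ | RegionServer (RS) port |
| 21 | | FTP port |
| 4242 | | NFS mount port |
| 88 | √ | KDC communication port |
| 80 | √ | Kerberos service port |
| 749 | √ | Optional port, mainly used by the Kerberos TCP administration service (kadmin) |
| 800 | | Used for communication between Kerberos KDCs, especially in environments spanning multiple KDCs |
| 801 | | Used for communication between Kerberos KDCs, especially in environments spanning multiple KDCs |
| 464 | √ | kpasswd service port, used to change Kerberos users' passwords |
| 750 | | Some implementations may use this port for additional kpasswd functionality |
| 636 | | If Kerberos is configured to use LDAP over SSL (LDAPS), the LDAPS service usually runs on this port. It is not part of Kerberos itself, but it is often used to manage Kerberos user accounts and policies |
| 9090 | | Thrift interface port, lets non-Java clients access HBase |
| 60020 | | RegionServer port that handles client read/write requests (the pre-1.0 HBase default; HBase 1.x defaults to 16020) |
| 2888 | | Used for communication between machines in the ZooKeeper ensemble; the leader listens on this port |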

Appendix 1: Script for batch-verifying network port connectivity

CSDN blog post: Batch-verifying network host port connectivity with a Linux shell script
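Besides the shell script above, the same check can be run from the JVM on the pod itself. That exercises exactly the network path the application will use.

The sketch below is a hypothetical helper; `PortCheck` and its methods are made-up names. It attempts a TCP connection to each port with a timeout. The default port list is taken from the "√" rows of the port table and should be adjusted to your cluster. It tests TCP only: Kerberos KDCs often serve port 88 over UDP as well, which this check cannot confirm.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical TCP connectivity check for a list of ports on one host. */
public class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host name not resolvable
        }
    }

    /** Checks every port on one host, keeping the order in which the ports were given. */
    static Map<Integer, Boolean> checkAll(String host, int timeoutMillis, int... ports) {
        Map<Integer, Boolean> result = new LinkedHashMap<>();
        for (int port : ports) {
            result.put(port, isReachable(host, port, timeoutMillis));
        }
        return result;
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost"; // pass an HBase/KDC host name
        // Ports marked "√" in the table above; adjust to your cluster
        int[] ports = {8485, 8020, 50070, 2181, 8088, 16000, 3888, 16010, 13562, 8080, 16020, 88, 80, 749, 464};
        checkAll(host, 3000, ports).forEach((port, ok) ->
                System.out.println(host + ":" + port + (ok ? " OPEN" : " CLOSED/FILTERED")));
    }
}
```

Run it once per server host, for example `java -cp . PortCheck <hbase-host>`. Each printed "CLOSED/FILTERED" line is a port to raise with the network team.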